
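We used ElevenLabs Agents to interview more than 230 ElevenReader app users in 24 hours.

Using ElevenLabs Agents, we interviewed more than 230 users of the ElevenReader app.

This post covers how we built the voice agent, what the test showed, and how you can apply the same approach to improve your own product.

Customer interviews matter, but they are hard to scale. A typical 15-minute live interview is valuable, yet scheduling many of them in a single day is difficult.

Coordinating calendars is hard, and for a small team, supporting multilingual users around the world is close to impossible. There is a physical limit to holding in-depth conversations around the clock.

Surveys scale easily, but they lose information. Feedback gets flattened into multiple-choice options, and the emotion and nuance of a one-on-one conversation drop out. Advances in voice AI and LLMs now make it possible to close this gap.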

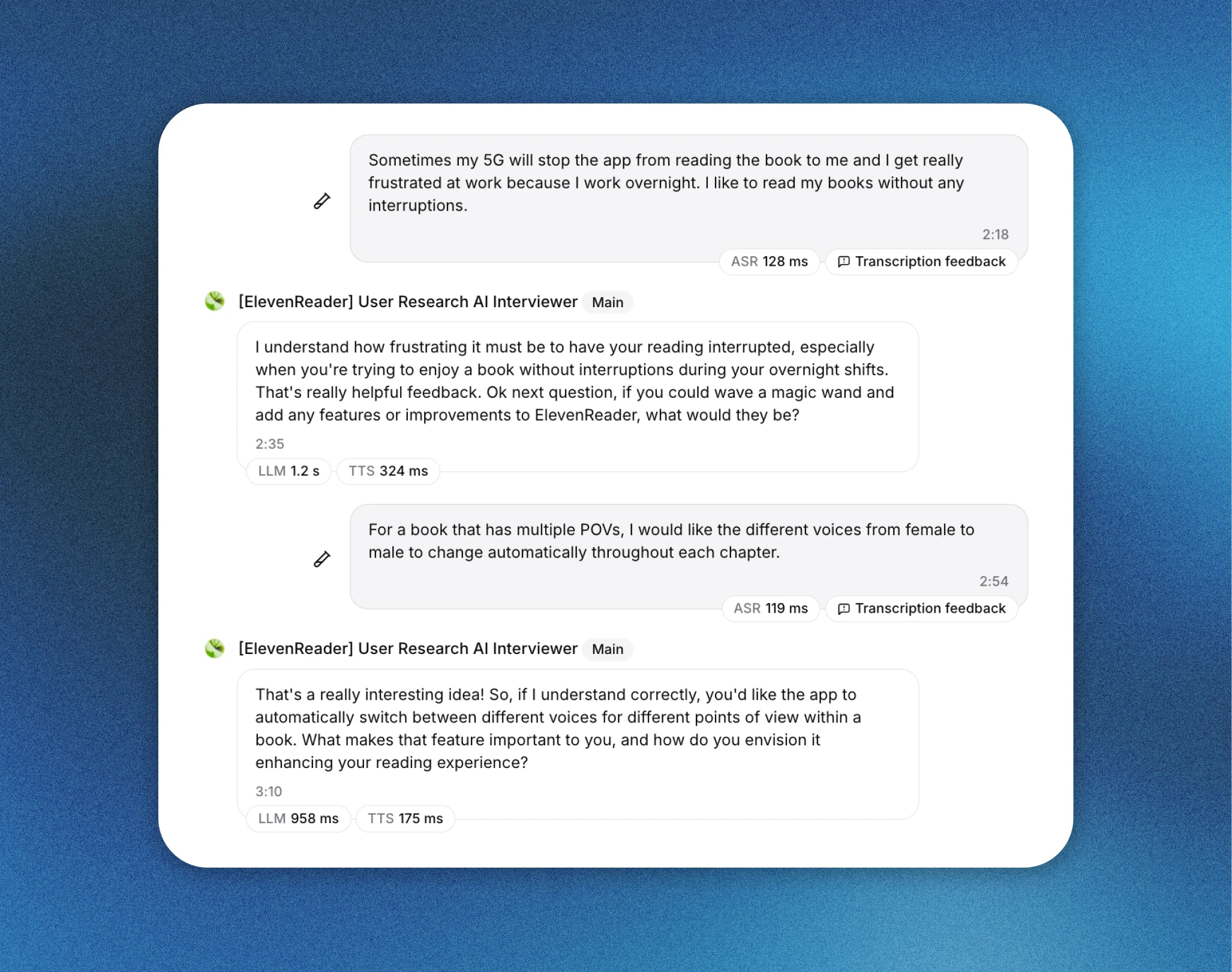

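We built an AI interviewer with ElevenLabs Agents and collected feedback by having it talk with users directly. It conducted more than 230 interviews within 24 hours, and we used the resulting insights to improve the app.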

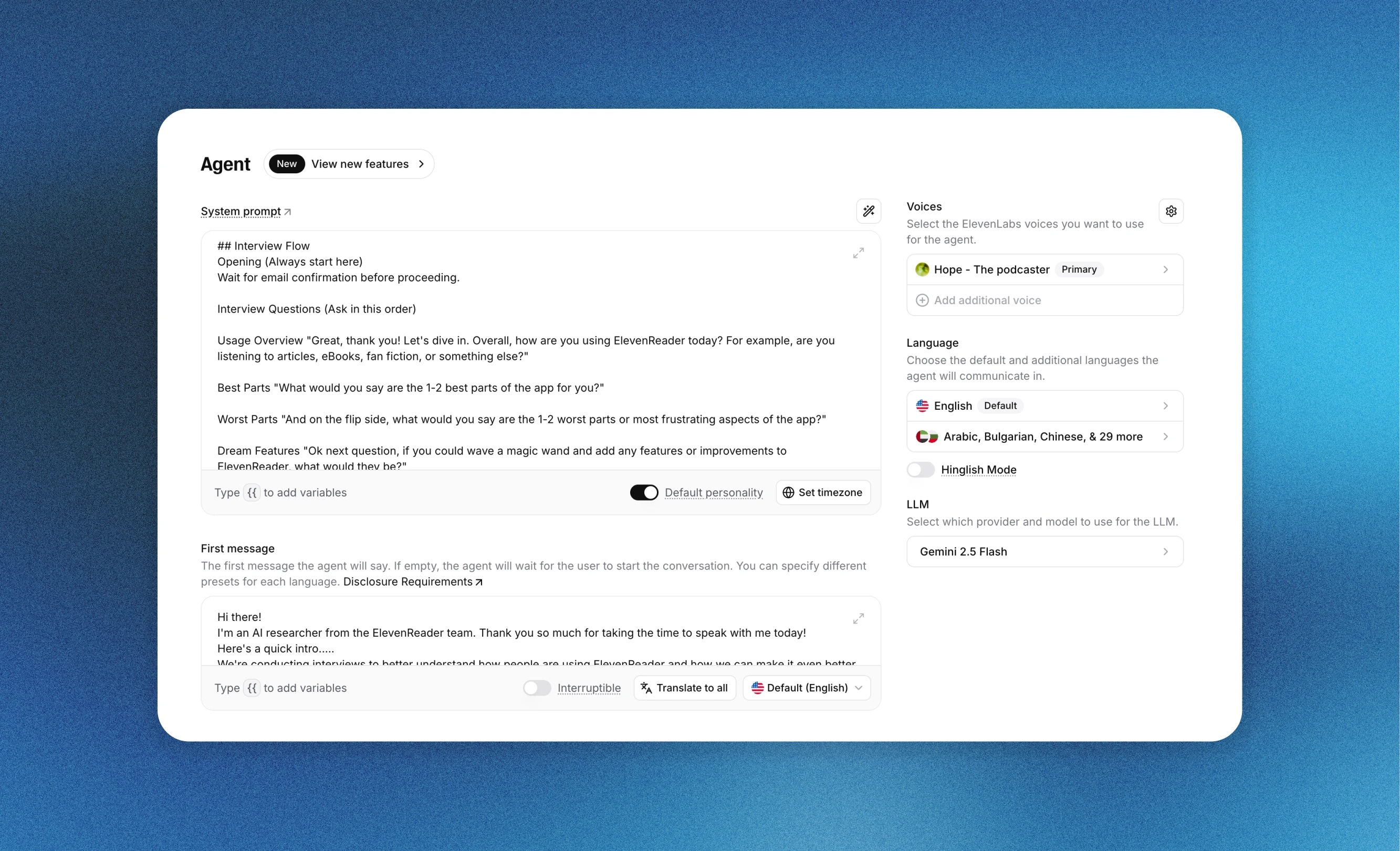

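We created a conversational researcher on the ElevenLabs Agents platform. The goal was to understand how ElevenReader app users feel about the app, focusing on four main areas.

We wanted a friendly, approachable tone, so we chose the voice "Hope - The podcaster", which feels like sitting across from an empathetic researcher. For the underlying logic we used Gemini 2.5 Flash, which balances low latency with strong reasoning.

We wrote a system prompt that instructs the agent to ask probing follow-up questions while keeping the conversation on track. When a user gave a vague or one-word answer, the agent was told to prompt for more detailed feedback. Before launch, we used ElevenLabs simulation tests to confirm that the agent could handle cases such as vague replies and inappropriate language.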
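To make the setup above concrete, here is a minimal sketch of how the voice, model, and prompt guidelines might be gathered in one place. The `InterviewerConfig` class, its field names, and the prompt wording are all hypothetical illustrations. This is not the ElevenLabs Agents API and not the actual system prompt used in the test.

```python
from dataclasses import dataclass, field


@dataclass
class InterviewerConfig:
    """Hypothetical container for the settings described above (not an ElevenLabs API)."""

    voice: str = "Hope - The podcaster"
    llm: str = "Gemini 2.5 Flash"
    max_minutes: int = 10
    topics: list[str] = field(default_factory=list)

    def system_prompt(self) -> str:
        """Assemble an illustrative system prompt from the guidelines."""
        lines = [
            "You are a friendly, empathetic user researcher.",
            "Ask one question at a time and follow up to dig deeper.",
            "If an answer is vague or a single word, politely ask for a concrete example.",
            "If the conversation drifts off topic, steer it back to the interview.",
            f"Wrap up politely after about {self.max_minutes} minutes.",
        ]
        if self.topics:
            lines.append("Cover these topics: " + "; ".join(self.topics) + ".")
        return "\n".join(lines)


# Placeholder topics; the real interview areas are not listed here.
config = InterviewerConfig(topics=["<topic 1>", "<topic 2>"])
print(config.system_prompt())
```

Keeping the guidelines as separate lines makes it easy to adjust one rule, such as the follow-up behavior for one-word answers, and rerun the simulation tests.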

The system prompt we used is available here.

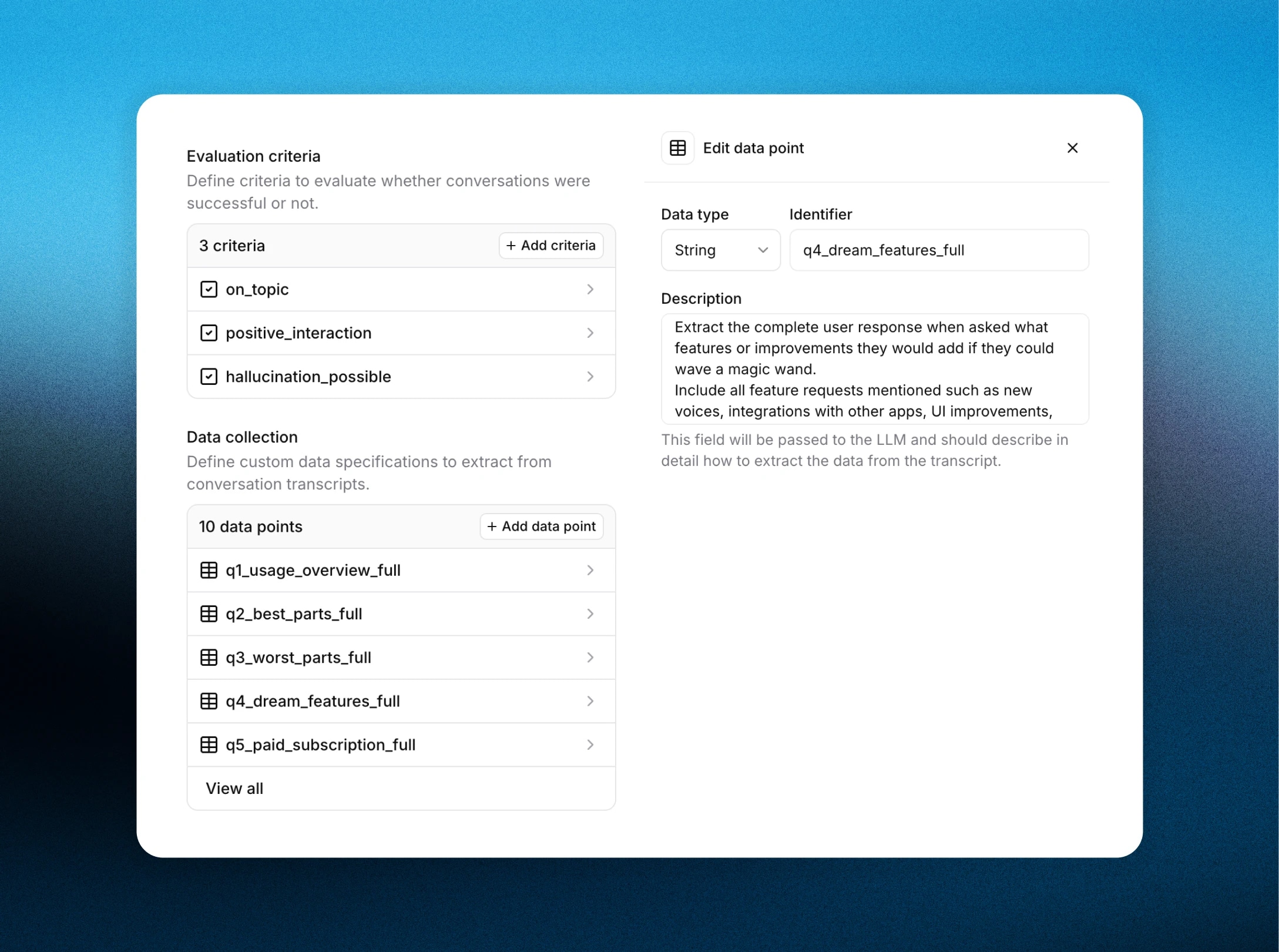

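We evaluated each call with the analysis feature in ElevenLabs Agents. It extracts structured data from transcripts, turning open-ended conversation into concrete insights. For example, it let us automatically tally answers to specific questions across all calls.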
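Once each call has been reduced to structured fields, tallying the answers is straightforward. The sketch below assumes one dictionary of extracted fields per call. The field names (`would_recommend`, `main_use`) and the sample records are made up for illustration and are not the questions or data from this test.

```python
from collections import Counter


def tally(records: list[dict], field_name: str) -> dict[str, float]:
    """Return the share of calls giving each answer for one extracted field.

    Calls where the field is missing or None are skipped.
    """
    answers = [r[field_name] for r in records if r.get(field_name) is not None]
    counts = Counter(answers)
    total = len(answers)
    if total == 0:
        return {}
    return {answer: count / total for answer, count in counts.items()}


# Made-up records, standing in for per-call structured output.
calls = [
    {"would_recommend": "yes", "main_use": "commuting"},
    {"would_recommend": "yes", "main_use": "studying"},
    {"would_recommend": "no", "main_use": None},
    {"main_use": "commuting"},
]
print(tally(calls, "would_recommend"))
```

Skipping missing values keeps the percentages honest when a user never reached a given question before the call ended.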

After 10 minutes, the agent ended the conversation with the end_call tool and politely thanked the user.
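How the 10-minute limit is wired to the end_call tool is not described here, so the following shows only the time check itself, as a sketch. The function name `should_end_call` and the constant are hypothetical.

```python
import time
from typing import Optional

MAX_CALL_SECONDS = 10 * 60  # the 10-minute cap described above


def should_end_call(started_at: float, now: Optional[float] = None) -> bool:
    """Return True once the call has run for at least MAX_CALL_SECONDS.

    Both timestamps are expected to come from the same monotonic clock.
    """
    if now is None:
        now = time.monotonic()
    return now - started_at >= MAX_CALL_SECONDS


start = time.monotonic()
print(should_end_call(start))  # a call that has just started is not over the cap
```

A monotonic clock is used so that system clock adjustments during a call cannot shorten or extend the interview.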

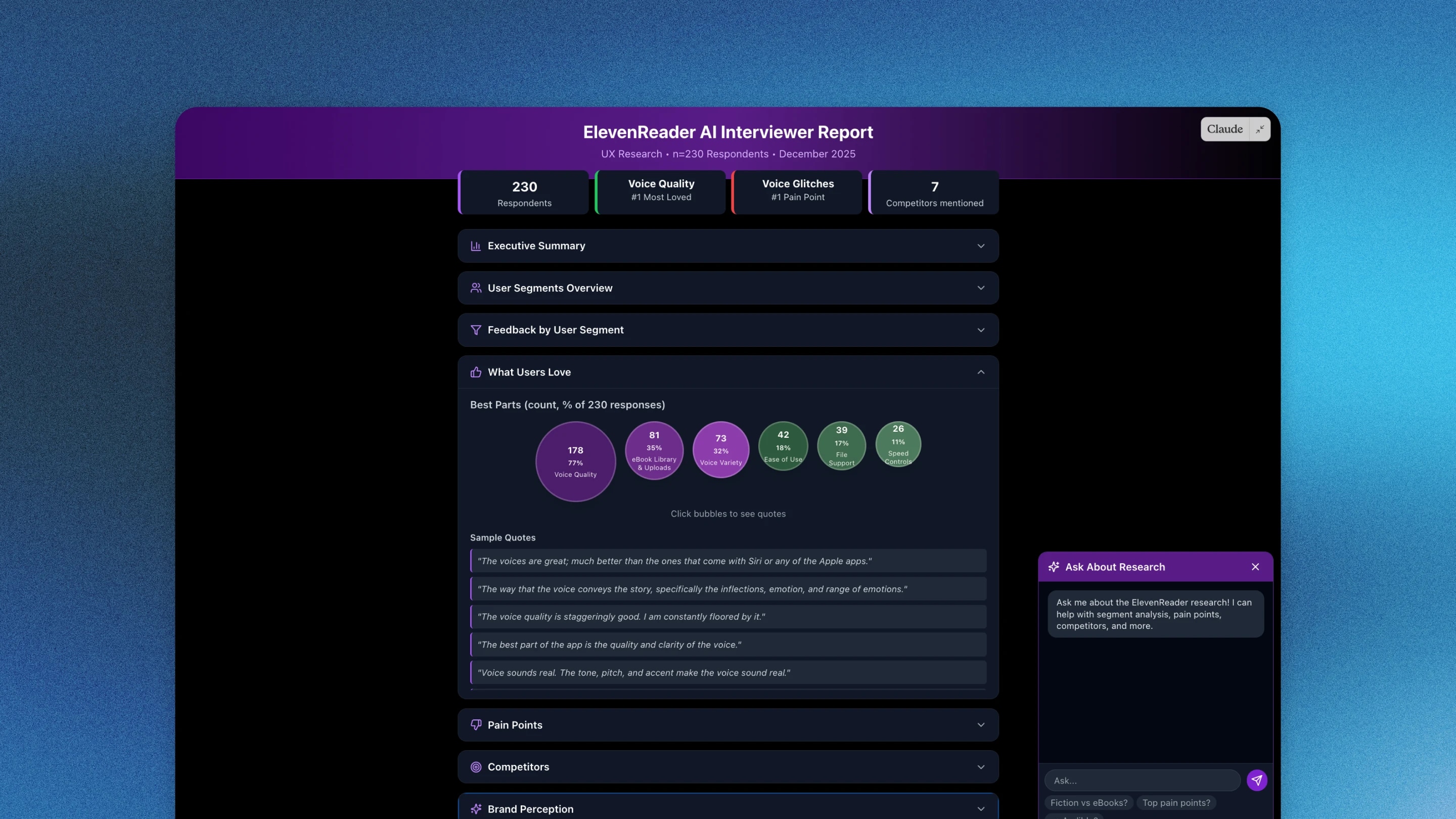

Within 24 hours, we had collected more than 36 hours of conversation data in total.
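As a rough sanity check, the totals imply an average interview length just under the 10-minute cap. Both inputs are reported only as lower bounds ("more than"), so the result is an approximation rather than a measured figure.

```python
total_hours = 36   # lower bound reported for total conversation time
interviews = 230   # lower bound reported for number of interviews

avg_minutes = total_hours * 60 / interviews
print(f"about {avg_minutes:.1f} minutes per interview")  # about 9.4 minutes per interview
```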

Data analysis

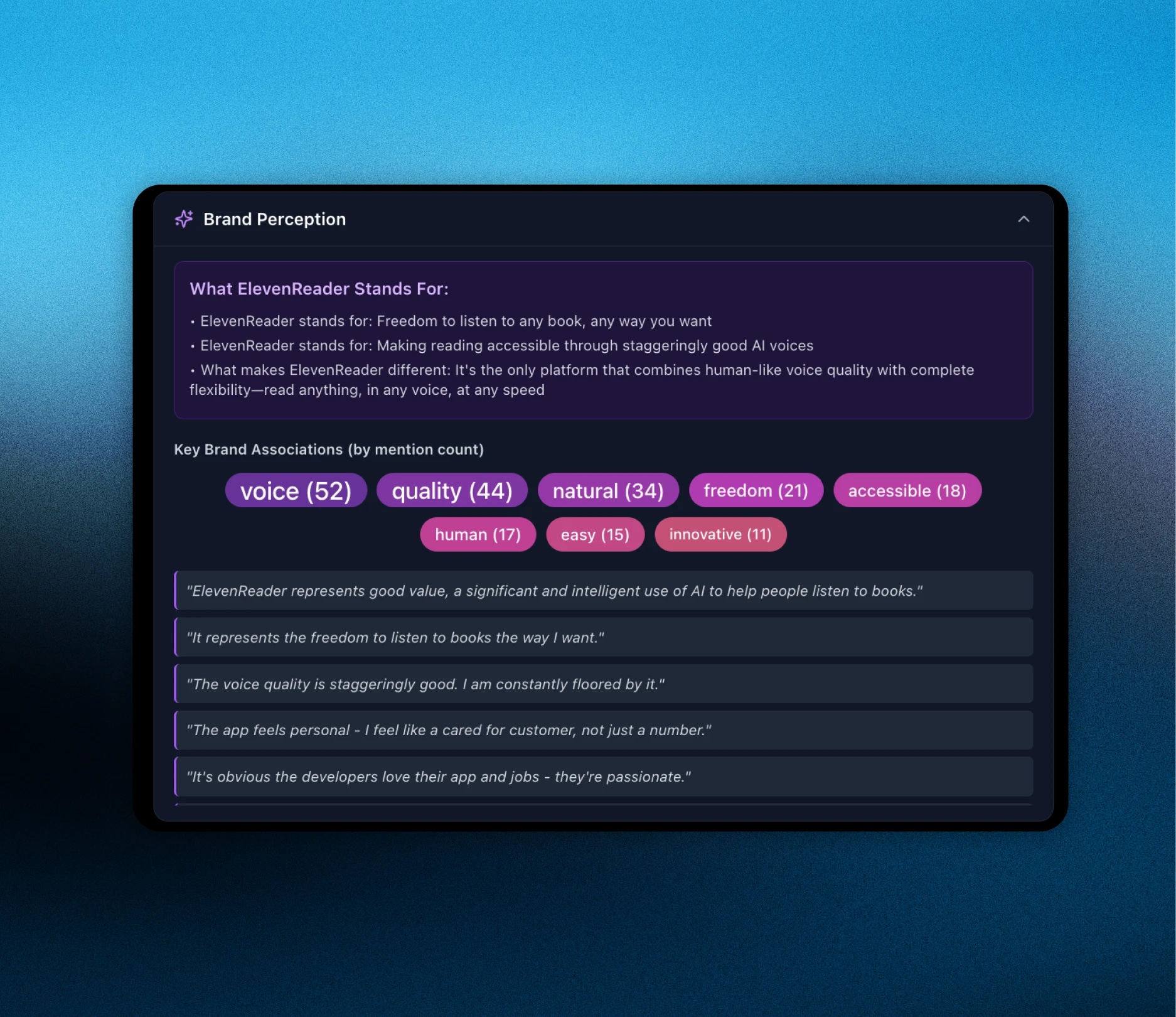

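We used Claude Opus 4.5 to analyze the 36 hours of transcripts from a UX research perspective.

We first used the model to extract broad trends, then used follow-up prompts to surface finer-grained insights such as user segments, feedback on navigation, and price sensitivity by region.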
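The post does not say how the transcripts were fed to the model. One plausible approach, sketched here purely as an assumption, is to pack transcripts into batches under a size budget and ask the same research question of each batch. The function names, the character budget, and the prompt wording are hypothetical, and no model API is called.

```python
def batch_transcripts(transcripts: list[str], max_chars: int) -> list[list[str]]:
    """Greedily pack transcripts into batches whose total length does not exceed max_chars.

    A transcript longer than max_chars is placed in a batch of its own.
    """
    batches: list[list[str]] = []
    current: list[str] = []
    size = 0
    for text in transcripts:
        # Start a new batch when adding this transcript would exceed the budget.
        if current and size + len(text) > max_chars:
            batches.append(current)
            current, size = [], 0
        current.append(text)
        size += len(text)
    if current:
        batches.append(current)
    return batches


def build_prompt(batch: list[str], question: str) -> str:
    """Join one batch into a single analysis prompt for an LLM."""
    joined = "\n\n---\n\n".join(batch)
    return f"You are a UX researcher. {question}\n\nTranscripts:\n\n{joined}"


batches = batch_transcripts(["first call ...", "second call ..."], max_chars=20)
prompts = [build_prompt(b, "What broad themes appear?") for b in batches]
print(len(prompts))
```

The same batches can then be reused with narrower questions, for example about navigation or regional price sensitivity, mirroring the two-pass analysis described above.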

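Key findings

Users were comfortable talking with AI: nearly 95% interacted with the interviewer without dwelling on the fact that it was a conversational agent. One user said:

"This customer service interview is the most impressive AI experience I have ever had. I wish every survey were like this, and every customer service interaction too."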

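Looking ahead

Conversational formats will become the norm for user research. With AI voice agents, you can talk with users anywhere in the world at any time and hear what they think in their own words.

This test showed that AI agents can run interviews at scale in a practical and reliable way. Combined with LLM-based text analysis, they let us find patterns across hundreds of responses and reach insights that would be hard to obtain manually. You can build a similar AI interviewer with ElevenLabs Agents. Get started today, or contact us for more details.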
