Eleven v3 Audio Tags: Giving AI Audio Situational Awareness

Enhance AI voices with Eleven v3 Audio Tags. Control tone, emotion, and pacing for natural conversation, and add situational awareness to your text to speech.

With ElevenLabs Audio Tags, you can control the emotion, delivery, and sound effects of AI voices.

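With the release of Eleven v3, audio prompting has become an essential skill. Instead of only typing the words you want the AI voice to say, you can now use a new capability, Audio Tags, to control everything from emotion to delivery.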

Eleven v3 is a new model released as an alpha research preview. It requires more prompt engineering than earlier models, but the results are striking.

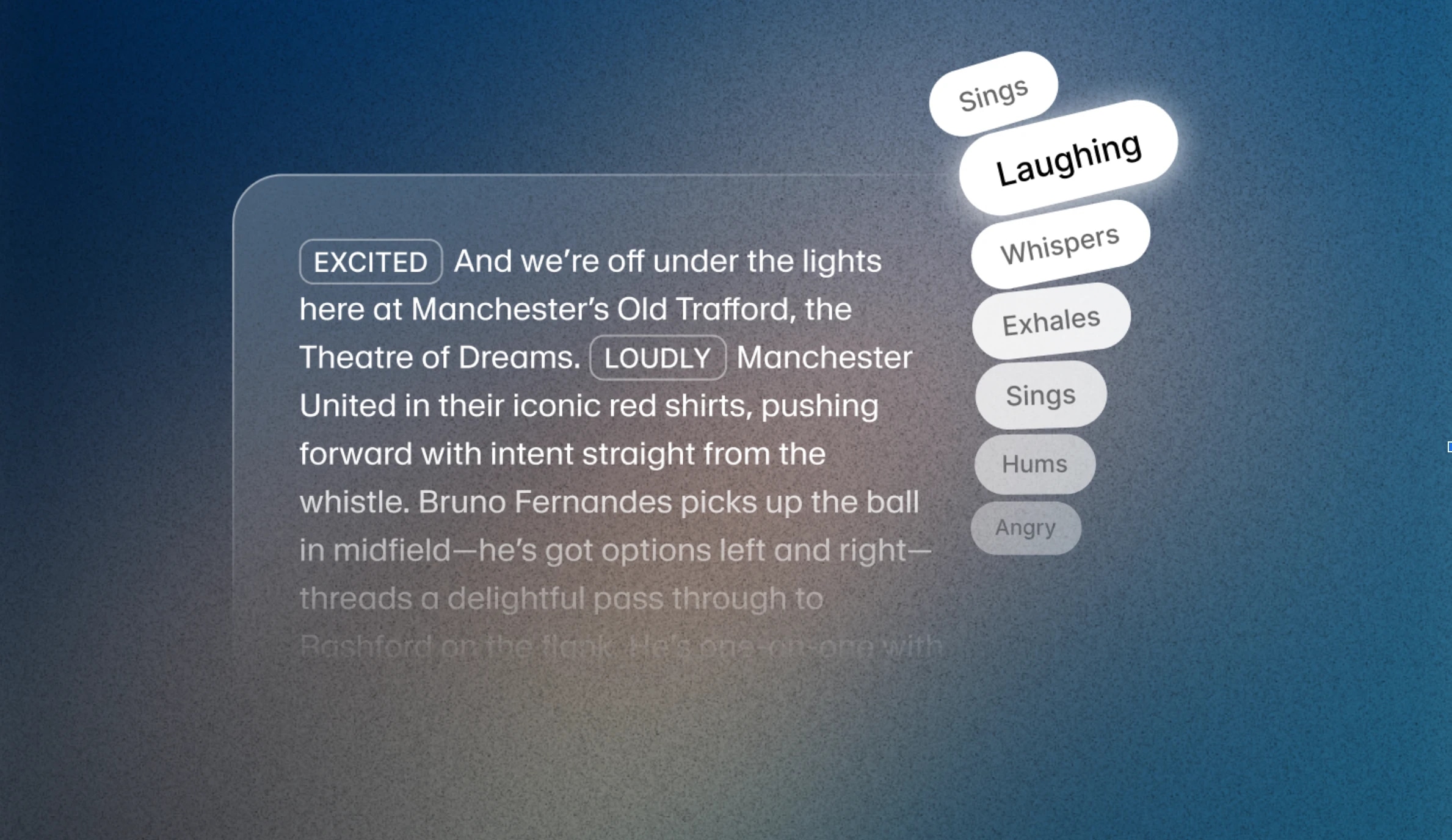

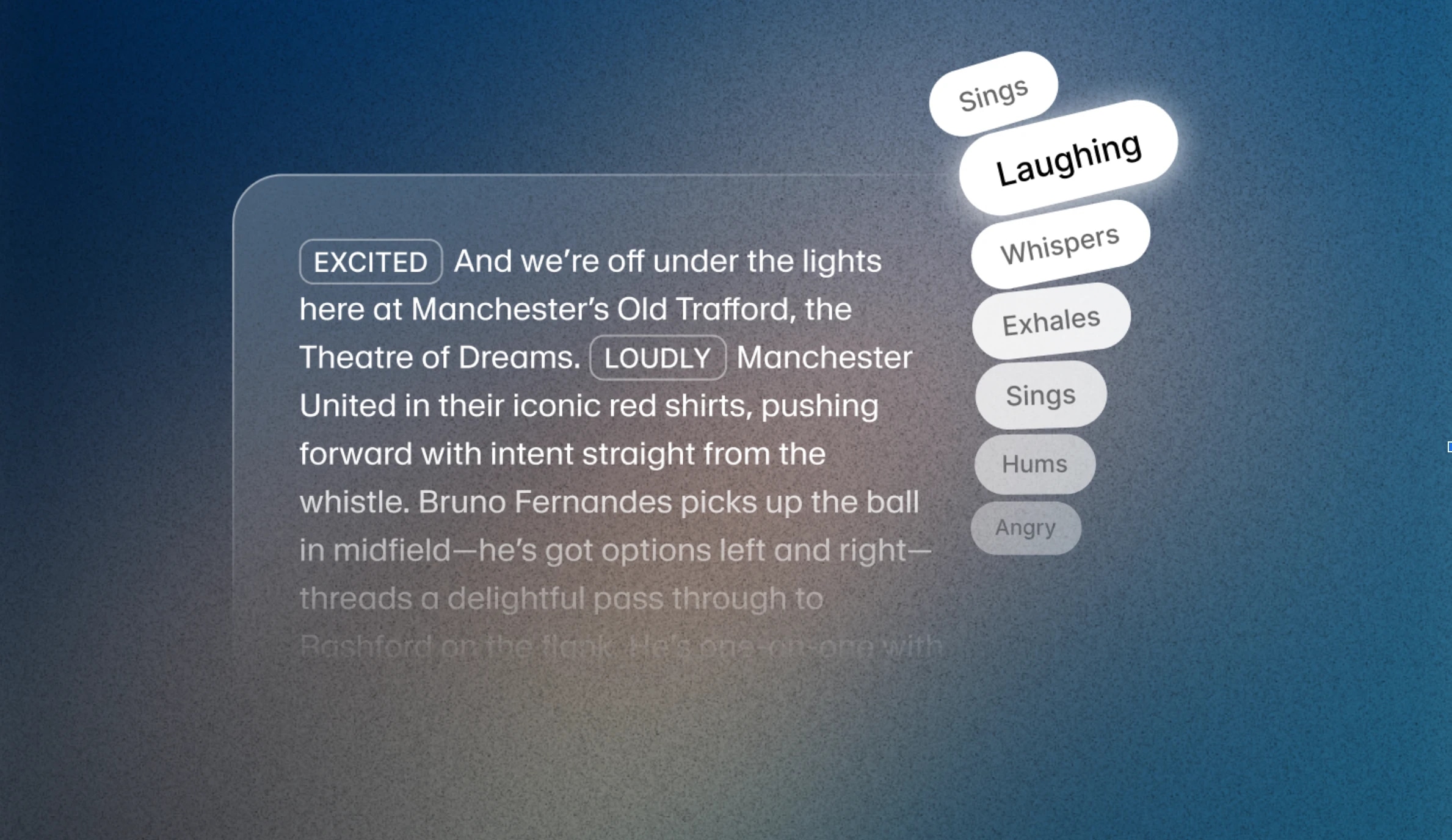

ElevenLabs Audio Tags are words wrapped in square brackets that the new Eleven v3 model interprets as directions for vocal performance. They range from [excited], [whispers], and [sighs] to [gunshot], [clapping], and [explosion].
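Because tags are just bracketed words inside the script text, they are easy to inspect before sending a script for generation. The sketch below is a minimal illustration, not part of any ElevenLabs library: the helper names `extract_tags` and `strip_tags` are hypothetical, and the pattern assumes tags never nest.

```python
import re

# Matches a bracketed cue such as [whispers] or [clears throat].
# Assumption: tags do not contain nested brackets.
TAG_PATTERN = re.compile(r"\[([^\[\]]+)\]")


def extract_tags(script: str) -> list[str]:
    """Return the audio tags found in a script, in order of appearance."""
    return [match.group(1).strip() for match in TAG_PATTERN.finditer(script)]


def strip_tags(script: str) -> str:
    """Return only the spoken words, with tags removed and spacing normalized."""
    return " ".join(TAG_PATTERN.sub(" ", script).split())


script = "[whispers] I think someone is here. [sighs] Never mind."
tags = extract_tags(script)
spoken = strip_tags(script)
```

Here `tags` holds the cues in the order they occur and `spoken` holds the plain transcript, which can be handy for captions or word counts.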

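Audio Tags let you shape non-verbal nuance in AI voices, such as tone, pauses, and delivery. Whether you are building audiobooks, interactive characters, or dialogue-driven media, they are a simple script-level tool for fine control over emotion and expression.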

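You can place Audio Tags anywhere in a script to shape delivery at that moment. You can combine tags, and you can use several within a single sentence. Tags fall mainly into the following categories: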

Emotion tags set the emotional tone of the voice. For example, [sad], [angry], [happily], and [sorrowful] can be used alone or in combination.

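Delivery tags adjust tone and performance. They change volume and energy to suit moments that call for restraint or emphasis. Examples include [whispers], [shouts], and [x accent], with x replaced by the accent you want.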

Natural conversation depends on reactions. Insert tags such as [laughs], [clears throat], and [sighs] to create realistic, spontaneous moments.
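The three categories above can be captured in a small lookup table, which is useful when linting scripts or building a tag picker. This is a sketch under stated assumptions: the category names (`emotion`, `delivery`, `reaction`) and the `categorize` helper are hypothetical labels chosen for illustration, and the table lists only the example tags named in this article, not every tag the model accepts.

```python
# Example tags taken from the article, grouped by the category they appear under.
# The category names are illustrative labels, not official identifiers.
TAG_CATEGORIES = {
    "emotion": {"sad", "angry", "happily", "sorrowful"},
    "delivery": {"whispers", "shouts"},
    "reaction": {"laughs", "clears throat", "sighs"},
}


def categorize(tag: str) -> str:
    """Map a tag such as "[sad]" to a category name, or "unknown"."""
    name = tag.strip("[] ").lower()
    # "[x accent]" is a pattern rather than a fixed tag, so match it by suffix.
    if name.endswith(" accent"):
        return "delivery"
    for category, names in TAG_CATEGORIES.items():
        if name in names:
            return category
    return "unknown"


examples = {tag: categorize(tag) for tag in ["[sad]", "[French accent]", "[sighs]"]}
```

A tag that is not in the table returns `"unknown"` rather than raising, since the model may understand many tags beyond the examples listed here.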

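Behind these features is v3's new architecture. Because the model understands the context of the text more deeply, it can render emotional shifts, tone changes, and speaker transitions naturally. Combined with Audio Tags, this delivers expressiveness that was difficult to achieve with earlier TTS.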

You can also create natural multi-speaker dialogue. Interruptions, mood shifts, and conversational nuance can be expressed with minimal prompting.
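One way to organize a multi-speaker script is as a list of turns, each with its own voice and tags. The sketch below only assembles data and makes no network calls. The `Turn` class, the `build_dialogue` helper, the `voice_id`/`text` field names, and the placeholder voice IDs are all assumptions for illustration; check the current API documentation for the exact input shape a dialogue request expects.

```python
from dataclasses import dataclass, field


@dataclass
class Turn:
    """One line of dialogue: who speaks, what they say, and how."""
    voice_id: str                       # placeholder ID, not a real voice
    text: str
    tags: list[str] = field(default_factory=list)

    def render(self) -> str:
        """Prefix the spoken text with its tags, e.g. "[whispers] Hello"."""
        prefix = " ".join(f"[{tag}]" for tag in self.tags)
        return f"{prefix} {self.text}".strip()


def build_dialogue(turns: list[Turn]) -> list[dict[str, str]]:
    """Convert turns into a list of {voice_id, text} entries (assumed shape)."""
    return [{"voice_id": turn.voice_id, "text": turn.render()} for turn in turns]


dialogue = build_dialogue([
    Turn("VOICE_A", "Did you hear that?", ["whispers"]),
    Turn("VOICE_B", "Relax, it's just the wind.", ["laughs"]),
    Turn("VOICE_A", "I hope you're right."),
])
```

Keeping tags as a separate field makes it easy to change the mood of one turn without touching the spoken text.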

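Professional Voice Clones (PVCs) are not yet optimized for Eleven v3, so clone quality may be lower than with earlier models. If you want to use v3 features during the research preview, we recommend an Instant Voice Clone (IVC) or a designed voice. PVC optimization for v3 is planned.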

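Eleven v3 is available in the ElevenLabs UI at 80% off until the end of June. A public API for Eleven v3 (alpha) is also available. Whether you are experimenting or planning a large-scale deployment, now is the time to explore what is possible.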
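For readers who want to try the API, the sketch below builds (but does not send) a text-to-speech request using only the Python standard library. The endpoint path, the `xi-api-key` header, and the `eleven_v3` model ID reflect the public REST API as commonly documented, but treat them as assumptions and confirm them against the current API reference. `build_tts_request`, `YOUR_API_KEY`, and `YOUR_VOICE_ID` are placeholders introduced for illustration.

```python
import json
import urllib.request

# Assumed base URL of the public REST API; verify against the API reference.
API_BASE = "https://api.elevenlabs.io/v1"


def build_tts_request(api_key: str, voice_id: str, text: str,
                      model_id: str = "eleven_v3") -> urllib.request.Request:
    """Build a POST request for speech generation without sending it."""
    body = json.dumps({"text": text, "model_id": model_id}).encode("utf-8")
    return urllib.request.Request(
        url=f"{API_BASE}/text-to-speech/{voice_id}",
        data=body,
        headers={"xi-api-key": api_key, "Content-Type": "application/json"},
        method="POST",
    )


request = build_tts_request(
    "YOUR_API_KEY",
    "YOUR_VOICE_ID",
    "[excited] We did it! [laughs] I can't believe it worked.",
)
# To send it, pass the request to urllib.request.urlopen(request)
# and write the response bytes to an audio file.
```

Audio Tags travel inside the `text` field like any other characters, so no extra parameters are needed to use them.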

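Getting AI voices to perform rather than just read comes down to mastering Audio Tags. We have put together seven guides that explain how to use them. Tags such as [whispers], [laughs softly], and [French accent] let a single model express context, emotion, delivery, and even multi-character dialogue.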

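Tags such as [whispers], [shouts], and [sighs] let the voice respond to the moment: building tension, softening a warning, or pausing for suspense.

From [pirate voice] to [French accent], tags turn narration into role-play. You can switch characters mid-line and direct a full performance without changing models.

Cues such as [sighs], [excited], and [tired] express the emotion of each moment, layering tension, relief, and humor. No re-recording is needed.

Tags such as [pause], [awe], and [dramatic tone] adjust rhythm and emphasis so the AI voice guides the listener through the story.

With [interrupting], [overlapping], and tone switches, you can write overlapping lines and quick back-and-forth exchanges. One model handles many voices and produces natural conversation.

Tags such as [pause], [rushed], and [drawn out] give you control over tempo and turn text into performance.

Tags such as [American accent], [British accent], and [Southern US accent] convey a range of cultural nuance without switching models.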
