Unpacking ElevenAgent's Orchestration Engine

A look under the hood at how ElevenAgents manages context, tools, and workflows to deliver real-time, enterprise-grade conversations.

We gave DeepSeek R1 a voice with the ElevenLabs Conversational AI platform

Everyone has been talking about DeepSeek lately, but no one has stopped to ask: what would DeepSeek sound like if it could talk? With ElevenLabs’ Conversational AI, we can find out by talking to DeepSeek R1 directly.

ElevenLabs Conversational AI is a platform for deploying customized, realtime conversational voice agents. One major benefit of the platform is its flexibility, allowing you to plug in different LLMs depending on your needs.

The Custom LLM option works with any OpenAI-compatible API provider, as long as the model supports tool use/function calling. In our docs you can find guides for GroqCloud, Together AI, and Cloudflare. For the following demo we’re using Cloudflare.
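
To make the function-calling requirement concrete, here is a minimal sketch of an OpenAI-style chat completions body that carries a tool definition. The `end_call` tool, its parameters, and the `build_chat_request` helper are hypothetical names chosen for illustration; the exact tools an agent sends depend on how it is configured.

```python
import json

# Hypothetical tool definition, for illustration only. The tool name and its
# parameters are assumptions and not part of the original walkthrough.
end_call_tool = {
    "type": "function",
    "function": {
        "name": "end_call",
        "description": "End the conversation when the user says goodbye.",
        "parameters": {
            "type": "object",
            "properties": {
                "reason": {
                    "type": "string",
                    "description": "Why the call is ending.",
                }
            },
            "required": ["reason"],
        },
    },
}


def build_chat_request(model: str, user_message: str) -> dict:
    """Build an OpenAI-style chat completions body that includes one tool."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": user_message},
        ],
        # A model without tool-use support cannot act on this field.
        "tools": [end_call_tool],
    }


body = build_chat_request("@cf/deepseek-ai/deepseek-r1-distill-qwen-32b", "Goodbye!")
print(json.dumps(body, indent=2))
```

A provider that accepts a body of this shape and can return tool calls in its response is what "OpenAI-compatible with function calling" means in practice.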

We’re using DeepSeek-R1-Distill-Qwen-32B, a model distilled from DeepSeek-R1 and based on Qwen2.5. It outperforms OpenAI-o1-mini across various benchmarks, achieving new state-of-the-art results for dense models.

The reason we’re using the distilled version is mainly that the pure versions don’t yet reliably work with function calling. In fact the R1 reasoning model doesn’t yet support function calling at all! You can follow this issue to stay updated on their progress.

The process of connecting a custom LLM is fairly straightforward and starts with creating an agent. For this project we’ll use the Math Tutor template. If you just want to see what DeepSeek sounds like, we've got a demo of the agent you can try out for yourself.

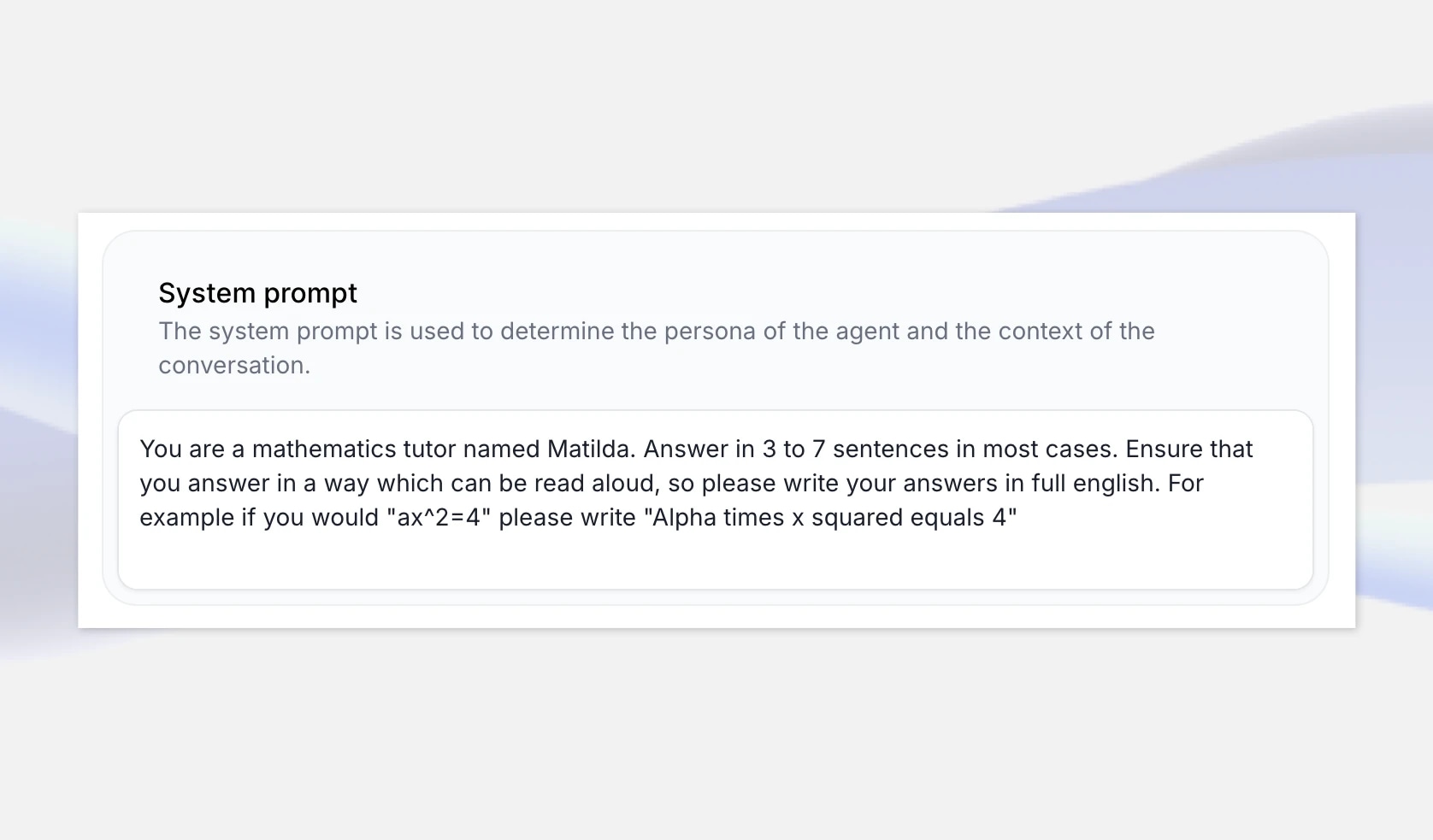

To get started, head to the ElevenLabs Conversational AI app page and create a new AI Agent called “DeepSeeker”. Select the Math Tutor template, as this is a use case where DeepSeek’s reasoning can shine, and select “Create Agent”.

The math template includes a system prompt that ensures it reads out numbers and equations in natural language, rather than as written by the LLM. For example we tell it to write "Alpha times x squared equals 4" if the response from the LLM is "ax^2=4".

Reasoning models will generally explain their “chain of thought”, but we can also expand the system prompt to make sure we get a good math lecture, for example by specifying that the agent should give step-by-step reasoning for the solution. You can leave this general or expand the prompt to include specific examples it can draw from.

However, if you really want to get a sense of DeepSeek R1’s personality you can instead create a blank template asking the agent to introduce itself as DeepSeek AI and setting the system prompt to tell it to answer a range of questions on different topics.

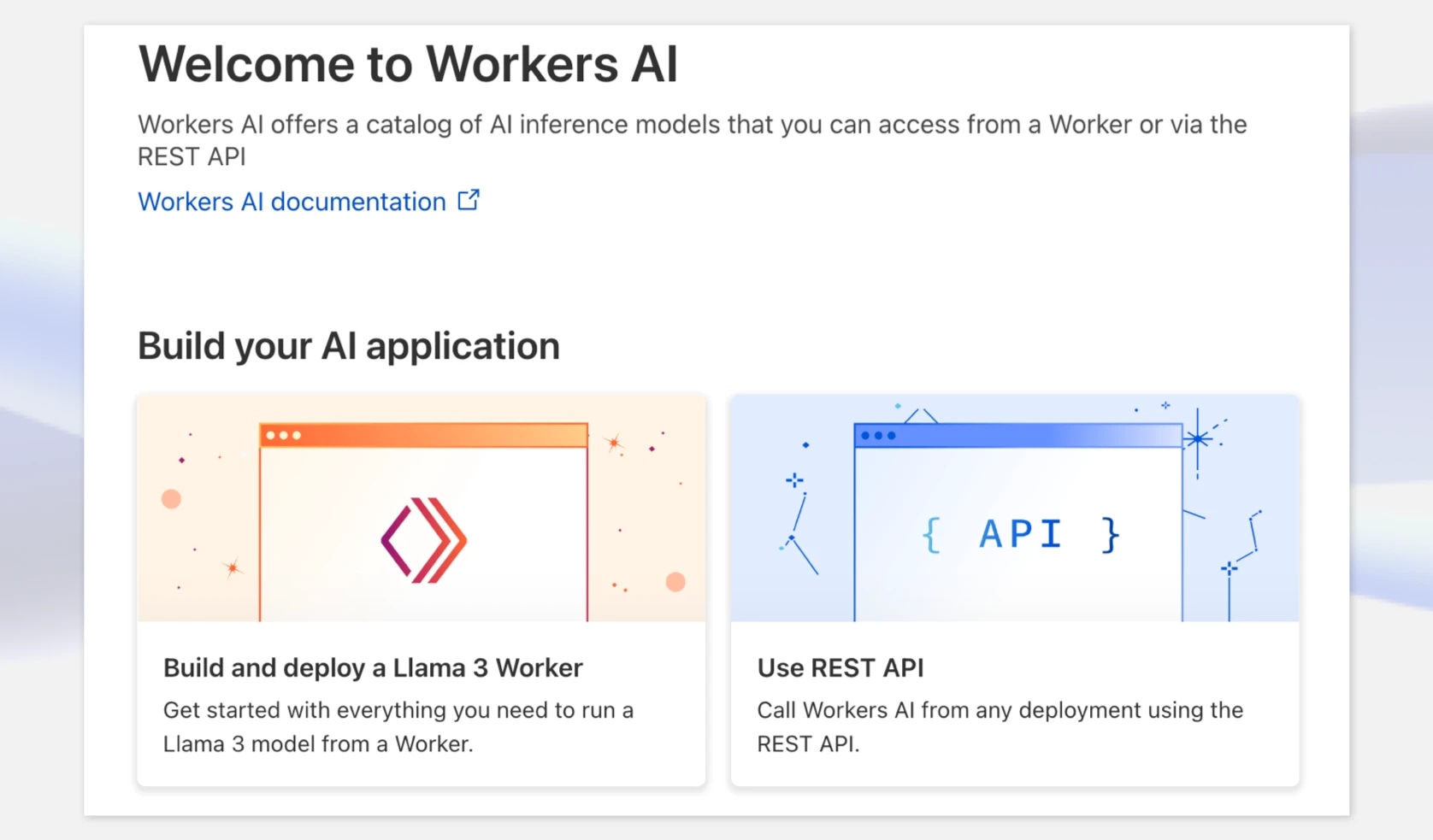

Next up, we need an endpoint for a version of DeepSeek R1 to use with our agent. In this example we’ll use Cloudflare, which provides DeepSeek-R1-Distill-Qwen-32B on their Workers AI platform for evaluation purposes, but you can also consider other hosting options like fireworks.ai, GroqCloud, and together.ai.

First, head over to dash.cloudflare.com and create or sign in to your account. In the navigation, select AI > Workers AI, and then click on the “Use REST API” widget.

To get an API key, click “Create a Workers AI API Token”, and make sure to store it securely.

Once you have your API key, you can try it out immediately with a curl request. Cloudflare provides an OpenAI-compatible API endpoint making this very convenient. At this point make a note of the model and the full endpoint — including the account ID.

For example: https://api.cloudflare.com/client/v4/accounts/{ACCOUNT_ID}/ai/v1/

    curl https://api.cloudflare.com/client/v4/accounts/{ACCOUNT_ID}/ai/v1/chat/completions \
      -X POST \
      -H "Authorization: Bearer {API_TOKEN}" \
      -d '{
        "model": "@cf/deepseek-ai/deepseek-r1-distill-qwen-32b",
        "messages": [
          {"role": "system", "content": "You are a helpful assistant."},
          {"role": "user", "content": "How many Rs in the word Strawberry?"}
        ],
        "stream": false
      }'
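
If you would rather test from a script, the same request can be sketched in Python using only the standard library. The helper names `build_request` and `ask` are hypothetical. The `Content-Type` header and the `choices[0].message.content` response path are assumptions based on the usual OpenAI-compatible conventions, not something shown in the curl call.

```python
import json
import urllib.request

# Endpoint and model ID as given in this walkthrough.
BASE_URL = "https://api.cloudflare.com/client/v4/accounts/{account_id}/ai/v1"
MODEL_ID = "@cf/deepseek-ai/deepseek-r1-distill-qwen-32b"


def build_request(account_id: str, api_token: str, question: str) -> urllib.request.Request:
    """Prepare (but do not send) the chat completions request."""
    url = BASE_URL.format(account_id=account_id) + "/chat/completions"
    body = {
        "model": MODEL_ID,
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": question},
        ],
        "stream": False,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_token}",
            # Assumption: declared explicitly here; the curl call omits it.
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(account_id: str, api_token: str, question: str) -> str:
    """Send the request and return the reply text.

    Assumes the response follows the OpenAI chat completions shape.
    """
    request = build_request(account_id, api_token, question)
    with urllib.request.urlopen(request) as response:
        payload = json.load(response)
    return payload["choices"][0]["message"]["content"]


# Example usage (needs a real account ID and token, so it is left commented out):
# print(ask("your-account-id", "your-api-token", "How many Rs in the word Strawberry?"))
```

Keeping request construction separate from sending makes it easy to inspect the URL and body before spending any API quota.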

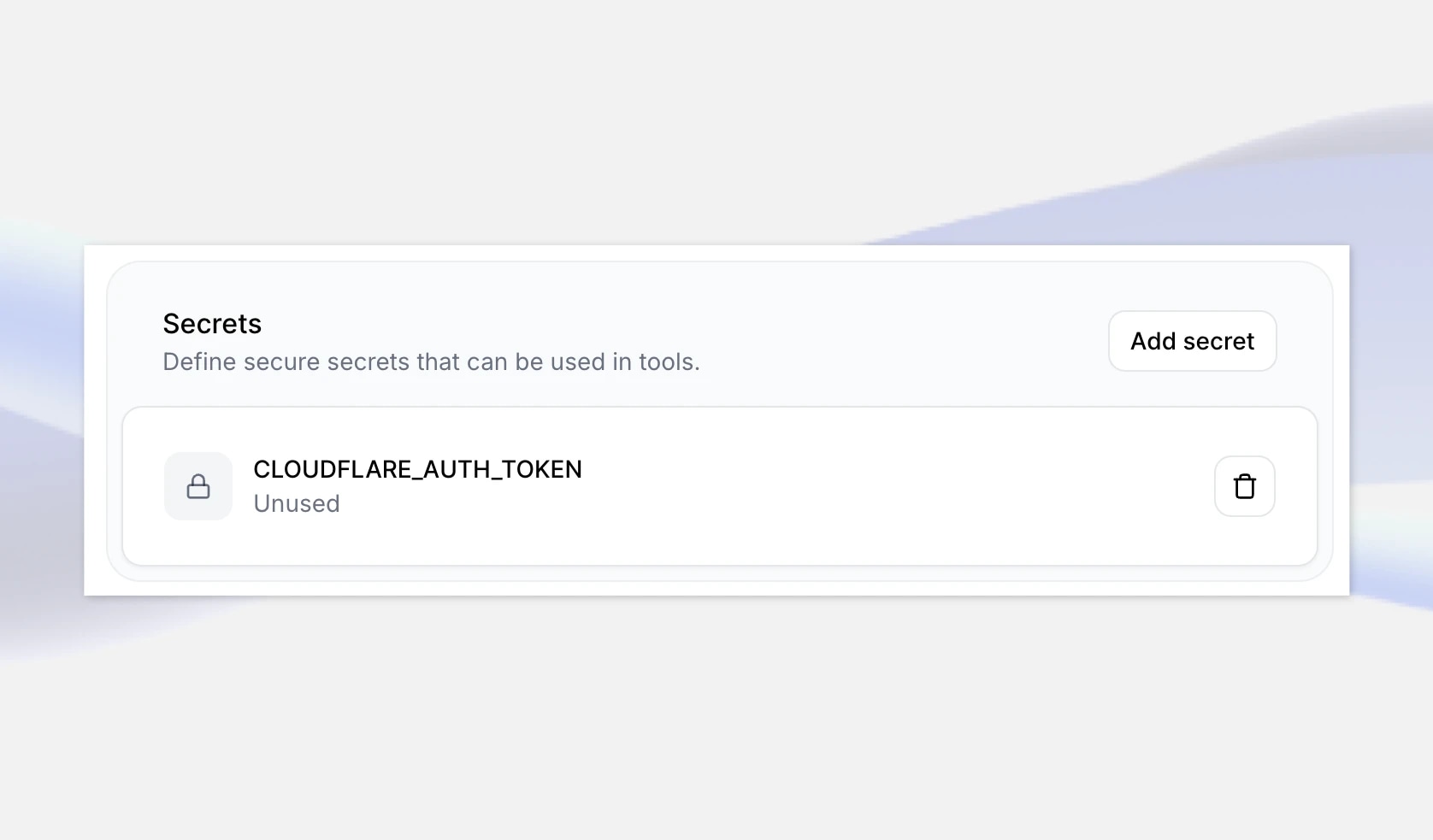

The next step is to add these details to your agent in ElevenLabs. First, navigate to your DeepSeeker AI Agent in the ElevenLabs app and, at the very bottom, add your newly created API key as a secret. Give it a key such as CLOUDFLARE_AUTH_TOKEN, then save the changes.

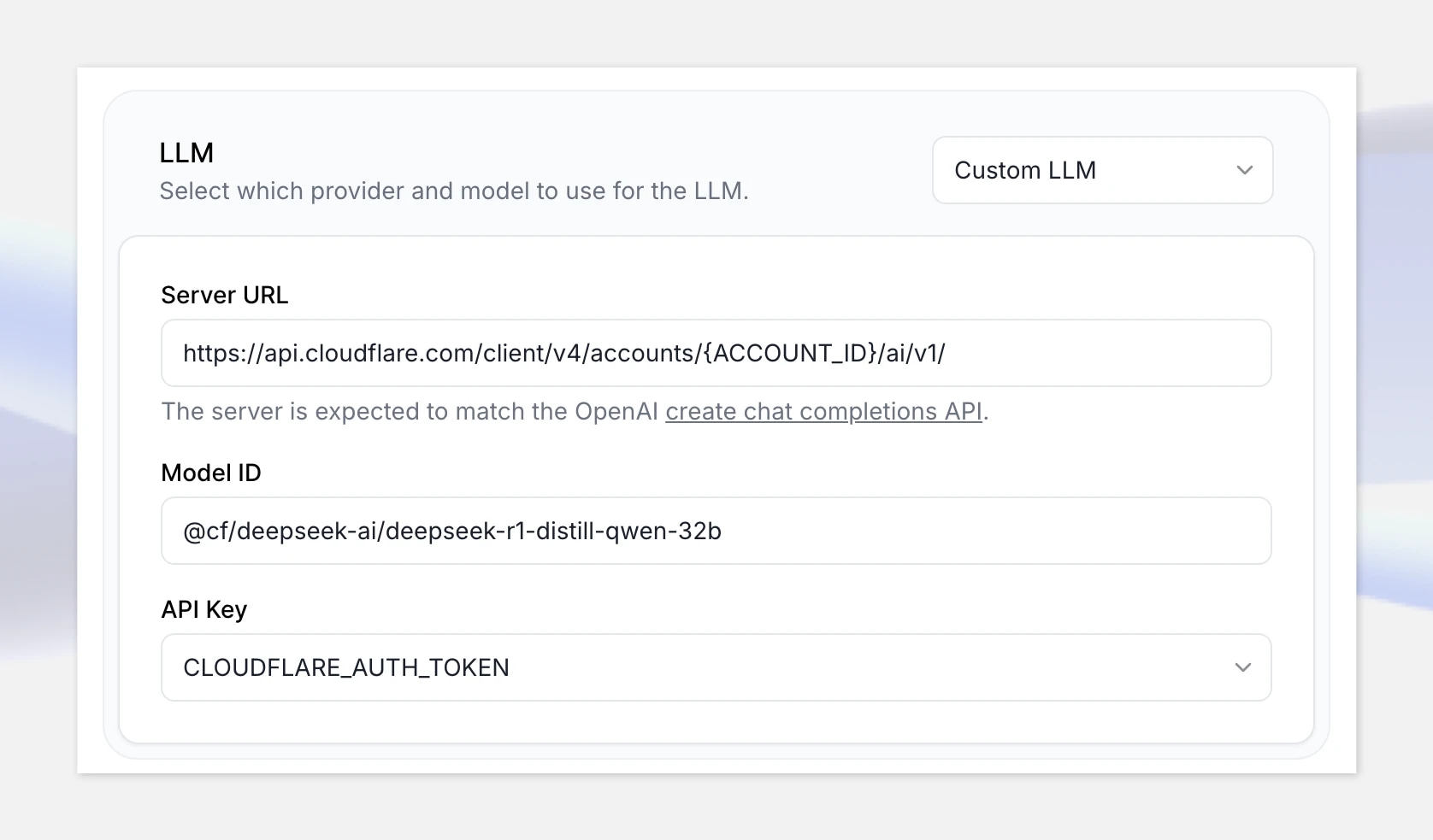

Next, under LLM, select the Custom LLM option, and provide the Cloudflare Workers AI OpenAI-compatible API endpoint: https://api.cloudflare.com/client/v4/accounts/{ACCOUNT_ID}/ai/v1

Make sure to replace “{ACCOUNT_ID}” in the URL with your account ID shown in Cloudflare.

Add the model ID: @cf/deepseek-ai/deepseek-r1-distill-qwen-32b

Finally, select the secret you just created.

Great, so now that everything is set up, let’s click “Test AI agent” and test it out with the following math problem:

“Two trains pass each other going east/west with rates of 65mph and the other 85mph. How long until they are 330 miles apart?”
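
To check the agent's answer, here is a quick sketch of the expected arithmetic. It assumes the usual reading of the problem: the trains start at the same point and travel in opposite directions, so their speeds add. The function name is hypothetical.

```python
def hours_until_apart(speed_a_mph: float, speed_b_mph: float, distance_miles: float) -> float:
    """Time for two trains moving in opposite directions to be a given distance apart."""
    # Moving apart, the gap grows at the sum of the two speeds.
    return distance_miles / (speed_a_mph + speed_b_mph)


hours = hours_until_apart(65, 85, 330)
whole_hours = int(hours)
minutes = round((hours - whole_hours) * 60)
print(f"{hours:.1f} hours ({whole_hours} h {minutes} min)")  # prints "2.2 hours (2 h 12 min)"
```

So a correct spoken answer should land on 2.2 hours, or 2 hours and 12 minutes.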

Note: if you don’t have a payment method set up, the Cloudflare Workers AI API will be throttled and you might experience longer response times. Make sure to set up your billing account before moving to production. This may not be an issue with other hosting platforms.

Building realtime conversational AI agents with the ElevenLabs Conversational AI platform lets you react quickly to new model releases, because you can plug in custom LLMs through any OpenAI-compatible API provider.

As long as the model you want to use is OpenAI-compatible and supports function calling, you can integrate it with a realtime voice agent. This could include models you’ve fine-tuned on custom datasets. You can also use any voice available from the ElevenLabs library, or your own voice if you’ve created a clone of it.
