Groq Cloud

Overview

Groq Cloud provides easy access to fast AI inference, giving you OpenAI-compatible API endpoints in a matter of clicks.

Use leading openly available models like Llama, Mixtral, and Gemma as the brain for your ElevenLabs agents in a few easy steps.
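Because the endpoints are OpenAI-compatible, a request to Groq Cloud has the same shape as an OpenAI chat completion request. The following is a minimal sketch, not an official client: the base URL is an assumption that you should confirm in your Groq Cloud console, and `build_chat_request` is a hypothetical helper name. It only builds the request and does not send it.

```python
import json
import urllib.request

# Assumption: Groq's OpenAI-compatible base URL. Confirm it in the Groq Cloud console.
GROQ_BASE_URL = "https://api.groq.com/openai/v1"


def build_chat_request(api_key: str, model: str, user_message: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        url=f"{GROQ_BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            # OpenAI-compatible APIs expect the API key as a bearer token.
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder key for illustration only; never hard-code a real key.
request = build_chat_request("YOUR_GROQ_API_KEY", "llama-3.3-70b-versatile", "Say hello.")
print(request.get_method(), request.full_url)
```

Passing the resulting object to `urllib.request.urlopen` would send it. Any OpenAI-compatible client pointed at the same base URL should work equally well.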

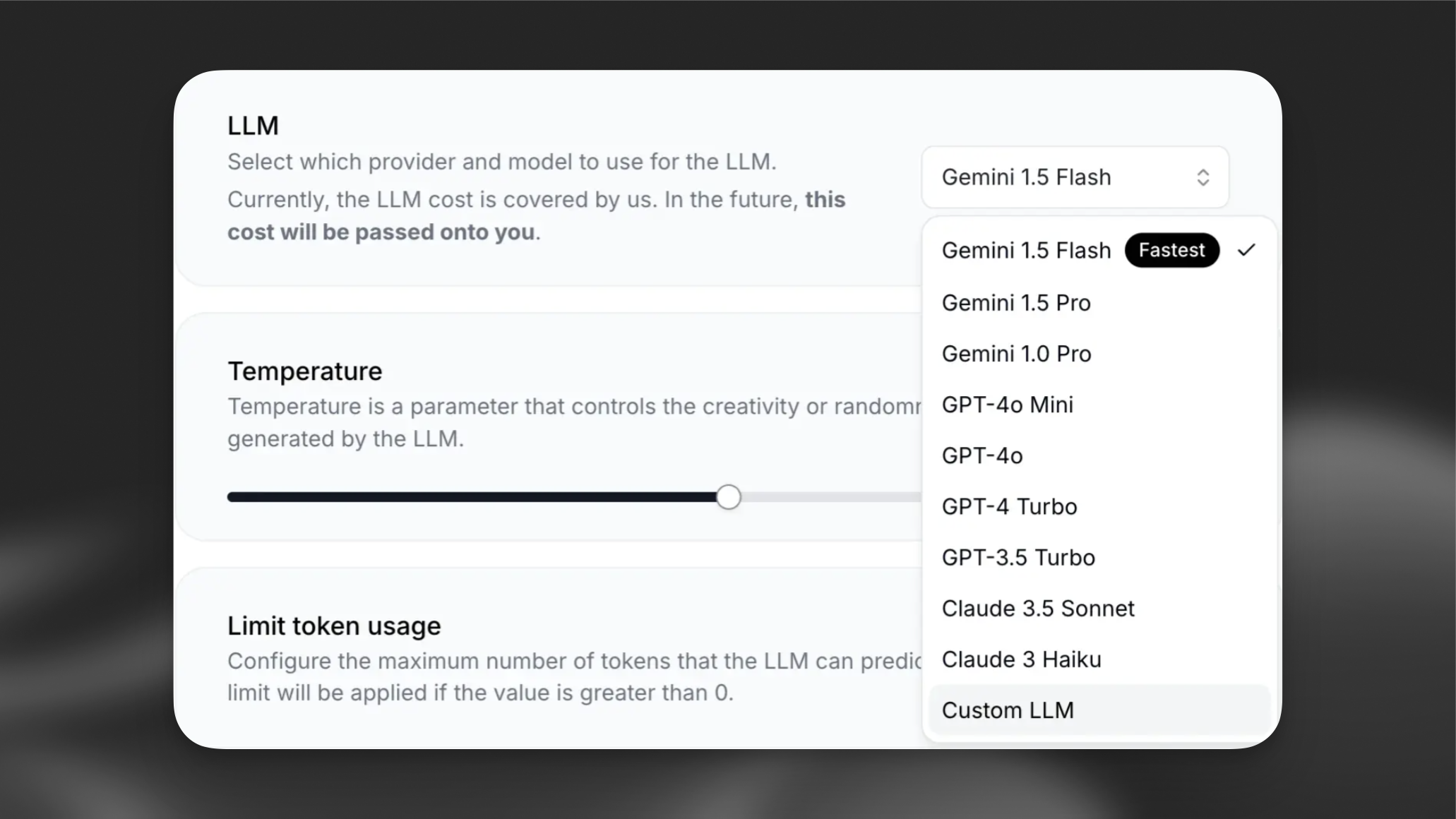

Choosing a model

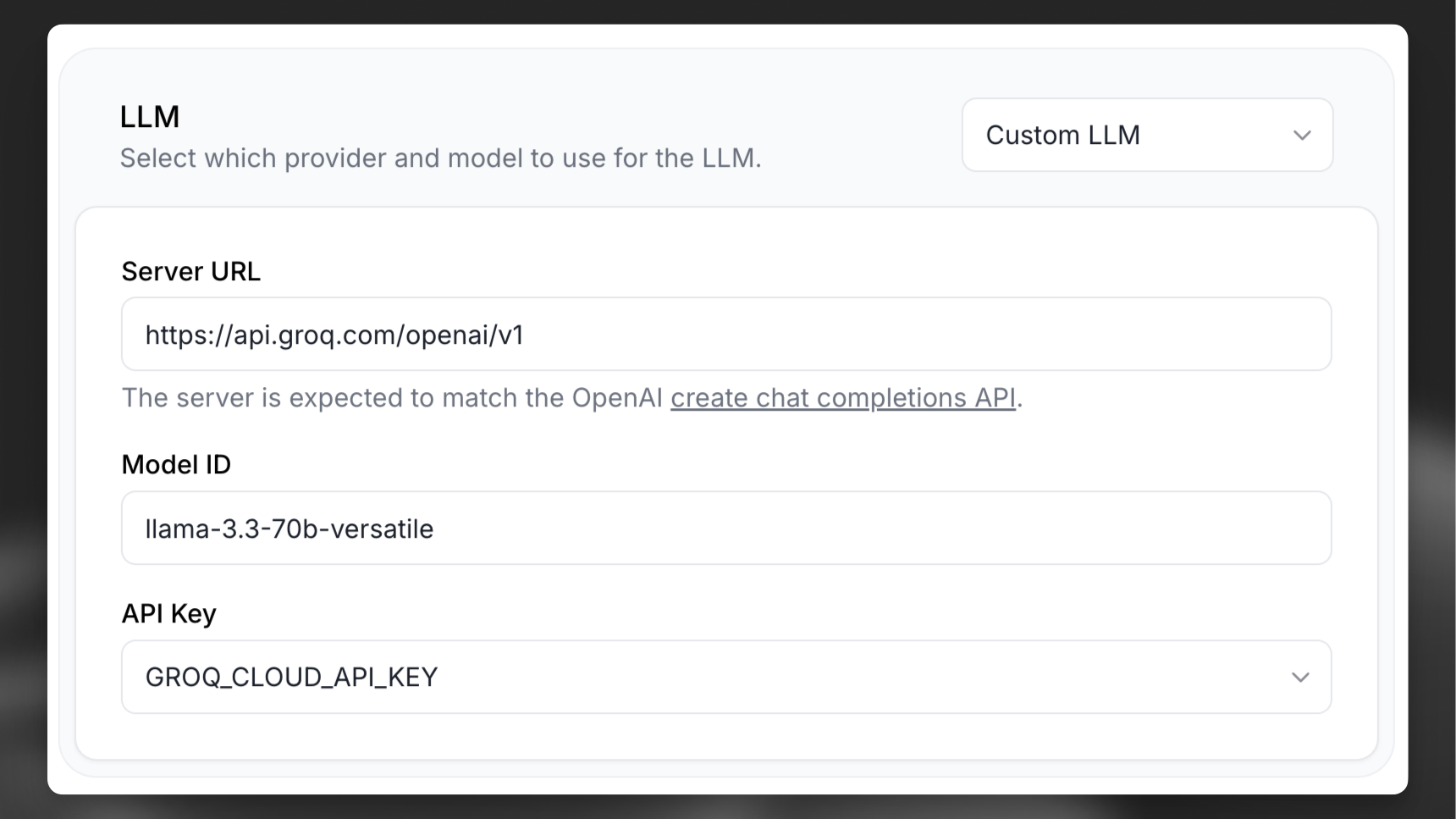

To make use of the full power of ElevenLabs agents, you need a model that supports tool use and structured outputs. Groq recommends the following Llama models for their versatility and performance:

- meta-llama/llama-4-scout-17b-16e-instruct (10M token context window), with support for 12 languages (Arabic, English, French, German, Hindi, Indonesian, Italian, Portuguese, Spanish, Tagalog, Thai, and Vietnamese)

- llama-3.3-70b-versatile (128k context window | 32,768 max output tokens)

- llama-3.1-8b-instant (128k context window | 8,192 max output tokens)

With this in mind, it’s recommended to use meta-llama/llama-4-scout-17b-16e-instruct for your ElevenLabs agent.
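To illustrate what tool use means at the API level, here is a minimal sketch of a chat completion payload that offers the model one tool, written in the OpenAI-style `tools` format. The tool `get_order_status`, its parameters, and the helper `build_tool_call_payload` are hypothetical and exist only for illustration; the model IDs are the ones listed above.

```python
import json

# Model IDs copied from the list above.
RECOMMENDED_MODELS = (
    "meta-llama/llama-4-scout-17b-16e-instruct",
    "llama-3.3-70b-versatile",
    "llama-3.1-8b-instant",
)


def build_tool_call_payload(model: str, user_message: str) -> dict:
    """Return an OpenAI-style chat payload that exposes one tool to the model."""
    if model not in RECOMMENDED_MODELS:
        raise ValueError(f"{model!r} is not one of the recommended models")

    # Hypothetical tool definition; the parameters are described with JSON Schema.
    order_status_tool = {
        "type": "function",
        "function": {
            "name": "get_order_status",
            "description": "Look up the status of a customer order.",
            "parameters": {
                "type": "object",
                "properties": {
                    "order_id": {"type": "string", "description": "The order identifier."},
                },
                "required": ["order_id"],
            },
        },
    }
    return {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
        "tools": [order_status_tool],
        # "auto" lets the model decide whether to call the tool or answer directly.
        "tool_choice": "auto",
    }


payload = build_tool_call_payload(RECOMMENDED_MODELS[0], "Where is order 1234?")
print(json.dumps(payload, indent=2))
```

A model without tool support would either reject the `tools` field or ignore it, which is why the model choice matters for agents that call external tools.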

Set up Llama 3.3 on Groq Cloud

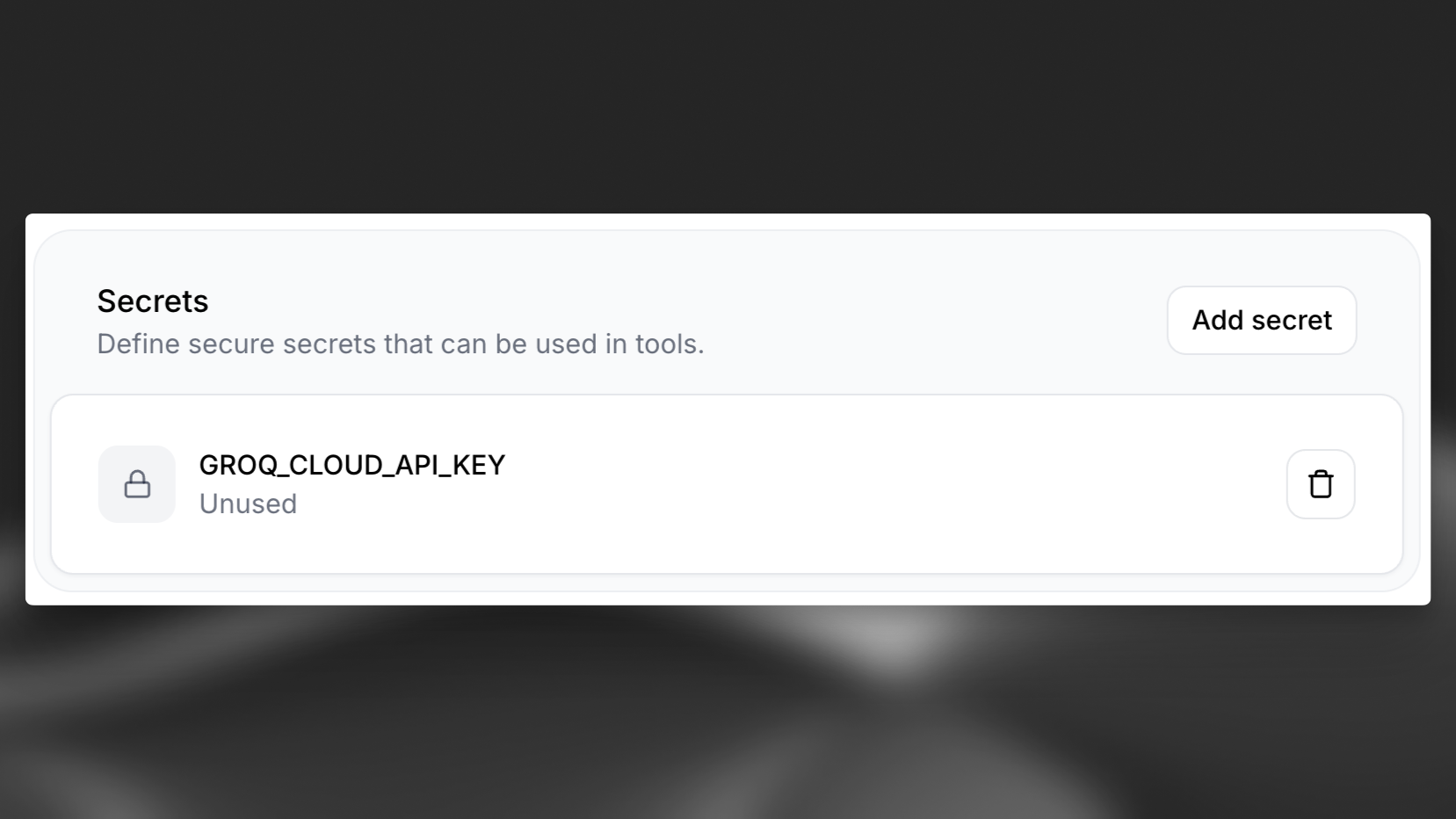

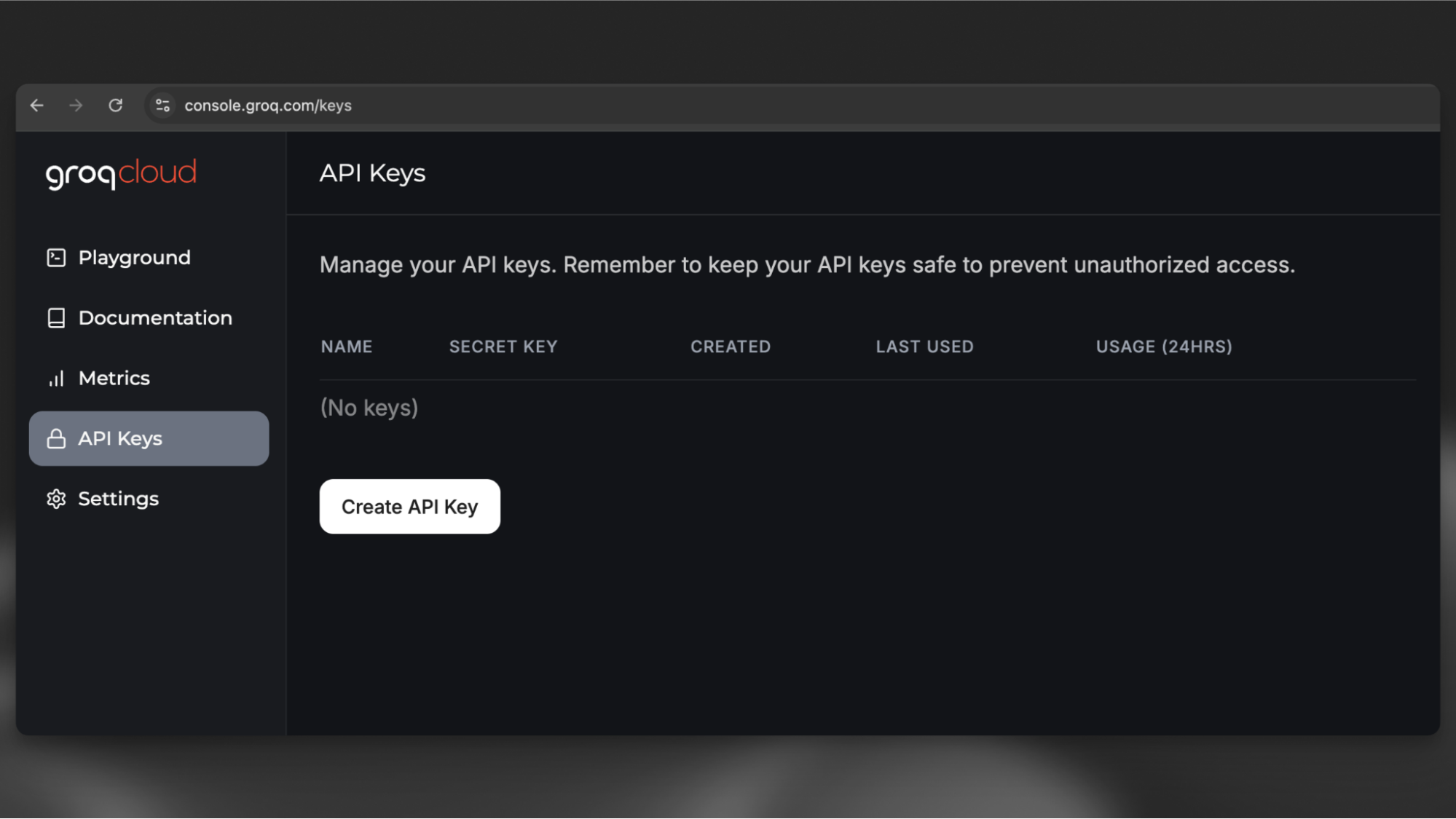

Navigate to your AI Agent, scroll down to the “Secrets” section and select “Add Secret”. After adding the secret, make sure to hit “Save” to make the secret available to your agent.