Quickstart

In this guide, you’ll learn how to create your first conversational agent. This will serve as a foundation for building conversational workflows tailored to your business use cases.

Getting started

ElevenLabs Agents can be managed through the ElevenAgents dashboard, the ElevenLabs API, or the Agents CLI.

Creating your first agent

In this quickstart guide, we’ll start by creating an agent via the web dashboard, the CLI, or the API. Next, we’ll test the agent, either by embedding it in your website or via the ElevenLabs dashboard.

Build an agent via the web dashboard

Build an agent via the CLI

Build an agent via the API

In this guide, we’ll create a conversational support assistant capable of answering questions about your product, documentation, or service. This assistant can be embedded into your website or app to provide real-time support to your customers.

Create a new assistant

In the ElevenLabs Dashboard, create a new assistant by entering a name and selecting the Blank template option.

Configure the assistant behavior

Go to the Agent tab to configure the assistant’s behavior. Set the following:

Add a knowledge base

Go to the Knowledge Base section to provide your assistant with context about your business.

This is where you can upload relevant documents and links to external resources:

- Include documentation, FAQs, and other resources to help the assistant respond to customer inquiries.

- Keep the knowledge base up-to-date to ensure the assistant provides accurate and current information.

Next we’ll configure the voice for your assistant.

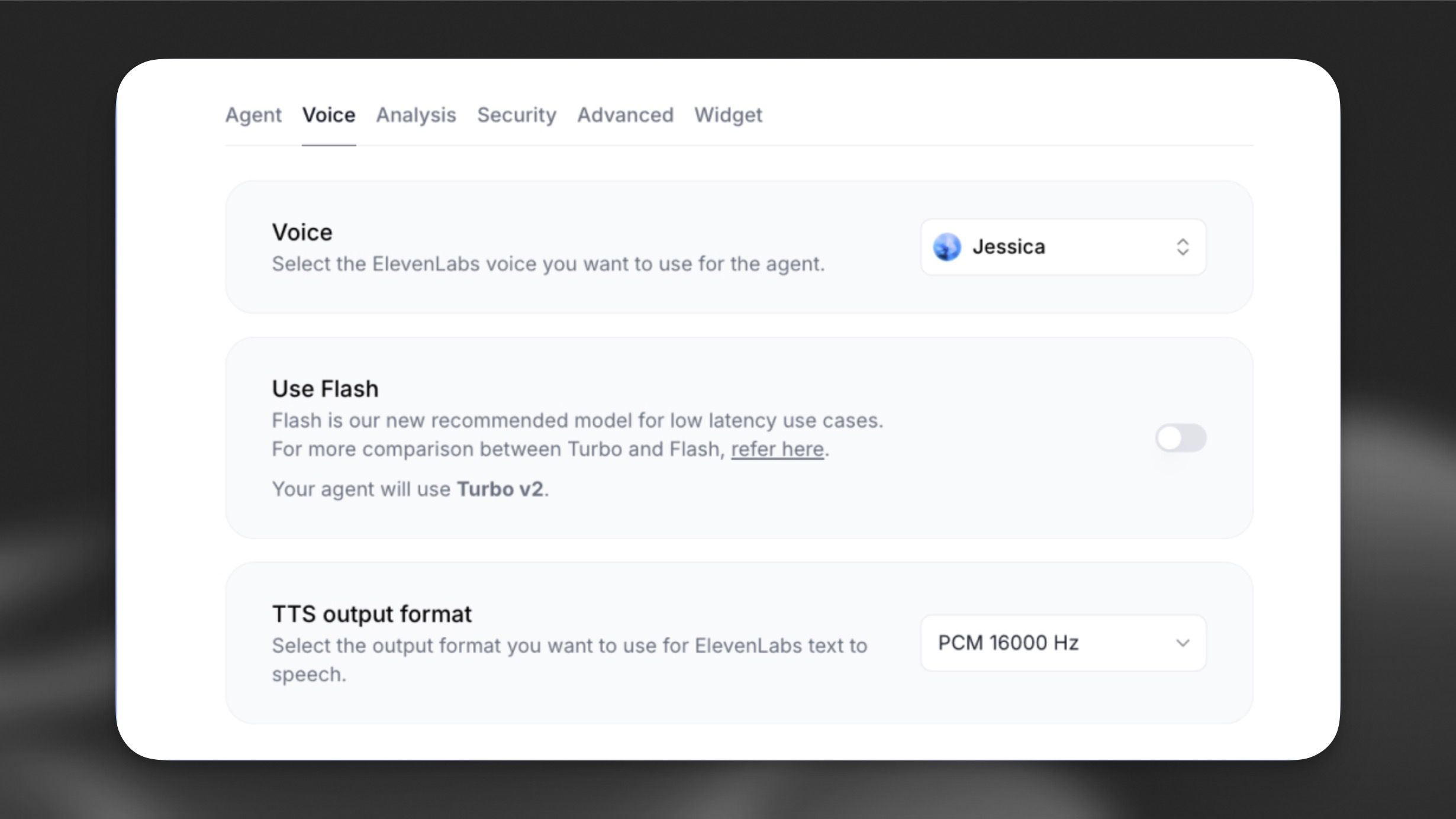

Select a voice

In the Voice tab, choose the voice from the voice library that best matches your assistant:

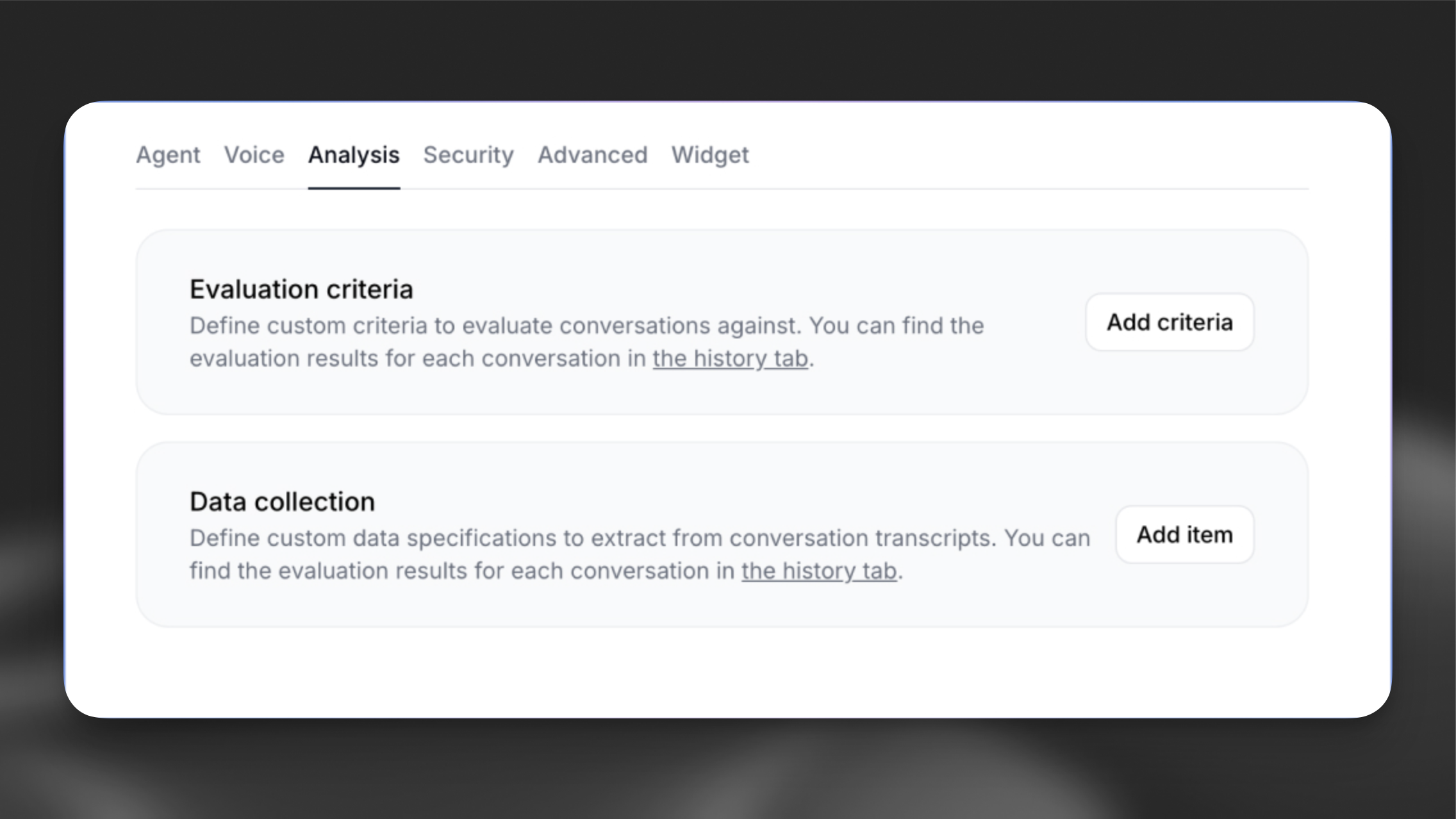

Configure evaluation criteria and data collection to analyze conversations and improve your assistant’s performance.

Configure evaluation criteria

Navigate to the Analysis tab in your assistant’s settings to define custom criteria for evaluating conversations.

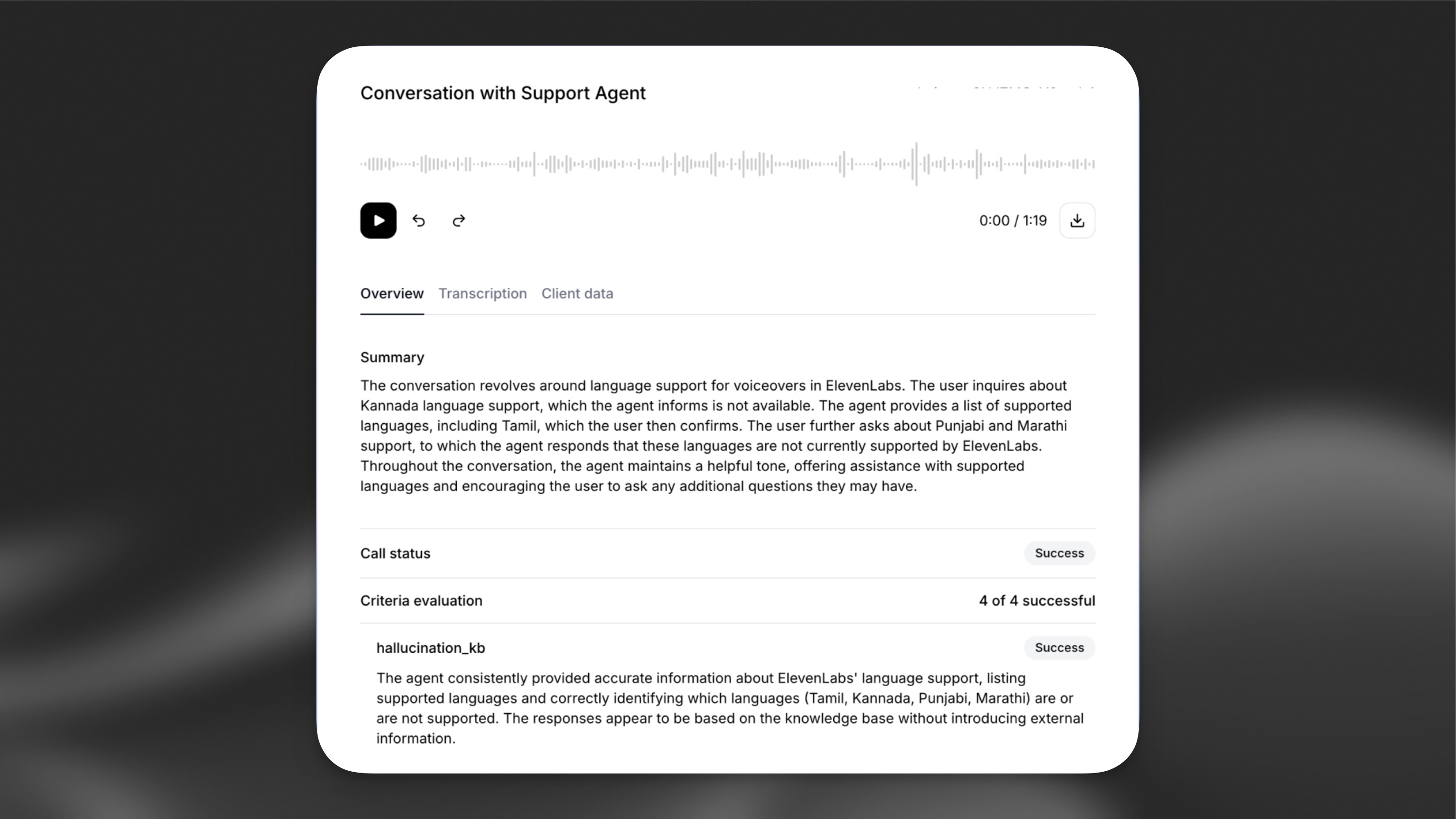

Every conversation transcript is passed to the LLM to verify whether specific goals were met. Each result is either success, failure, or unknown, along with a rationale explaining the chosen result.
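As a rough illustration of the result shape described above, the sketch below models one evaluated criterion in Python. The class names, the `parse_outcome` helper, and the `{"result": ..., "rationale": ...}` payload layout are hypothetical and chosen for this example only. They are not taken from the ElevenLabs API.

```python
from dataclasses import dataclass
from enum import Enum


class EvaluationResult(Enum):
    """The three outcomes the guide names for an evaluated criterion."""
    SUCCESS = "success"
    FAILURE = "failure"
    UNKNOWN = "unknown"


@dataclass(frozen=True)
class CriterionOutcome:
    """One criterion's verdict plus the rationale behind it (hypothetical shape)."""
    criterion: str
    result: EvaluationResult
    rationale: str


def parse_outcome(criterion: str, raw: dict) -> CriterionOutcome:
    """Build an outcome from an assumed {"result": ..., "rationale": ...} payload.

    Any value other than success/failure/unknown is treated as unknown.
    """
    try:
        result = EvaluationResult(raw.get("result", "unknown"))
    except ValueError:
        result = EvaluationResult.UNKNOWN
    return CriterionOutcome(criterion, result, raw.get("rationale", ""))


outcome = parse_outcome(
    "solved_user_inquiry",
    {"result": "success", "rationale": "The user confirmed the answer helped."},
)
print(outcome.result.value, "-", outcome.rationale)
```

Mapping unexpected values to unknown keeps downstream reporting from failing on a payload it does not recognise.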

Let’s add an evaluation criterion with the name solved_user_inquiry:

Configure data collection

In the Data Collection section, configure details to be extracted from each conversation.

Click Add item and configure the following:

- Data type: Select “string”

- Identifier: Enter a unique identifier for this data point: user_question

- Description: Provide detailed instructions for the LLM about how to extract the specific data from the transcript:
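To make the three fields concrete, here is a minimal Python sketch of a data collection item and a check that identifiers stay unique. `DataCollectionItem` and `index_items` are hypothetical names for illustration. The description string is a placeholder written for this example, not the text from the dashboard or the original guide.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataCollectionItem:
    """Hypothetical in-memory model of one data collection item."""
    identifier: str
    data_type: str
    description: str

    def __post_init__(self) -> None:
        # Both fields are needed for the LLM to know what to extract.
        if not self.identifier.strip():
            raise ValueError("identifier must not be empty")
        if not self.description.strip():
            raise ValueError("description must not be empty")


def index_items(items: list[DataCollectionItem]) -> dict[str, DataCollectionItem]:
    """Index items by identifier, rejecting duplicates."""
    indexed: dict[str, DataCollectionItem] = {}
    for item in items:
        if item.identifier in indexed:
            raise ValueError(f"duplicate identifier: {item.identifier}")
        indexed[item.identifier] = item
    return indexed


user_question = DataCollectionItem(
    identifier="user_question",
    data_type="string",
    # Placeholder wording, assumed for illustration only.
    description="Summarise the question the user asked, in one sentence.",
)
items = index_items([user_question])
print(sorted(items))
```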

The newly created agent can be tested in a variety of ways, but the quickest way is to use the ElevenLabs dashboard.

The web dashboard uses our React SDK under the hood to handle real-time conversations.

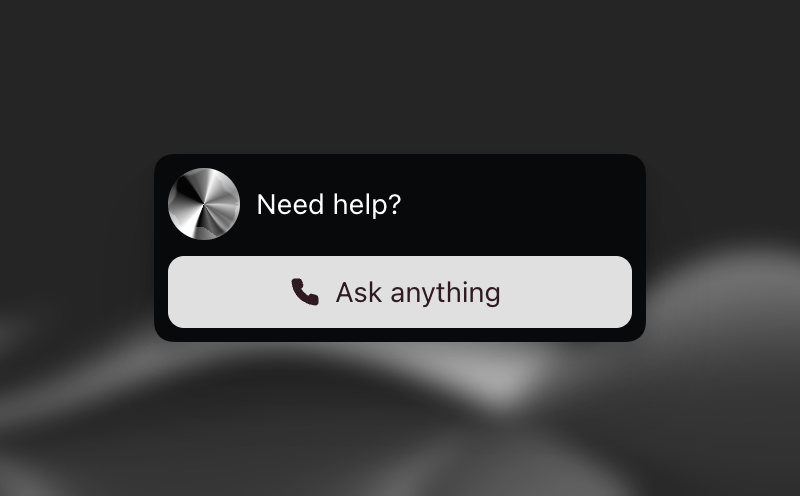

If you would rather test the agent on your own website, you can use the Agent widget. Paste the following HTML snippet into your website, taking care to replace agent-id with the ID of your agent.
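If you generate pages server-side, a small helper can fill in the agent ID for you. The sketch below is an assumption-laden example: the `elevenlabs-convai` tag name and the script URL are assumed here and should be checked against the embed snippet shown in your dashboard. `render_widget_snippet` is a hypothetical helper name.

```python
from html import escape

# Assumption: the widget script URL; confirm it against your dashboard's snippet.
WIDGET_SCRIPT_URL = "https://unpkg.com/@elevenlabs/convai-widget-embed"


def render_widget_snippet(agent_id: str) -> str:
    """Return an HTML embed snippet with the agent-id attribute filled in."""
    if not agent_id.strip():
        raise ValueError("agent_id must not be empty")
    # Escape the ID so it cannot break out of the attribute value.
    safe_id = escape(agent_id, quote=True)
    return (
        # Assumption: the custom element name for the Agent widget.
        f'<elevenlabs-convai agent-id="{safe_id}"></elevenlabs-convai>\n'
        f'<script src="{WIDGET_SCRIPT_URL}" async type="text/javascript"></script>'
    )


# "your-agent-id" is a placeholder, not a real agent ID.
print(render_widget_snippet("your-agent-id"))
```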

Next steps

As a follow-up to this quickstart guide, you can make your agent more effective by integrating:

- Knowledge bases to equip it with domain-specific information.

- Tools to allow it to perform tasks on your behalf.

- Authentication to restrict access to certain conversations.

- Success evaluation to analyze conversations and improve its performance.

- Data collection to extract structured details from each conversation.

- Conversation retention to view conversation history.