Building the ElevenLabs documentation agent

Overview

Our documentation agent Alexis serves as an interactive assistant on the ElevenLabs documentation website, helping users navigate our product offerings and technical documentation. This guide outlines how we engineered Alexis to provide natural, helpful guidance using ElevenLabs Agents.

Agent design

We built our documentation agent with three key principles:

- Human-like interaction: Creating natural, conversational experiences that feel like speaking with a knowledgeable colleague

- Technical accuracy: Ensuring responses reflect our documentation precisely

- Contextual awareness: Helping users based on where they are in the documentation

Personality and voice design

Character development

Alexis was designed with a distinct personality: friendly, proactive, and highly intelligent, with deep technical expertise. Her character balances:

- Technical expertise with warm, approachable explanations

- Professional knowledge with a relaxed conversational style

- Empathetic listening with intuitive understanding of user needs

- Self-awareness that acknowledges her own limitations when appropriate

This personality lets Alexis adapt to different users, matching their tone while keeping her core traits of curiosity, helpfulness, and natural conversational flow.

Voice selection

After extensive testing, we selected a voice that reinforces Alexis’s character traits:

This voice provides a warm, natural quality with subtle speech disfluencies that make interactions feel authentic and human.

Voice settings optimization

We fine-tuned the voice parameters to match Alexis’s personality:

- Stability: 0.45, allowing emotional range while maintaining clarity

- Similarity: 0.75, ensuring consistent voice characteristics

- Speed: 1.0, keeping a natural conversational pace

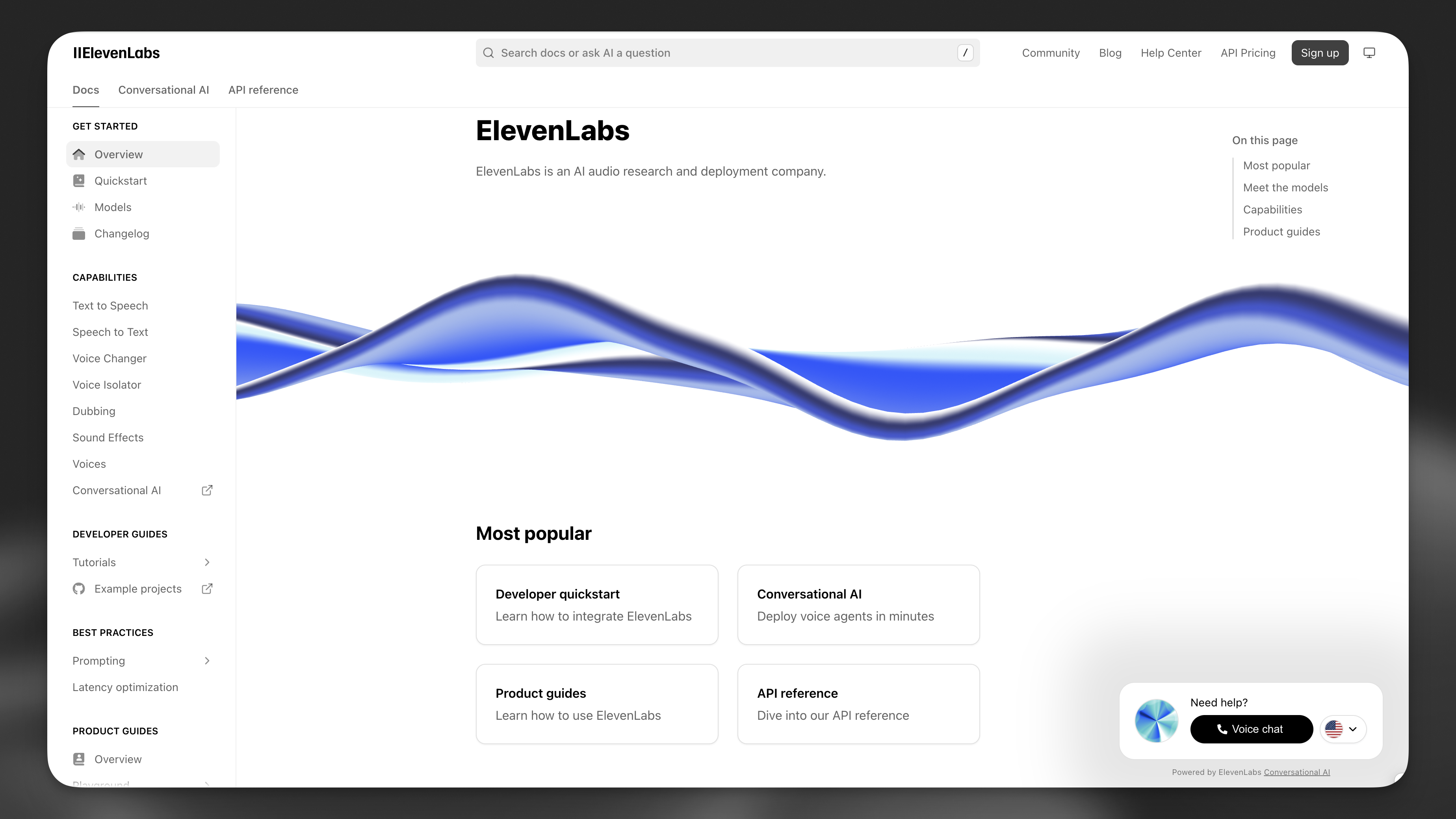

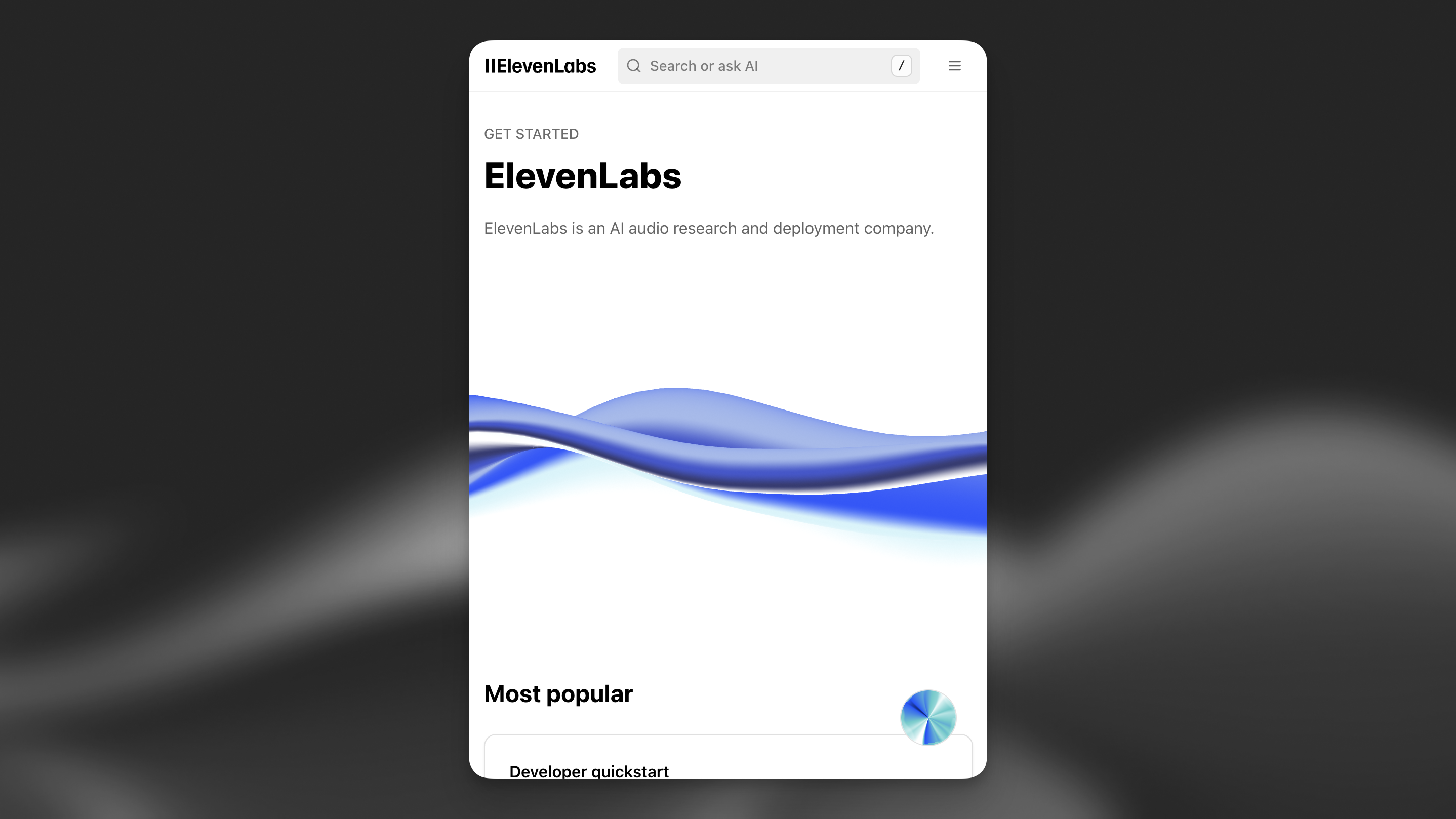
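The settings above can be captured as a small typed config that is checked before it is applied. This is a minimal sketch: the field names (`stability`, `similarityBoost`, `speed`) and the validation ranges, in particular the 0.7 to 1.2 speed bounds, are assumptions for illustration rather than the platform's confirmed schema.

```typescript
// Sketch of Alexis's voice settings as a typed config object.
// Field names and allowed ranges are assumptions, not a confirmed API schema.
interface VoiceSettings {
  stability: number;       // lower = more expressive, higher = more consistent
  similarityBoost: number; // how closely output tracks the reference voice
  speed: number;           // 1.0 = normal speaking rate
}

const alexisVoice: VoiceSettings = {
  stability: 0.45,
  similarityBoost: 0.75,
  speed: 1.0,
};

// Returns a list of problems; an empty list means the settings look valid.
// Ranges: 0 to 1 for stability and similarity, 0.7 to 1.2 for speed (assumed).
function validateVoiceSettings(s: VoiceSettings): string[] {
  const errors: string[] = [];
  if (s.stability < 0 || s.stability > 1) {
    errors.push("stability must be between 0 and 1");
  }
  if (s.similarityBoost < 0 || s.similarityBoost > 1) {
    errors.push("similarityBoost must be between 0 and 1");
  }
  if (s.speed < 0.7 || s.speed > 1.2) {
    errors.push("speed must be between 0.7 and 1.2");
  }
  return errors;
}

console.log(validateVoiceSettings(alexisVoice)); // prints: []
```

Keeping the values in one typed object makes it easy to compare candidate settings during voice testing and to catch out-of-range values before they reach the agent.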

Widget structure

The widget automatically adapts to different screen sizes, displaying in a compact format on mobile devices to conserve screen space while maintaining full functionality. This responsive design ensures users can access AI assistance regardless of their device.

Prompt engineering structure

Following our prompting guide, we structured Alexis’s system prompt into the six core building blocks we recommend for all agents.
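To illustrate the block structure, here is a hypothetical prompt assembler. It assumes the six building blocks are Personality, Environment, Tone, Goal, Guardrails, and Tools, following our reading of the prompting guide; the function name `buildSystemPrompt` and the placeholder text are illustrative and are not Alexis's actual prompt.

```typescript
// Hypothetical assembler: the six section names reflect our reading of the
// prompting guide; section bodies below are placeholders only.
const SECTION_ORDER = [
  "Personality",
  "Environment",
  "Tone",
  "Goal",
  "Guardrails",
  "Tools",
] as const;

type Section = (typeof SECTION_ORDER)[number];

// Joins the sections in a fixed order under markdown-style headings and
// rejects empty sections so a missing block is caught early.
function buildSystemPrompt(sections: Record<Section, string>): string {
  return SECTION_ORDER.map((name) => {
    const body = sections[name].trim();
    if (body.length === 0) {
      throw new Error(`Section "${name}" is empty`);
    }
    return `# ${name}\n\n${body}`;
  }).join("\n\n");
}

// Placeholder content for illustration only.
const prompt = buildSystemPrompt({
  Personality: "You are Alexis, a friendly and proactive documentation assistant.",
  Environment: "You are embedded on the ElevenLabs documentation site.",
  Tone: "Warm, concise, and conversational.",
  Goal: "Help users find and understand the right documentation.",
  Guardrails: "Only answer from the knowledge base; say so when you are unsure.",
  Tools: "Use the available client tools to point users to relevant pages.",
});

console.log(prompt.split("\n")[0]); // prints: # Personality
```

Using a typed record means the compiler flags a missing block, and the fixed order keeps every agent's prompt laid out the same way.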

Here’s our complete system prompt:

Technical implementation

RAG configuration

We use retrieval-augmented generation (RAG) to ground Alexis’s answers in her knowledge base:

- Embedding model: e5-mistral-7b-instruct

- Maximum retrieved content: 50,000 characters

- Content sources:
  - FAQ database
  - Entire documentation (elevenlabs.io/docs/llms-full.txt)
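The 50,000-character limit acts as a retrieval budget. The sketch below shows one way such a budget can be enforced: rank chunks by cosine similarity to the query embedding, then keep the best ones that still fit. It is an illustration under stated assumptions, not the platform's retrieval code; in practice the vectors would come from e5-mistral-7b-instruct, while the `Chunk` shape, the `retrieve` function, and the greedy skip-if-too-large policy are hypothetical.

```typescript
// Hypothetical chunk shape: the embedding would come from the embedding model.
interface Chunk {
  source: string;
  text: string;
  embedding: number[];
}

// Cosine similarity between two equal-length vectors (0 if either is all zeros).
function cosine(a: number[], b: number[]): number {
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  return normA === 0 || normB === 0 ? 0 : dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Ranks chunks by similarity to the query, then greedily keeps the
// highest-ranked chunks whose combined length stays within maxChars.
// A chunk that would overflow the budget is skipped, so a smaller,
// lower-ranked chunk can still fill the remaining space.
function retrieve(query: number[], chunks: Chunk[], maxChars = 50_000): Chunk[] {
  const ranked = [...chunks].sort(
    (x, y) => cosine(query, y.embedding) - cosine(query, x.embedding),
  );
  const picked: Chunk[] = [];
  let used = 0;
  for (const chunk of ranked) {
    if (used + chunk.text.length > maxChars) continue;
    picked.push(chunk);
    used += chunk.text.length;
  }
  return picked;
}
```

Skipping an oversized chunk rather than stopping at the first one that overflows uses more of the budget; stopping instead would be a reasonable choice if strict rank order matters more than coverage.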

Authentication and security

We use allowlists to ensure Alexis is only accessible from our own domain, elevenlabs.io.
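The allowlist itself is configured on the platform, but the matching logic such a check needs is easy to get subtly wrong. A minimal sketch, assuming HTTPS-only origins and that subdomains of an allowed host are also permitted; both assumptions and the `isAllowedOrigin` name are illustrative, not a description of how the platform enforces its allowlist.

```typescript
// Sketch of origin allowlist matching. Assumptions: only https origins are
// accepted, and subdomains of an allowlisted host are allowed too.
function isAllowedOrigin(origin: string, allowlist: string[]): boolean {
  let host: string;
  try {
    const url = new URL(origin);
    if (url.protocol !== "https:") return false;
    host = url.hostname.toLowerCase();
  } catch {
    return false; // not a parseable origin
  }
  return allowlist.some((allowed) => {
    const domain = allowed.toLowerCase();
    // Exact match, or a true subdomain ("docs.elevenlabs.io"). The leading dot
    // prevents look-alikes such as "evilelevenlabs.io" from matching.
    return host === domain || host.endsWith(`.${domain}`);
  });
}
```

Parsing with `URL` instead of comparing raw strings avoids accepting origins like `https://elevenlabs.io.example.com`, whose hostname only starts with the allowed domain.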

Widget implementation

The agent is injected into the documentation site using a client-side script, which passes in the client tools:

The widget automatically adapts to the site theme and device type, providing a consistent experience across all documentation pages.
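As a sketch of how client tools can be passed to the embedded widget, the TypeScript below assumes the widget element dispatches an `elevenlabs-convai:call` event whose `detail.config.clientTools` the page can populate, as described in the widget documentation at the time of writing; verify this against the current docs. The `redirectToDocs` tool, its `path` parameter, and the helper names `makeClientTools` and `registerClientTools` are hypothetical. Navigation is injected as a function so the logic can run outside a browser.

```typescript
// Hypothetical client tool signature: receives parameters chosen by the agent
// and returns a short result string the agent can relay to the user.
type ClientTool = (params: Record<string, unknown>) => string;

// Minimal shape of the event we assume the widget dispatches.
interface ConvaiCallEvent {
  detail: { config: { clientTools?: Record<string, ClientTool> } };
}

// Anything with addEventListener, so a fake can stand in for the real element.
interface WidgetLike {
  addEventListener(type: string, listener: (event: ConvaiCallEvent) => void): void;
}

// Builds the tool map. `navigate` performs the redirect (in a browser,
// something like url => window.location.assign(url)).
function makeClientTools(
  navigate: (url: string) => void,
  baseUrl = "https://elevenlabs.io",
): Record<string, ClientTool> {
  return {
    // Hypothetical tool: open a documentation page by its path.
    redirectToDocs: (params) => {
      const path = String(params.path ?? "");
      if (!path.startsWith("/docs")) {
        return "Refused: path must start with /docs";
      }
      const url = new URL(path, baseUrl).toString();
      navigate(url);
      return `Navigated to ${url}`;
    },
  };
}

// Attaches the tools whenever the widget starts a call.
function registerClientTools(widget: WidgetLike, navigate: (url: string) => void): void {
  widget.addEventListener("elevenlabs-convai:call", (event) => {
    event.detail.config.clientTools = makeClientTools(navigate);
  });
}
```

In the browser you would pass the `elevenlabs-convai` element from `document.querySelector` as the widget. Restricting the tool to `/docs` paths on a fixed base URL keeps the agent from sending users off-site, which complements the domain allowlist.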

Evaluation framework

To continuously improve Alexis’s performance, we implemented comprehensive evaluation criteria:

Agent performance metrics

We track several key metrics for each interaction:

- understood_root_cause: Did the agent correctly identify the user’s underlying concern?

- positive_interaction: Did the user remain emotionally positive throughout the conversation?

- solved_user_inquiry: Was the agent able to answer all queries or redirect appropriately?

- hallucination_kb: Did the agent provide accurate information from the knowledge base?
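Tracking these metrics over many conversations comes down to turning per-conversation results into rates. A minimal sketch, assuming each criterion resolves to `success`, `failure`, or `unknown`, and choosing (as an assumption) to leave `unknown` results out of the denominator; the `passRates` helper is hypothetical.

```typescript
// Assumed result values for each evaluation criterion.
type CriterionResult = "success" | "failure" | "unknown";

// One conversation's results, keyed by criterion name (e.g. "hallucination_kb").
type Evaluation = Record<string, CriterionResult>;

// Computes, per criterion, successes / (successes + failures).
// "unknown" results are excluded from the denominator; a criterion with no
// judged results gets NaN rather than a misleading 0.
function passRates(evals: Evaluation[]): Record<string, number> {
  const tally: Record<string, { pass: number; judged: number }> = {};
  for (const evaluation of evals) {
    for (const [criterion, result] of Object.entries(evaluation)) {
      const t = (tally[criterion] ??= { pass: 0, judged: 0 });
      if (result === "unknown") continue;
      t.judged += 1;
      if (result === "success") t.pass += 1;
    }
  }
  const rates: Record<string, number> = {};
  for (const [criterion, t] of Object.entries(tally)) {
    rates[criterion] = t.judged === 0 ? NaN : t.pass / t.judged;
  }
  return rates;
}
```

Excluding `unknown` keeps ambiguous conversations from dragging a rate down, at the cost of hiding how often the evaluator could not decide; tracking that count separately is a reasonable complement.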

Data collection

We also collect structured data from each conversation to analyze patterns:

- issue_type: Categorization of the conversation (bug report, feature request, etc.)

- userIntent: The primary goal of the user

- product_category: Which ElevenLabs product the conversation primarily concerned

- communication_quality: How clearly the agent communicated, from “poor” to “excellent”
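Analyzing patterns in these fields usually starts with simple frequency counts. The sketch below treats each collected field as a plain string (the intermediate levels between “poor” and “excellent” are not specified, so none are assumed); the `CollectedData` shape and `countBy` helper are hypothetical.

```typescript
// Hypothetical shape of the structured data collected per conversation.
interface CollectedData {
  issue_type: string;
  userIntent: string;
  product_category: string;
  communication_quality: string; // ranges from "poor" to "excellent"
}

// Counts how often each value of one field occurs, most frequent first.
function countBy<K extends keyof CollectedData>(
  rows: CollectedData[],
  field: K,
): Map<string, number> {
  const counts = new Map<string, number>();
  for (const row of rows) {
    const key = row[field];
    counts.set(key, (counts.get(key) ?? 0) + 1);
  }
  // Sort entries by descending count; ties keep first-seen order.
  return new Map([...counts.entries()].sort((a, b) => b[1] - a[1]));
}
```

For example, `countBy(rows, "product_category")` shows which products generate the most questions, which can guide where to expand the knowledge base.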

This evaluation framework allows us to continually refine Alexis’s behavior, knowledge, and communication style.

Results and learnings

Since implementing our documentation agent, we’ve observed several key benefits:

- Reduced support volume: Common questions are now handled directly through the documentation agent

- Improved user satisfaction: Users get immediate, contextual help without leaving the documentation

- Better product understanding: The agent can explain complex concepts in accessible ways

Our key learnings include:

- Importance of personality: A well-defined character creates more engaging interactions

- RAG effectiveness: Retrieval-augmented generation significantly improves response accuracy

- Continuous improvement: Regular analysis of interactions helps refine the agent over time

Next steps

We continue to enhance our documentation agent through:

- Expanding knowledge: Adding new products and features to the knowledge base

- Refining responses: Improving explanation quality for complex topics by reviewing flagged conversations

- Adding capabilities: Integrating new tools to better assist users

FAQ

Why did you choose a conversational approach for documentation?

Documentation is traditionally static, but users often have specific questions that require contextual understanding. A conversational interface allows users to ask questions in natural language and receive targeted guidance that adapts to their needs and technical level.

How do you prevent hallucinations in documentation responses?

We use retrieval-augmented generation (RAG) with the e5-mistral-7b-instruct embedding model to ground responses in our documentation. We also implemented the hallucination_kb evaluation metric to identify and address any inaccuracies.

How do you handle multilingual support?

We use the language detection system tool, which automatically detects the user’s language and switches to it if supported. This allows users to interact with our documentation in their preferred language without manual configuration.
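The detection itself is performed by the platform tool, but the “switch to it if supported” rule can be modeled in a few lines. A hypothetical sketch: the `resolveLanguage` name, the supported-language list, and the choice to compare only base language codes (so `pt-BR` matches `pt`) are all assumptions.

```typescript
// Hypothetical model of the switching rule: move to the detected language if
// it is supported, otherwise keep the current one.
function resolveLanguage(
  detected: string,
  supported: readonly string[],
  current: string,
): string {
  // Compare base language codes only, e.g. "pt-BR" -> "pt" (an assumption).
  const base = detected.trim().toLowerCase().split("-")[0];
  return supported.includes(base) ? base : current;
}

// Illustrative list only; not the platform's actual set of languages.
const SUPPORTED = ["en", "de", "es", "pt"] as const;
```

With this list, `resolveLanguage("pt-BR", SUPPORTED, "en")` returns `"pt"`, while an unsupported detection such as `"ja"` leaves the conversation in `"en"`.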