Eleven v3 Audio Tags: Situational awareness for AI audio

Enhance AI speech with Eleven v3 Audio Tags. Control tone, emotion, and pacing for natural conversation. Add situational awareness to text to speech.

ElevenLabs audio tags let you control the emotion, pacing, and sound effects of an AI voice.

With the release of Eleven v3, audio prompting has become a key skill. Instead of only typing the text you want the AI voice to read, you can now use a new feature, audio tags, to control everything from emotion to delivery.

Eleven v3 is an alpha release, a research preview of the new model. It requires more prompting than earlier models, but the results are striking.

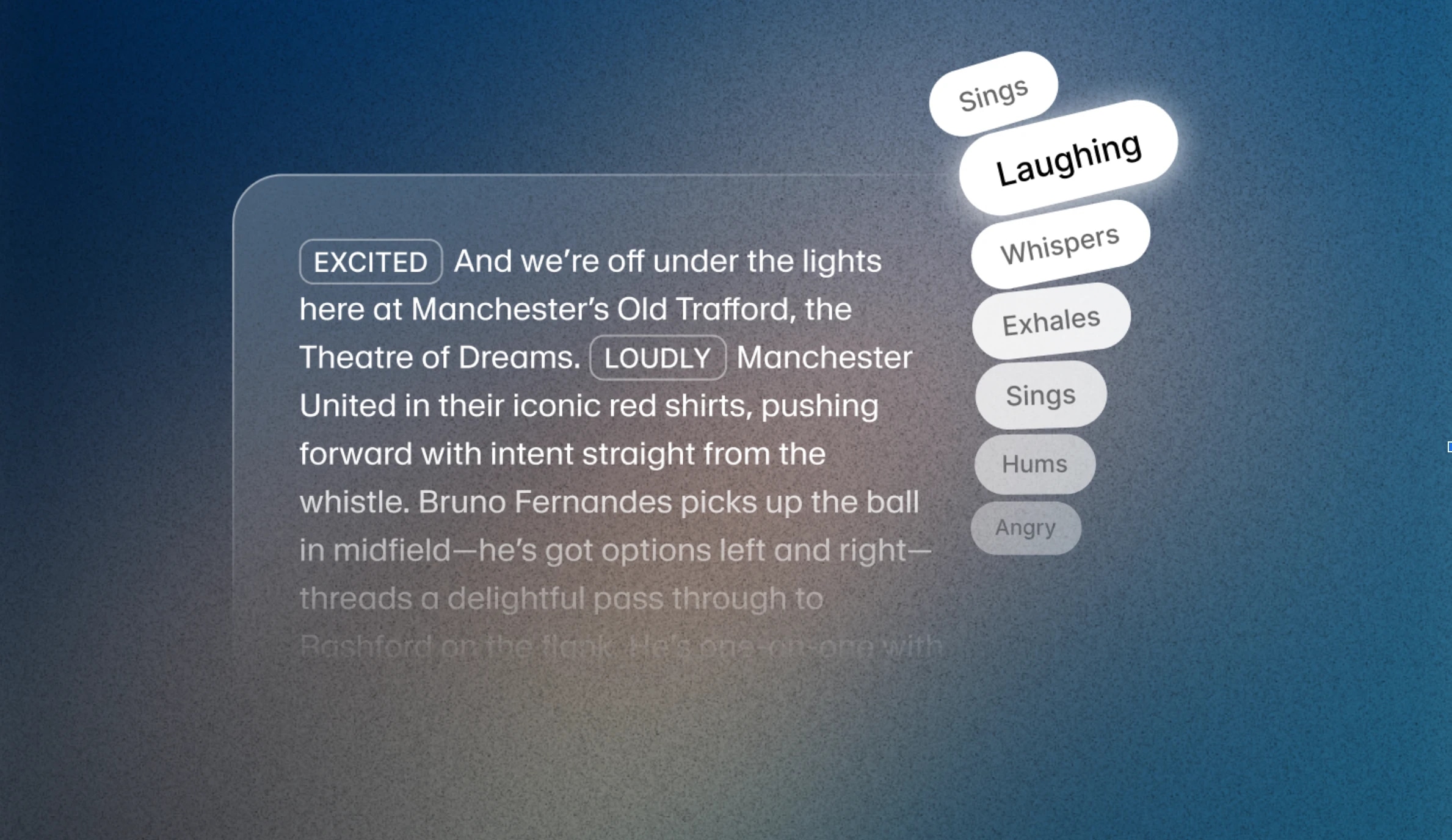

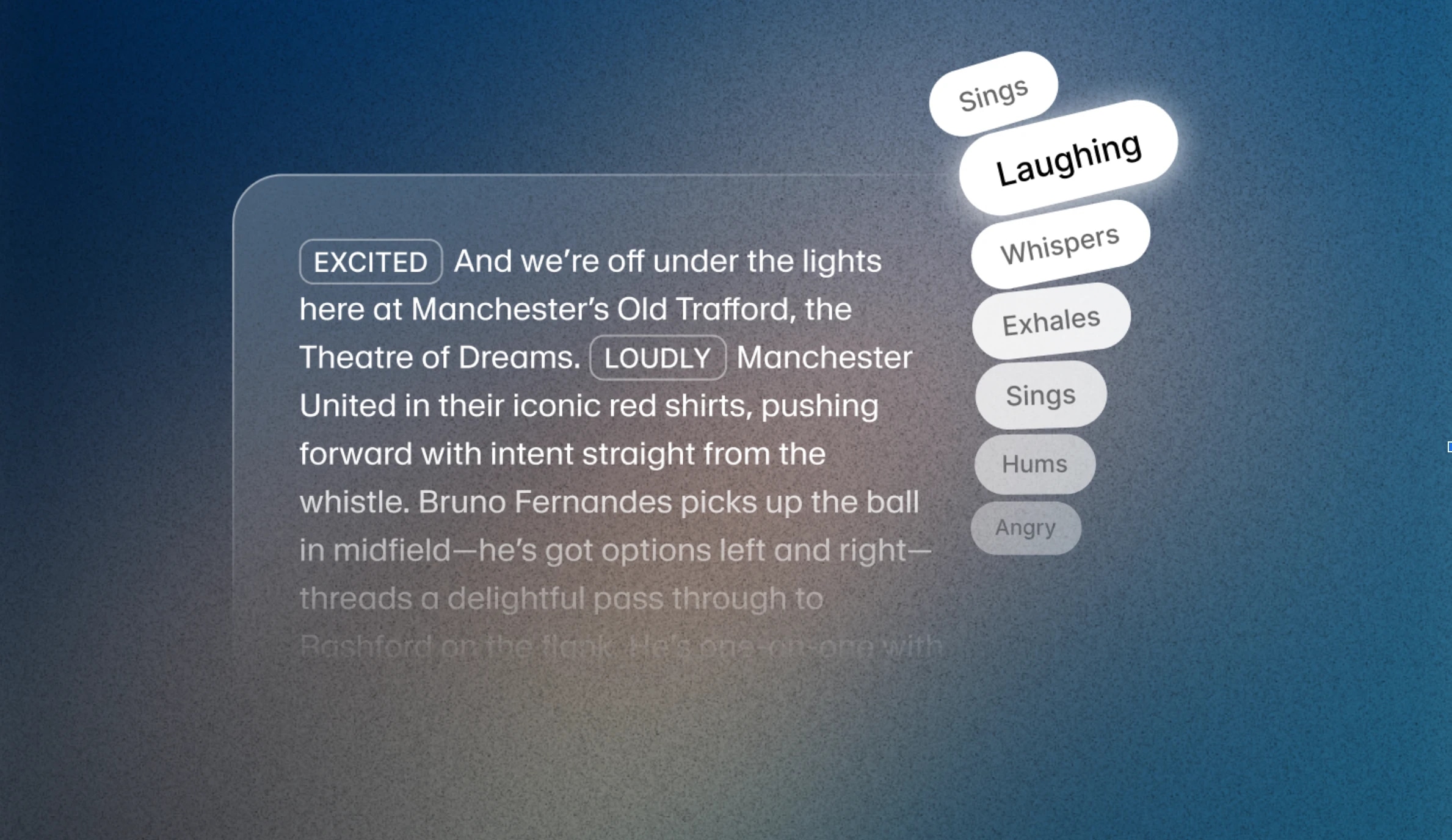

ElevenLabs audio tags are words in square brackets that the new Eleven v3 model understands and uses to direct the audio. Examples include [excited], [whispers], and [sighs], as well as sound effects such as [gunshot], [clapping], and [explosion].
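To make the bracket syntax concrete, here is a minimal sketch that finds the tags in a script and separates them from the spoken words. The helper names `find_audio_tags` and `strip_audio_tags` are hypothetical and not part of any ElevenLabs library; the pattern simply assumes a tag is any text between one pair of square brackets.

```python
import re

# Assumption: a tag is any run of text between "[" and "]" with no nested brackets.
TAG_PATTERN = re.compile(r"\[([^\[\]]+)\]")


def find_audio_tags(script: str) -> list[str]:
    """Return the tag names in the order they appear in the script."""
    return [name.strip() for name in TAG_PATTERN.findall(script)]


def strip_audio_tags(script: str) -> str:
    """Return only the words that would be spoken, with tags removed."""
    without_tags = TAG_PATTERN.sub("", script)
    # Collapse the whitespace left behind by removed tags.
    return " ".join(without_tags.split())


script = "[whispers] Did you hear that? [gunshot] [excited] Run!"
print(find_audio_tags(script))   # ['whispers', 'gunshot', 'excited']
print(strip_audio_tags(script))  # Did you hear that? Run!
```

A check like this can be useful before sending a script to the model, for example to list which tags a long script relies on.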

Audio tags let you shape how an AI voice sounds, including non-verbal cues such as tone, pauses, and pacing. Whether you are producing audiobooks, interactive characters, or dialogue-driven media, these simple tools give you precise control over emotion and delivery.

You can place audio tags anywhere in a script to change the delivery as it goes. You can also combine several tags in one script, or even in one sentence. Tags fall into three main categories:

Emotion tags set the emotional tone of the voice, whether serious, intense, or cheerful. For example, you can use [sad], [angry], [happily], or [sorrowful], alone or together.

Delivery tags control tone and performance. They let you change volume and energy in scenes that call for quiet or force. Examples: [whispers], [shouts], and even [x accent], where x is the accent you want.

Natural speech also includes reactions. Reaction tags add realism by inserting spontaneous moments into the delivery. Examples: [laughs], [clears throat], [sighs].
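The three categories can be sketched as a small lookup table, together with a helper that combines several tags in front of one sentence. The category labels (`emotion`, `delivery`, `reaction`) and the helpers `categorize` and `with_tags` are illustrative assumptions; only the example tags themselves come from the text above, and the real model accepts many more.

```python
# Example tags from the article, grouped under hypothetical category labels.
TAG_CATEGORIES = {
    "emotion": {"sad", "angry", "happily", "sorrowful"},
    "delivery": {"whispers", "shouts"},
    "reaction": {"laughs", "clears throat", "sighs"},
}


def categorize(tag: str) -> str:
    """Map a tag such as "[sighs]" to its category, or "unknown"."""
    name = tag.strip("[] ").lower()
    for category, names in TAG_CATEGORIES.items():
        if name in names:
            return category
    # The article lists "[x accent]" with the delivery tags.
    if name.endswith(" accent"):
        return "delivery"
    return "unknown"


def with_tags(text: str, *tags: str) -> str:
    """Prefix a sentence with one or more bracketed tags."""
    prefix = " ".join(f"[{tag}]" for tag in tags)
    return f"{prefix} {text}" if prefix else text


line = with_tags("I never thought it would end like this.", "sad", "sighs")
print(line)                           # [sad] [sighs] I never thought it would end like this.
print(categorize("[French accent]"))  # delivery
```

Keeping the examples in a table like this is only a convenience for organising your own scripts; the model does not require tags to be declared anywhere.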

Behind these features is the new v3 architecture. The model understands the context of the text better, so it follows emotions, tone shifts, and transitions between characters more naturally. Combined with audio tags, this gives more expressiveness than was previously possible in TTS.

You can also create multi-character dialogue that sounds natural, with interruptions, mood changes, and conversational nuance, without complicated prompting.
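One way to keep a multi-character script organised is to store each turn with its speaker and tags, then render the turns as text. This is a sketch under assumptions: the `Turn` class, the `render_dialogue` helper, and the "Speaker: [tag] line" layout are hypothetical conventions chosen for illustration, not a format the article specifies.

```python
from dataclasses import dataclass


@dataclass
class Turn:
    """One line of dialogue with optional audio tags (hypothetical structure)."""
    speaker: str
    text: str
    tags: tuple[str, ...] = ()


def render_dialogue(turns: list[Turn]) -> str:
    """Render turns as "Speaker: [tag] line", one turn per line."""
    lines = []
    for turn in turns:
        prefix = "".join(f"[{tag}] " for tag in turn.tags)
        lines.append(f"{turn.speaker}: {prefix}{turn.text}")
    return "\n".join(lines)


scene = [
    Turn("Anna", "We got the part!", tags=("excited",)),
    Turn("Ben", "I was hoping for the lead.", tags=("sighs",)),
]
print(render_dialogue(scene))
# Anna: [excited] We got the part!
# Ben: [sighs] I was hoping for the lead.
```

Storing turns as data rather than as one long string makes it easier to change a character's mood or reorder lines without retyping the script.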

Professional Voice Clones (PVC) are not yet fully optimized for Eleven v3, so their quality may be lower than with previous models. During this preview phase, choose an Instant Voice Clone (IVC) or a designed voice if you want to use v3 features. PVC optimization for v3 is coming soon.

Eleven v3 is available in the ElevenLabs interface, and we are offering an 80% discount until the end of June. A public API for Eleven v3 (alpha) is also available. Whether you are experimenting or deploying at scale, now is a good time to explore the new capabilities.
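For readers who want to try the API, the sketch below prepares a text-to-speech request using only the Python standard library. It builds the request without sending it. The endpoint path, the `xi-api-key` header, and the `eleven_v3` model identifier are assumptions based on the general shape of the ElevenLabs REST API and should be checked against the current API reference; `build_tts_request`, `YOUR_VOICE_ID`, and `YOUR_API_KEY` are placeholders.

```python
import json
import urllib.request

# Assumed base URL for the text-to-speech endpoint; verify in the API reference.
API_BASE = "https://api.elevenlabs.io/v1/text-to-speech"


def build_tts_request(text: str, voice_id: str, api_key: str,
                      model_id: str = "eleven_v3") -> urllib.request.Request:
    """Prepare (but do not send) a POST request with tagged text."""
    body = json.dumps({"text": text, "model_id": model_id}).encode("utf-8")
    return urllib.request.Request(
        url=f"{API_BASE}/{voice_id}",
        data=body,
        headers={"xi-api-key": api_key, "Content-Type": "application/json"},
        method="POST",
    )


request = build_tts_request(
    "[whispers] Keep your voice down. [sighs] They are still out there.",
    voice_id="YOUR_VOICE_ID",
    api_key="YOUR_API_KEY",
)
print(request.full_url)

# To send the request and save the audio, something like:
# with urllib.request.urlopen(request) as response:
#     audio_bytes = response.read()
```

The tags travel inside the ordinary `text` field, so no extra parameters are needed to use them in this sketch.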

Creating AI speech that performs, rather than just reads, comes down to mastering audio tags. We have prepared seven short, practical guides that show how tags such as [WHISPER], [LAUGHS SOFTLY], or [French accent] let you control context, emotion, pacing, and even multi-character dialogue with a single model.

Tags such as [WHISPER], [SHOUTING], or [SIGH] let Eleven v3 react to the situation: raising tension, softening warnings, or pausing for effect.

From [pirate voice] to [French accent], tags turn narration into role-play. You can change character mid-sentence and direct whole scenes without switching models.

Tags such as [sigh], [excited], or [tired] let you steer emotion at any moment, building tension, relief, or humor without re-recording.

Tags such as [pause], [awe], or [dramatic tone] control rhythm and emphasis so the AI voice guides the listener through each scene.

Write multi-character dialogue with [interrupting], [overlapping], or shifts in tone. One model, many voices: natural conversation in a single pass.

Tags such as [pause], [rushed], or [drawn out] let you control pacing precisely and turn plain text into a real performance.

Use [American accent], [British accent], [Southern US accent], and others for richer speech without switching models.
