Integrate your own model

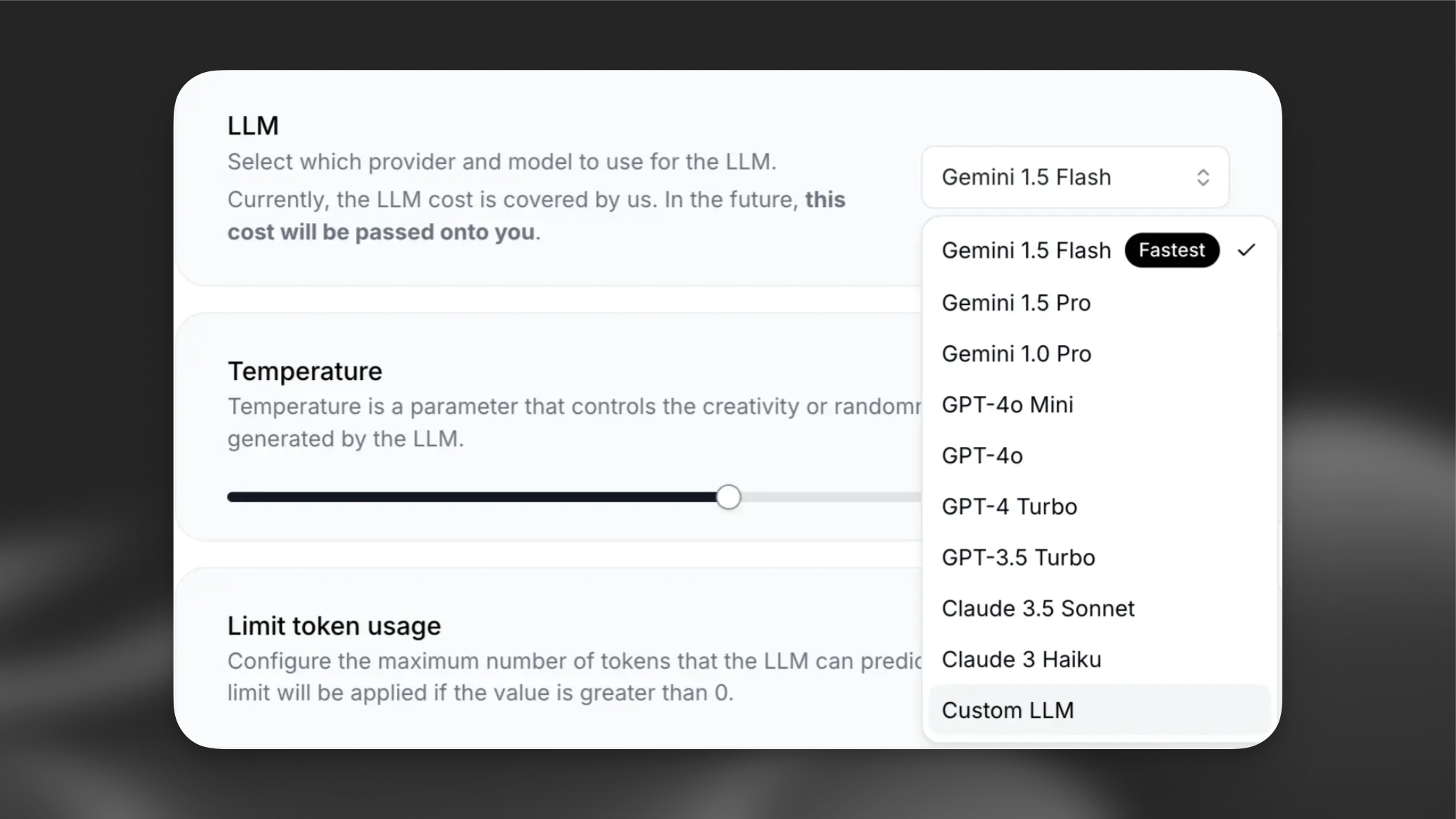

Custom LLM allows you to connect your conversations to your own LLM via an external endpoint. ElevenLabs also supports natively integrated LLMs.

Custom LLMs let you bring your own OpenAI API key or run an entirely custom LLM server.

Overview

By default, we use our own internal credentials for popular models such as OpenAI's. To use a custom LLM server, the server must follow the same request and response structure as OpenAI's create chat completion endpoint.
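For orientation, here is a minimal sketch of the request side of that contract. An OpenAI-style chat completion body is a JSON object with a `model` string and a `messages` list of role/content objects. The helper `last_user_message` and the sample values are illustrative assumptions, not part of the ElevenLabs API.

```python
import json

# An OpenAI-style create chat completion request body; the values are illustrative.
SAMPLE_REQUEST = json.dumps({
    "model": "gpt-4o",
    "stream": True,
    "messages": [
        {"role": "system", "content": "You are a helpful voice assistant."},
        {"role": "user", "content": "What time do you open tomorrow?"},
    ],
})


def last_user_message(raw_body: str) -> str:
    """Return the text of the most recent user turn in a chat completion request.

    Assumes plain-string message content.
    """
    body = json.loads(raw_body)
    messages = body.get("messages")
    if not isinstance(messages, list):
        raise ValueError("request body has no 'messages' list")
    for message in reversed(messages):
        if message.get("role") == "user":
            return message.get("content") or ""
    raise ValueError("request body contains no user message")


if __name__ == "__main__":
    print(last_user_message(SAMPLE_REQUEST))
```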

The following guides cover both use cases:

- Bring your own OpenAI key: Use your own OpenAI API key with our platform.

- Custom LLM server: Host and connect your own LLM server implementation.

You’ll learn how to:

- Store your OpenAI API key in ElevenLabs

- Host a server that replicates OpenAI’s create chat completion endpoint

- Direct ElevenLabs to your custom endpoint

- Pass extra parameters to your LLM as needed

Using your own OpenAI key

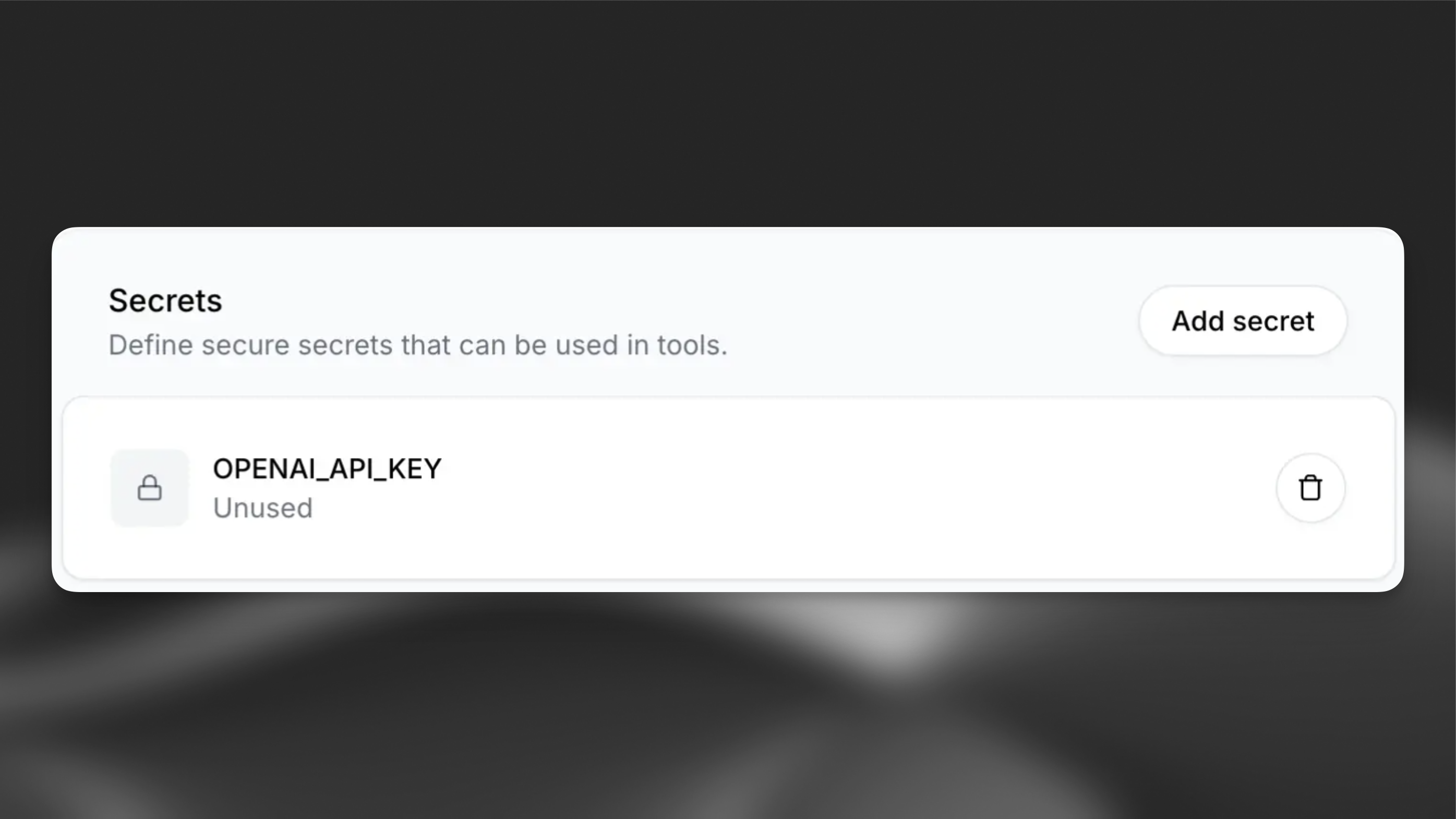

To integrate a custom OpenAI key, create a secret in ElevenLabs that contains your OPENAI_API_KEY.

Custom LLM Server

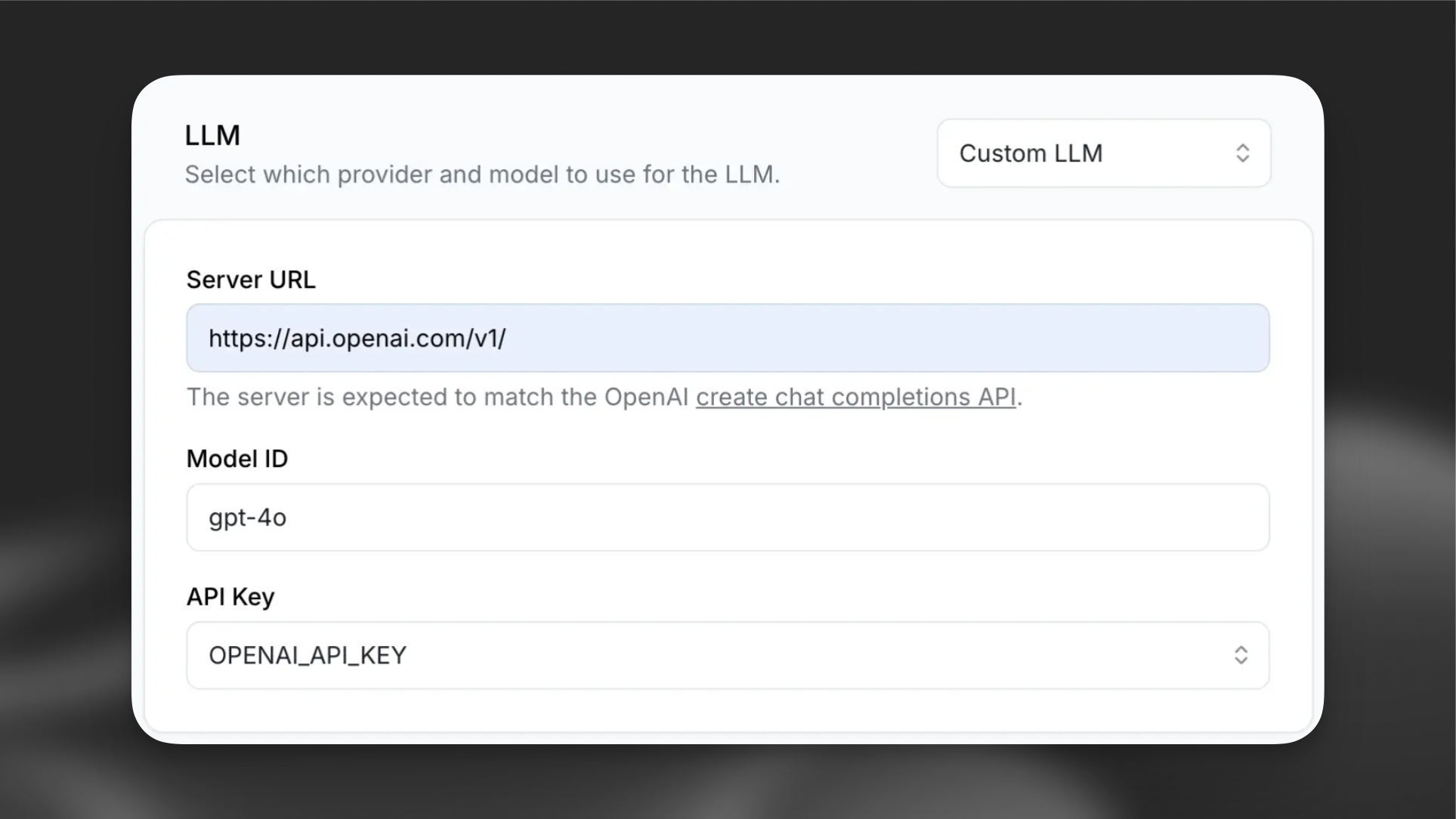

To bring a custom LLM server, set up a server endpoint that follows OpenAI’s API style, specifically one compatible with create_chat_completion.

Here’s an example server implementation using FastAPI and OpenAI’s Python SDK:

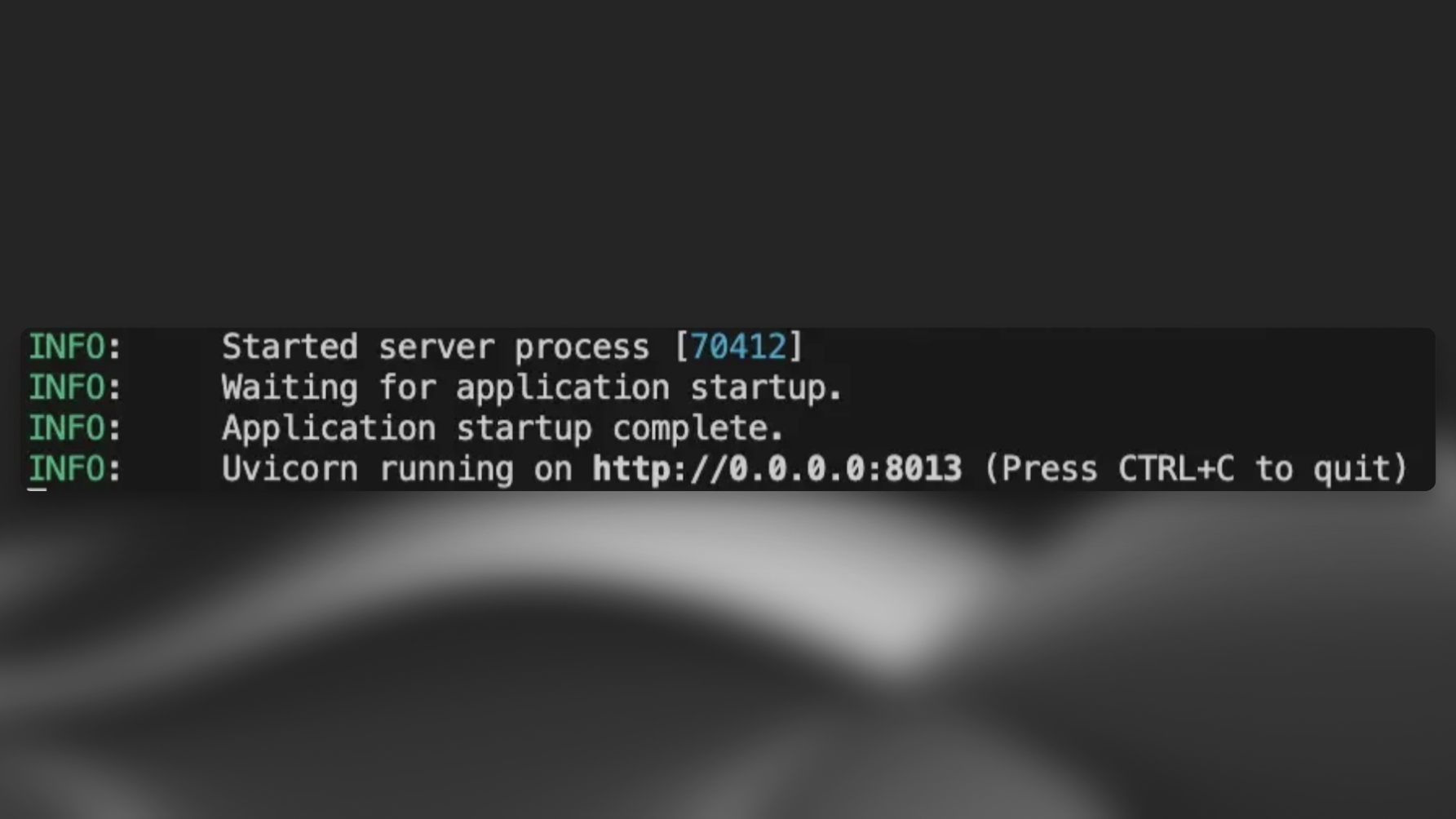
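The example above uses FastAPI and the OpenAI SDK. If you want to see the moving parts without installing either, the following sketch uses only Python's standard library. It accepts a POST on `/v1/chat/completions` (an assumed path that mirrors OpenAI's) and streams a canned reply as server-sent events in OpenAI's `chat.completion.chunk` format, ending with `data: [DONE]`. The port 8013, the model name, and the canned reply are placeholders; a real server would forward the messages to an LLM instead.

```python
import json
import time
import uuid
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


def sse_chunks(pieces, model="custom-llm"):
    """Yield OpenAI-style chat.completion.chunk events as server-sent event lines."""
    completion_id = f"chatcmpl-{uuid.uuid4().hex}"
    created = int(time.time())
    for i, piece in enumerate(pieces):
        delta = {"content": piece}
        if i == 0:
            delta["role"] = "assistant"  # the first chunk announces the role
        chunk = {
            "id": completion_id,
            "object": "chat.completion.chunk",
            "created": created,
            "model": model,
            "choices": [{"index": 0, "delta": delta, "finish_reason": None}],
        }
        yield f"data: {json.dumps(chunk)}\n\n"
    # The final chunk carries an empty delta and the finish reason.
    final = {
        "id": completion_id,
        "object": "chat.completion.chunk",
        "created": created,
        "model": model,
        "choices": [{"index": 0, "delta": {}, "finish_reason": "stop"}],
    }
    yield f"data: {json.dumps(final)}\n\n"
    yield "data: [DONE]\n\n"


class ChatCompletionsHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/v1/chat/completions":  # assumed path
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        request = json.loads(self.rfile.read(length) or b"{}")
        # Placeholder reply; a real server would call its LLM with request["messages"].
        pieces = ["Hello! ", "This reply comes from a custom LLM server."]
        self.send_response(200)
        self.send_header("Content-Type", "text/event-stream")
        self.send_header("Cache-Control", "no-cache")
        self.end_headers()
        for event in sse_chunks(pieces, model=request.get("model", "custom-llm")):
            self.wfile.write(event.encode("utf-8"))
            self.wfile.flush()


if __name__ == "__main__":
    ThreadingHTTPServer(("0.0.0.0", 8013), ChatCompletionsHandler).serve_forever()
```

Because the handler speaks HTTP/1.0 (the `http.server` default), the connection closes after the last event, which marks the end of the stream for simple clients.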

Run this code or your own server code.

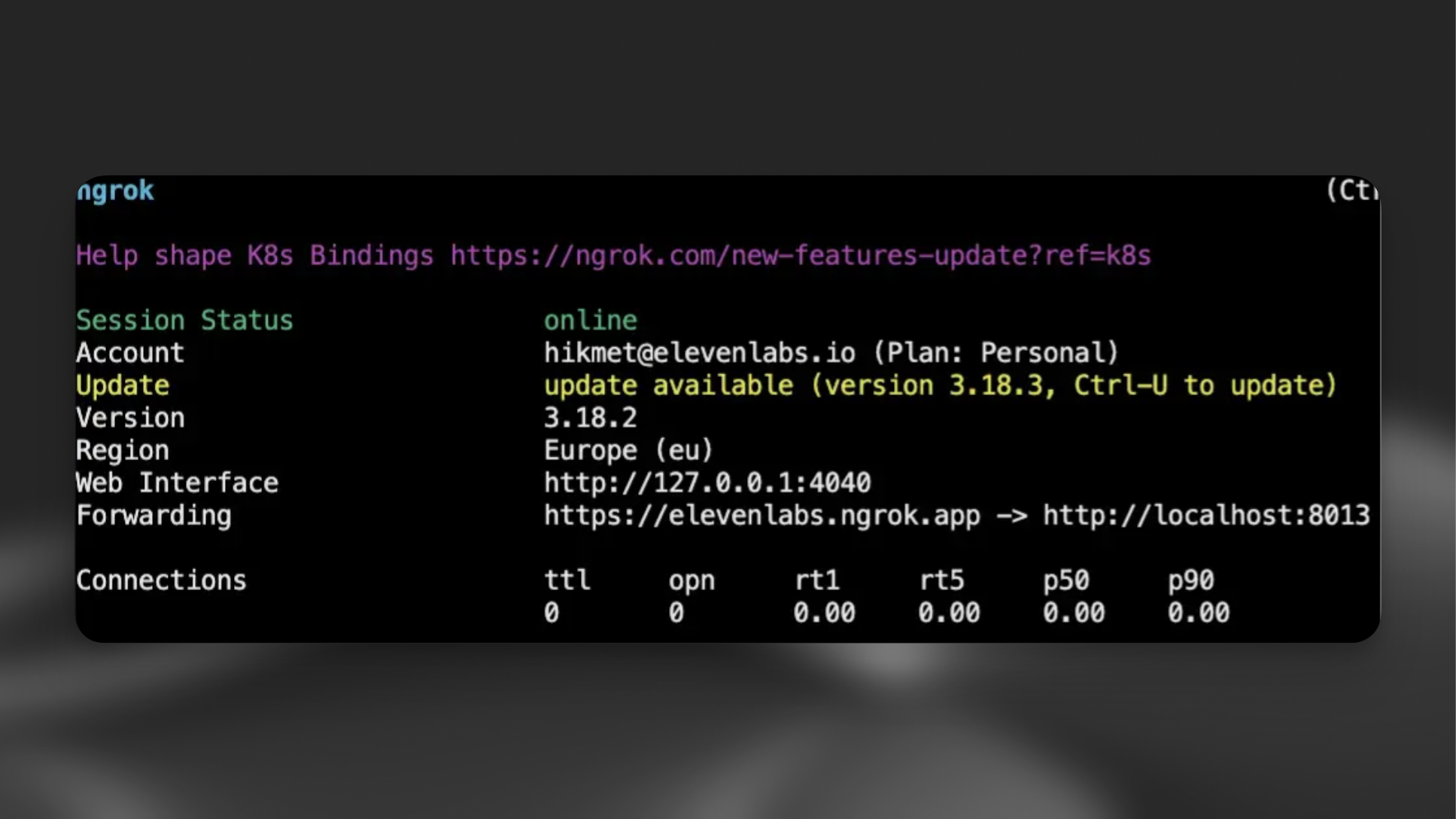

Setting Up a Public URL for Your Server

To make your server accessible, create a public URL using a tunneling tool like ngrok:

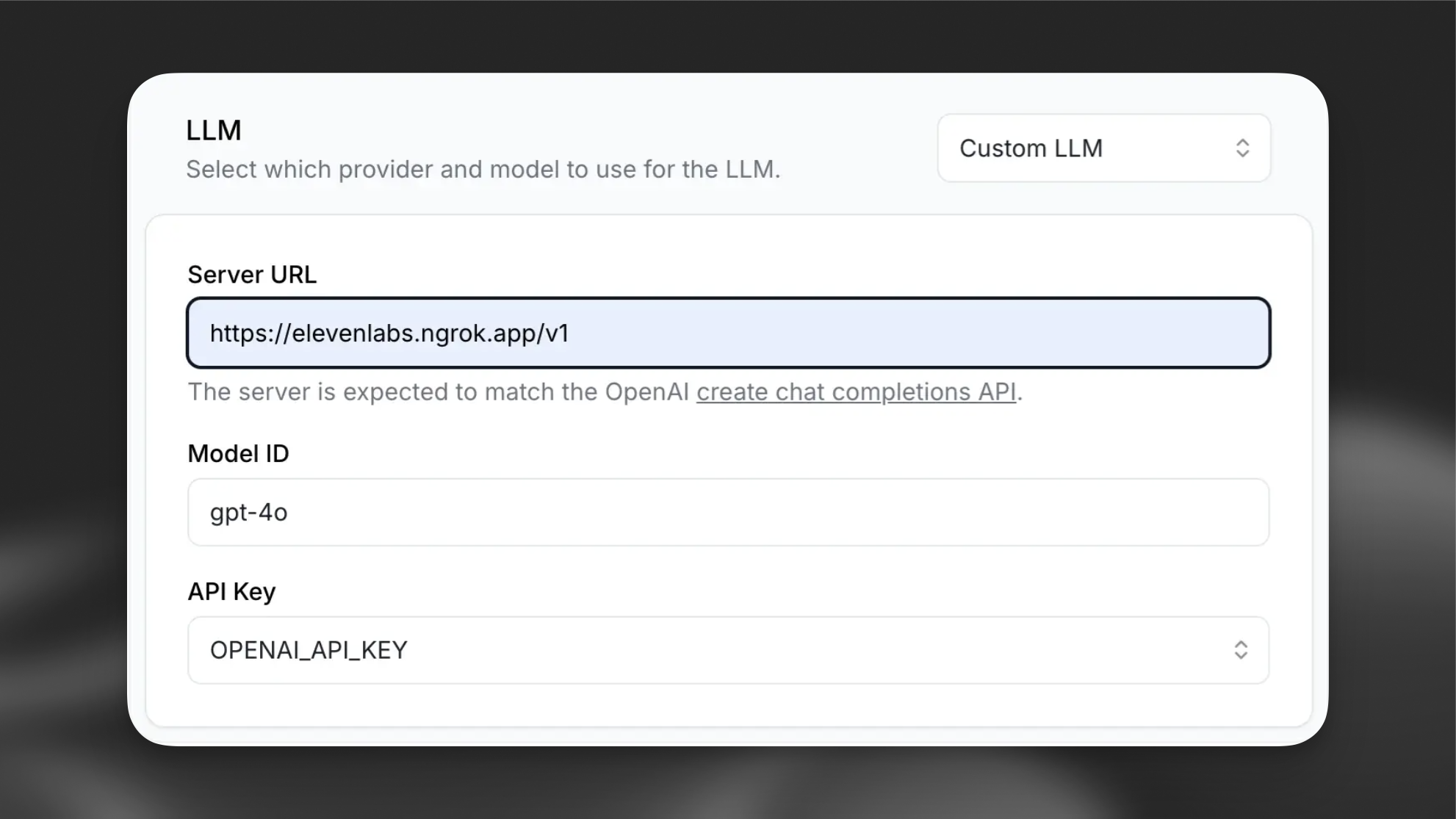

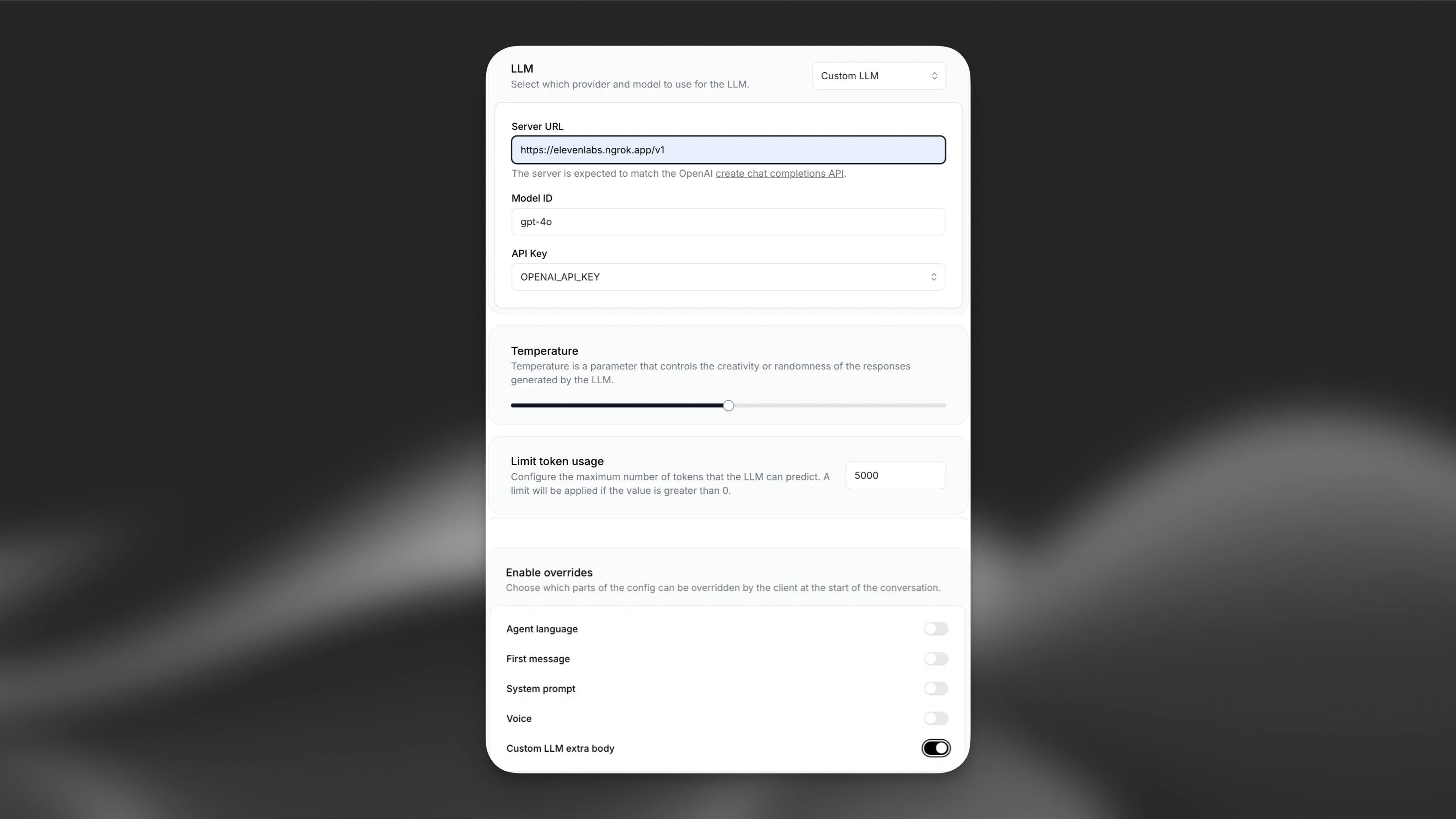
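Before entering the tunnel URL in ElevenLabs, it can help to confirm that the public endpoint actually answers. A minimal standard-library sketch, assuming your server exposes `/v1/chat/completions` under the tunnel's base URL (adjust the path to match your server). The base URL and model name below are placeholders.

```python
import json
import urllib.request


def build_smoke_test(base_url: str) -> urllib.request.Request:
    """Build a small streaming chat completion request against a custom LLM endpoint."""
    payload = {
        "model": "custom-llm",  # placeholder model name
        "stream": True,
        "messages": [{"role": "user", "content": "Say hello."}],
    }
    return urllib.request.Request(
        base_url.rstrip("/") + "/v1/chat/completions",  # assumed path
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def run_smoke_test(base_url: str, timeout: float = 10.0) -> str:
    """Send the request and return the raw server-sent event text."""
    with urllib.request.urlopen(build_smoke_test(base_url), timeout=timeout) as response:
        return response.read().decode("utf-8")


if __name__ == "__main__":
    # Replace with the HTTPS forwarding URL printed by your tunneling tool.
    print(run_smoke_test("https://your-subdomain.example.com"))
```

If the output ends with `data: [DONE]`, the tunnel and server are wired up correctly.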

Configuring ElevenLabs Custom LLM

Next, configure your agent in ElevenLabs.

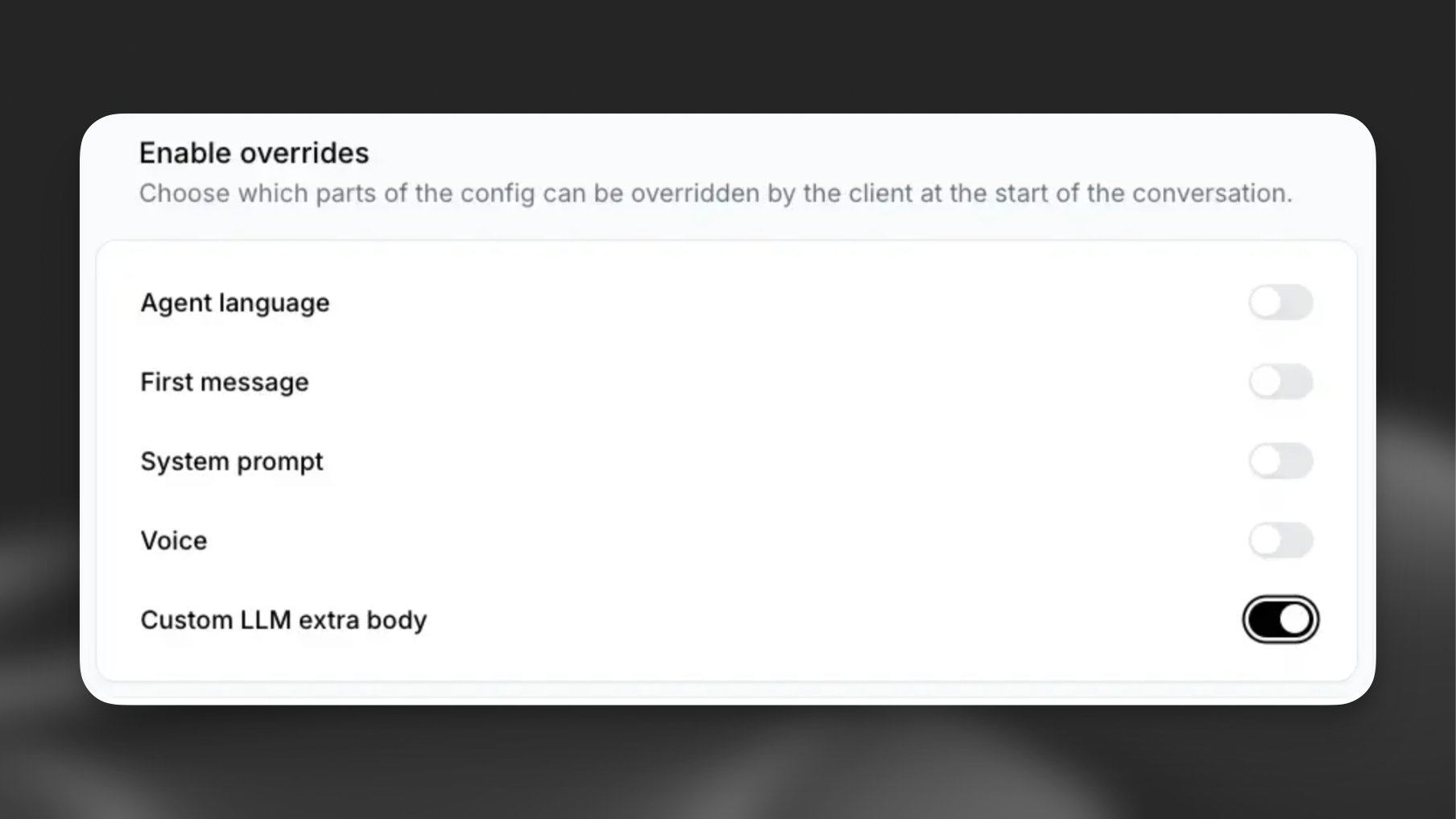

Set the server URL to your ngrok endpoint, set “Limit token usage” to 5000, and set “Custom LLM extra body” to true.

You can now interact with Conversational AI through your own LLM server.

Optimizing for slow-processing LLMs

If your custom LLM has slow processing times (perhaps due to agentic reasoning or pre-processing requirements), you can improve the conversational flow by adding buffer words to your streaming responses. This technique helps maintain natural speech prosody while your LLM generates the complete response.

Buffer words

When your LLM needs more time to process the full response, return an initial response ending with "... " (ellipsis followed by a space). This allows the Text to Speech system to maintain natural flow while keeping the conversation feeling dynamic.

This creates natural pauses that flow into the subsequent content, which the LLM can spend longer reasoning about. The trailing space is crucial: it ensures that the subsequent content is not appended directly to the "...", which can cause audio distortions.

Implementation

To implement buffer words, modify your custom LLM server so that it streams the buffer phrase first and then streams the full response once it is ready.
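A minimal sketch of this pattern with asyncio, assuming your server produces the reply as text pieces that it then wraps in OpenAI-style stream chunks. `slow_llm`, the buffer phrase, and the 0.1-second delay are hypothetical stand-ins; the only convention taken from this guide is that the first piece ends with `"... "`.

```python
import asyncio

BUFFER_PHRASE = "Let me think about that... "  # must end with "... " (ellipsis + space)


async def slow_llm(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call that takes a while (e.g. agentic reasoning)."""
    await asyncio.sleep(0.1)
    return f"Here is my considered answer to: {prompt}"


async def stream_with_buffer(prompt: str):
    """Yield a buffer phrase right away, then the full response once it is ready."""
    if not BUFFER_PHRASE.endswith("... "):
        raise ValueError('buffer phrase must end with "... " to avoid audio distortions')
    # Schedule the slow work first so it can progress while the buffer phrase is sent.
    task = asyncio.create_task(slow_llm(prompt))
    yield BUFFER_PHRASE
    yield await task


async def main():
    async for piece in stream_with_buffer("planning a trip to Lisbon"):
        print(repr(piece))


if __name__ == "__main__":
    asyncio.run(main())
```

Starting the LLM task before yielding the buffer phrase is the design choice that matters: the caller hears something immediately, and the real answer is already being computed in the meantime.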

System tools integration

Your custom LLM can trigger system tools to control conversation flow and state. These tools are automatically included in the tools parameter of your chat completion requests when configured in your agent.

How system tools work

- LLM Decision: Your custom LLM decides when to call these tools based on conversation context

- Tool Response: The LLM responds with function calls in standard OpenAI format

- Backend Processing: ElevenLabs processes the tool calls and updates conversation state
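To make the "standard OpenAI format" concrete: when streaming, an OpenAI-compatible server signals a function call by sending a `tool_calls` delta instead of `content`, then finishes with `finish_reason` set to `"tool_calls"`. A minimal sketch that emits such a stream for a single call; the tool name `end_call`, the call ID scheme, and the model name are illustrative assumptions.

```python
import json
import time
import uuid


def tool_call_events(name: str, arguments: dict, model: str = "custom-llm"):
    """Yield OpenAI-style server-sent events that stream a single tool call."""
    base = {
        "id": f"chatcmpl-{uuid.uuid4().hex}",
        "object": "chat.completion.chunk",
        "created": int(time.time()),
        "model": model,
    }
    call_delta = {
        "role": "assistant",
        "tool_calls": [{
            "index": 0,
            "id": f"call_{uuid.uuid4().hex[:24]}",
            "type": "function",
            # OpenAI encodes function arguments as a JSON string, not a nested object.
            "function": {"name": name, "arguments": json.dumps(arguments)},
        }],
    }
    first = {**base, "choices": [{"index": 0, "delta": call_delta, "finish_reason": None}]}
    last = {**base, "choices": [{"index": 0, "delta": {}, "finish_reason": "tool_calls"}]}
    yield f"data: {json.dumps(first)}\n\n"
    yield f"data: {json.dumps(last)}\n\n"
    yield "data: [DONE]\n\n"


if __name__ == "__main__":
    for event in tool_call_events("end_call", {"reason": "User said goodbye"}):
        print(event, end="")
```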

For more information on system tools, see our system tools guide.

Available system tools

End call

Purpose: Automatically terminate conversations when appropriate conditions are met.

Trigger conditions: The LLM should call this tool when:

- The main task has been completed and the user is satisfied

- The conversation has reached a natural conclusion with mutual agreement

- The user explicitly indicates they want to end the conversation

Parameters:

- reason (string, required): The reason for ending the call

- message (string, optional): A farewell message to send to the user before ending the call

Function call format:
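A minimal sketch of an OpenAI-format tool call for this tool, assuming it is exposed to your LLM under the name `end_call` (check the `tools` array in an actual request for the exact name). `end_call_tool_call` is a hypothetical helper, not an ElevenLabs function.

```python
import json
import uuid
from typing import Optional


def end_call_tool_call(reason: str, message: Optional[str] = None) -> dict:
    """Build an OpenAI-format tool call for the end call system tool (name assumed)."""
    if not reason:
        raise ValueError("'reason' is required")
    arguments = {"reason": reason}
    if message is not None:
        arguments["message"] = message  # optional farewell spoken before hanging up
    return {
        "id": f"call_{uuid.uuid4().hex[:24]}",
        "type": "function",
        "function": {"name": "end_call", "arguments": json.dumps(arguments)},
    }


if __name__ == "__main__":
    print(end_call_tool_call("User confirmed the booking", "Thanks for calling, goodbye!"))
```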

Implementation: Configure as a system tool in your agent settings. The LLM will receive detailed instructions about when to call this function.

Learn more: End call tool

Language detection

Purpose: Automatically switch to the user’s detected language during conversations.

Trigger conditions: The LLM should call this tool when:

- User speaks in a different language than the current conversation language

- User explicitly requests to switch languages

- Multi-language support is needed for the conversation

Parameters:

- reason (string, required): The reason for the language switch

- language (string, required): The language code to switch to (must be in supported languages list)

Function call format:
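A minimal sketch that also enforces the "must be in supported languages list" rule before emitting the call. The tool name `language_detection` and the `SUPPORTED_LANGUAGES` set are assumptions; mirror whatever your agent settings and incoming `tools` array actually contain.

```python
import json
import uuid

# Hypothetical list; mirror the supported languages configured in your agent settings.
SUPPORTED_LANGUAGES = {"en", "es", "fr", "de"}


def language_detection_tool_call(reason: str, language: str) -> dict:
    """Build a language detection tool call, rejecting codes outside the supported list."""
    if not reason:
        raise ValueError("'reason' is required")
    if language not in SUPPORTED_LANGUAGES:
        raise ValueError(f"language {language!r} is not in the supported languages list")
    return {
        "id": f"call_{uuid.uuid4().hex[:24]}",
        "type": "function",
        "function": {
            "name": "language_detection",  # assumed tool name
            "arguments": json.dumps({"reason": reason, "language": language}),
        },
    }


if __name__ == "__main__":
    print(language_detection_tool_call("User switched to Spanish", "es"))
```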

Implementation: Configure supported languages in agent settings and add the language detection system tool. The agent will automatically switch voice and responses to match detected languages.

Learn more: Language detection tool

Agent transfer

Purpose: Transfer conversations between specialized AI agents based on user needs.

Trigger conditions: The LLM should call this tool when:

- User request requires specialized knowledge or different agent capabilities

- Current agent cannot adequately handle the query

- Conversation flow indicates need for different agent type

Parameters:

- reason (string, optional): The reason for the agent transfer

- agent_number (integer, required): Zero-indexed number of the agent to transfer to (based on configured transfer rules)

Function call format:
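A minimal sketch showing how the zero-indexed `agent_number` lines up with an ordered list of transfer targets. The tool name `transfer_to_agent` and the placeholder agent IDs in `TRANSFER_TARGETS` are assumptions for illustration.

```python
import json
import uuid
from typing import Optional

# Hypothetical transfer targets, in the order configured in your transfer rules.
TRANSFER_TARGETS = ["billing-agent-id", "technical-support-agent-id"]


def transfer_to_agent_tool_call(agent_number: int, reason: Optional[str] = None) -> dict:
    """Build an agent transfer tool call; agent_number is a zero-based index into TRANSFER_TARGETS."""
    if isinstance(agent_number, bool) or not isinstance(agent_number, int):
        raise TypeError("'agent_number' must be an integer")
    if not 0 <= agent_number < len(TRANSFER_TARGETS):
        raise ValueError(f"no configured agent at index {agent_number}")
    arguments = {"agent_number": agent_number}
    if reason is not None:
        arguments["reason"] = reason
    return {
        "id": f"call_{uuid.uuid4().hex[:24]}",
        "type": "function",
        "function": {"name": "transfer_to_agent", "arguments": json.dumps(arguments)},  # assumed name
    }


if __name__ == "__main__":
    # Index 1 refers to the second configured target (technical support).
    print(transfer_to_agent_tool_call(1, "User needs help resetting a router"))
```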

Implementation: Define transfer rules mapping conditions to specific agent IDs. Configure which agents the current agent can transfer to. Agents are referenced by zero-indexed numbers in the transfer configuration.

Learn more: Agent transfer tool

Transfer to human

Purpose: Seamlessly hand off conversations to human operators when AI assistance is insufficient.

Trigger conditions: The LLM should call this tool when:

- The issue is complex and requires human judgment

- User explicitly requests human assistance

- The AI has reached the limits of its capabilities for the specific request

- Escalation protocols are triggered

Parameters:

- reason (string, optional): The reason for the transfer

- transfer_number (string, required): The phone number to transfer to (must match configured numbers)

- client_message (string, required): Message read to the client while waiting for transfer

- agent_message (string, required): Message for the human operator receiving the call

Function call format:
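A minimal sketch that checks the required fields and the "must match configured numbers" rule before emitting the call. The tool name `transfer_to_number` and the fictional 555 numbers in `CONFIGURED_NUMBERS` are assumptions.

```python
import json
import uuid
from typing import Optional

# Hypothetical numbers; these must match the transfer numbers configured for your agent.
CONFIGURED_NUMBERS = {"+15555550100", "+15555550101"}


def transfer_to_number_tool_call(
    transfer_number: str,
    client_message: str,
    agent_message: str,
    reason: Optional[str] = None,
) -> dict:
    """Build a transfer-to-human tool call after checking the required fields."""
    if transfer_number not in CONFIGURED_NUMBERS:
        raise ValueError(f"{transfer_number!r} is not a configured transfer number")
    if not client_message or not agent_message:
        raise ValueError("'client_message' and 'agent_message' are both required")
    arguments = {
        "transfer_number": transfer_number,
        "client_message": client_message,  # read to the caller while they wait
        "agent_message": agent_message,    # context for the human operator
    }
    if reason is not None:
        arguments["reason"] = reason
    return {
        "id": f"call_{uuid.uuid4().hex[:24]}",
        "type": "function",
        "function": {"name": "transfer_to_number", "arguments": json.dumps(arguments)},  # assumed name
    }


if __name__ == "__main__":
    print(transfer_to_number_tool_call(
        "+15555550100", "Connecting you to a specialist now.", "Caller is asking about a refund."
    ))
```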

Implementation: Configure transfer phone numbers and conditions. Define messages for both customer and receiving human operator. Works with both Twilio and SIP trunking.

Learn more: Transfer to human tool

Skip turn

Purpose: Allow the agent to pause and wait for user input without speaking.

Trigger conditions: The LLM should call this tool when:

- User indicates they need a moment (“Give me a second”, “Let me think”)

- User requests a pause in the conversation

- Agent detects user needs time to process information

Parameters:

- reason (string, optional): Free-form reason explaining why the pause is needed

Function call format:
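For readability, this sketch shows the call inside a complete non-streaming `chat.completion` response; a streaming server would put the same `tool_calls` entry in a chunk delta instead. The tool name `skip_turn` and the model name are assumptions. Note that `content` is empty because the agent is meant to stay silent.

```python
import json
import time
import uuid
from typing import Optional


def skip_turn_completion(reason: Optional[str] = None, model: str = "custom-llm") -> dict:
    """Build a non-streaming chat.completion whose only output is a skip turn tool call."""
    arguments = {} if reason is None else {"reason": reason}
    return {
        "id": f"chatcmpl-{uuid.uuid4().hex}",
        "object": "chat.completion",
        "created": int(time.time()),
        "model": model,
        "choices": [{
            "index": 0,
            "message": {
                "role": "assistant",
                "content": None,  # nothing is spoken; the agent waits for the user
                "tool_calls": [{
                    "id": f"call_{uuid.uuid4().hex[:24]}",
                    "type": "function",
                    "function": {"name": "skip_turn", "arguments": json.dumps(arguments)},  # assumed name
                }],
            },
            "finish_reason": "tool_calls",
        }],
    }


if __name__ == "__main__":
    print(json.dumps(skip_turn_completion("User asked for a moment to find their card"), indent=2))
```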

Implementation: No additional configuration needed. The tool simply signals the agent to remain silent until the user speaks again.

Learn more: Skip turn tool

Voicemail detection

Custom LLM integration

Parameters:

- reason (string, required): The reason for detecting voicemail (e.g., “automated greeting detected”, “no human response”)

Function call format:
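In a streamed response, a tool call's JSON-encoded `arguments` string may arrive split across several deltas: the first delta carries the ID, type, and function name, and later deltas carry only further pieces of the arguments. A minimal sketch of both sides, assuming the tool is named `voicemail_detection`; the fixed call ID and fragment size are illustrative.

```python
import json


def voicemail_argument_deltas(reason: str, fragment_size: int = 8) -> list:
    """Split a voicemail detection call into OpenAI-style streamed tool_call deltas."""
    if not reason:
        raise ValueError("'reason' is required")
    arguments = json.dumps({"reason": reason})
    deltas = [{
        "index": 0,
        "id": "call_voicemail_1",  # illustrative id
        "type": "function",
        "function": {"name": "voicemail_detection", "arguments": ""},  # assumed name
    }]
    for start in range(0, len(arguments), fragment_size):
        deltas.append({"index": 0, "function": {"arguments": arguments[start:start + fragment_size]}})
    return deltas


def reassemble(deltas: list) -> dict:
    """Concatenate streamed deltas back into one complete tool call, as a client would."""
    call = {"id": None, "name": None, "arguments": ""}
    for delta in deltas:
        call["id"] = delta.get("id", call["id"])
        function = delta.get("function", {})
        call["name"] = function.get("name", call["name"])
        call["arguments"] += function.get("arguments", "")
    return call


if __name__ == "__main__":
    print(reassemble(voicemail_argument_deltas("automated greeting detected")))
```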

Learn more: Voicemail detection tool

Example Request with System Tools

When system tools are configured, your custom LLM will receive requests that include the tools in the standard OpenAI format.
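An illustrative request body of that shape, plus a hypothetical helper that lists which functions were offered and their required parameters. The tool names, descriptions, and JSON schemas here are assumptions; log a real incoming request to see exactly what your agent sends.

```python
import json

# Illustrative request body: messages plus system tools in OpenAI "tools" format.
# Tool names, descriptions, and schemas are assumptions, not a captured request.
EXAMPLE_REQUEST = {
    "model": "custom-llm",
    "stream": True,
    "messages": [
        {"role": "system", "content": "You are a helpful support agent."},
        {"role": "user", "content": "That's everything, thanks. Bye!"},
    ],
    "tools": [
        {
            "type": "function",
            "function": {
                "name": "end_call",
                "description": "End the call when the conversation is complete.",
                "parameters": {
                    "type": "object",
                    "properties": {
                        "reason": {"type": "string"},
                        "message": {"type": "string"},
                    },
                    "required": ["reason"],
                },
            },
        },
        {
            "type": "function",
            "function": {
                "name": "skip_turn",
                "description": "Stay silent until the user speaks again.",
                "parameters": {
                    "type": "object",
                    "properties": {"reason": {"type": "string"}},
                },
            },
        },
    ],
}


def offered_tools(request: dict) -> dict:
    """Map each offered function name to its list of required parameters."""
    return {
        tool["function"]["name"]: tool["function"].get("parameters", {}).get("required", [])
        for tool in request.get("tools", [])
        if tool.get("type") == "function"
    }


if __name__ == "__main__":
    print(json.dumps(offered_tools(EXAMPLE_REQUEST)))
```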

Your custom LLM must support function calling to use system tools. Ensure your model can generate proper function call responses in OpenAI format.

Additional Features

Custom LLM Parameters

You may pass additional parameters to your custom LLM implementation.
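When “Custom LLM extra body” is enabled, your server presumably receives these additional parameters alongside the standard chat completion fields. A minimal sketch that separates them before forwarding the rest upstream, since an upstream API such as OpenAI's may reject unrecognized fields. The key name `elevenlabs_extra_body` and the `customer_tier` parameter are assumptions; confirm the actual key by logging an incoming request.

```python
# Assumed key name: check a logged incoming request to confirm what your agent sends.
EXTRA_BODY_KEY = "elevenlabs_extra_body"


def split_extra_body(request: dict) -> tuple:
    """Separate custom parameters from the standard chat completion fields.

    Returns (forwardable_request, extra_params) and leaves the input dict unchanged.
    """
    forwarded = dict(request)
    extra = forwarded.pop(EXTRA_BODY_KEY, None) or {}
    return forwarded, extra


if __name__ == "__main__":
    incoming = {
        "model": "custom-llm",
        "messages": [{"role": "user", "content": "Hi"}],
        EXTRA_BODY_KEY: {"customer_tier": "premium"},  # hypothetical custom parameter
    }
    request, extra = split_extra_body(incoming)
    print(sorted(request), extra)
```

Your server can then use the extra parameters (for example, to pick a model or adjust the system prompt) while forwarding only the standard fields.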