
ElevenLabs partners with the UK Government on voice AI safety research

UK AI Security Institute researchers will explore the implications of AI voice technology

Patterns for integrating ElevenLabs voice orchestration with complex and stateful agents

Frontier agent orchestrators are increasingly able to handle complex tasks and operate across the suite of enterprise tooling. This requires diligent management of application, conversation and system state. For modalities other than voice, common patterns have emerged under the umbrella term of context engineering, which aims to build consistent practices around an agent’s system prompt as an interaction progresses. Adding voice introduces an additional layer of state for managing the components of the voice interaction, but ideally it also permits the reuse of artifacts from previous work on other modalities.

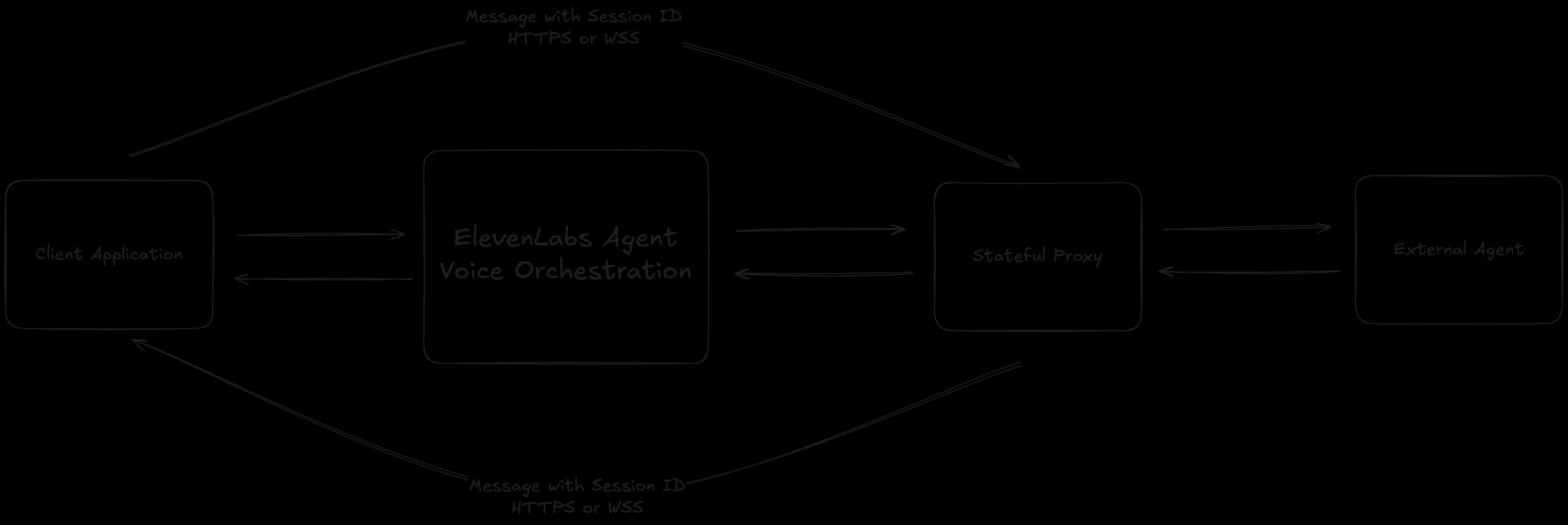

In this post, we outline how ElevenLabs Agents support external agents and the patterns that enable fine-grained control over their integration. These mechanisms allow customers to leverage ElevenLabs’ best-in-class voice orchestration while maintaining full ownership of their broader orchestration.

ElevenLabs Agents

In its simplest form, an ElevenLabs Agent is accessible through a WebSocket client. Information representing server and client events in the conversation passes to and from the agent as JSON objects. When the agent transcribes user speech, it greedily triggers a generation request. We support most major model providers and allow customers to bring their own Custom LLM. When placing a more complex orchestrator, such as an external agent, behind the Custom LLM to answer generation requests, customers must ensure that it supports either OpenAI’s Chat Completions or Responses API. Luckily, this API format is readily supported by most major agent building frameworks (CrewAI, LangChain, LangGraph, Haystack, LlamaIndex, ...).
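To make the Chat Completions requirement concrete, here is a minimal sketch of how an external agent might frame its streamed output. The chunk fields follow OpenAI's published streaming format; the function name `sse_chunks` and the default model label `external-agent` are placeholders chosen for illustration, not part of any ElevenLabs or OpenAI SDK.

```python
import json
import time
import uuid
from typing import Iterable, Iterator, Optional


def sse_chunks(tokens: Iterable[str], model: str = "external-agent") -> Iterator[str]:
    """Yield Server-Sent Event frames shaped like Chat Completions stream chunks."""
    completion_id = f"chatcmpl-{uuid.uuid4().hex}"
    created = int(time.time())

    def frame(delta: dict, finish_reason: Optional[str] = None) -> str:
        payload = {
            "id": completion_id,
            "object": "chat.completion.chunk",
            "created": created,
            "model": model,
            "choices": [{"index": 0, "delta": delta, "finish_reason": finish_reason}],
        }
        return f"data: {json.dumps(payload)}\n\n"

    # The first frame announces the assistant role, then one frame per token.
    yield frame({"role": "assistant"})
    for token in tokens:
        yield frame({"content": token})
    # An empty delta with finish_reason closes the message; [DONE] ends the stream.
    yield frame({}, finish_reason="stop")
    yield "data: [DONE]\n\n"
```

In a real service these frames would be written to an HTTP response with a `text/event-stream` content type by whichever web framework hosts the external agent.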

Once integrated, these agents often require the ability to read and update their internal and external state at any given time regardless of the voice orchestrator they sit behind. Managing this effectively ensures consistency with existing text-only agents.

State management

By definition, the data an agent must track to navigate its environment efficiently is highly task-specific. For ElevenLabs Agents powered by an external agent, it is useful to maintain state across a few well-defined categories.

Internal state governs the dynamics of the conversation. Examples of elements tracked as part of the agent’s internal state include:

External state, on the other hand, primarily focuses on relevant systems and individuals the agent interacts with or influences. Examples of elements tracked as part of the agent’s external state include:

We outline a common pattern for reliably maintaining this information throughout the lifecycle of an agent’s relationship with a user.

Overview

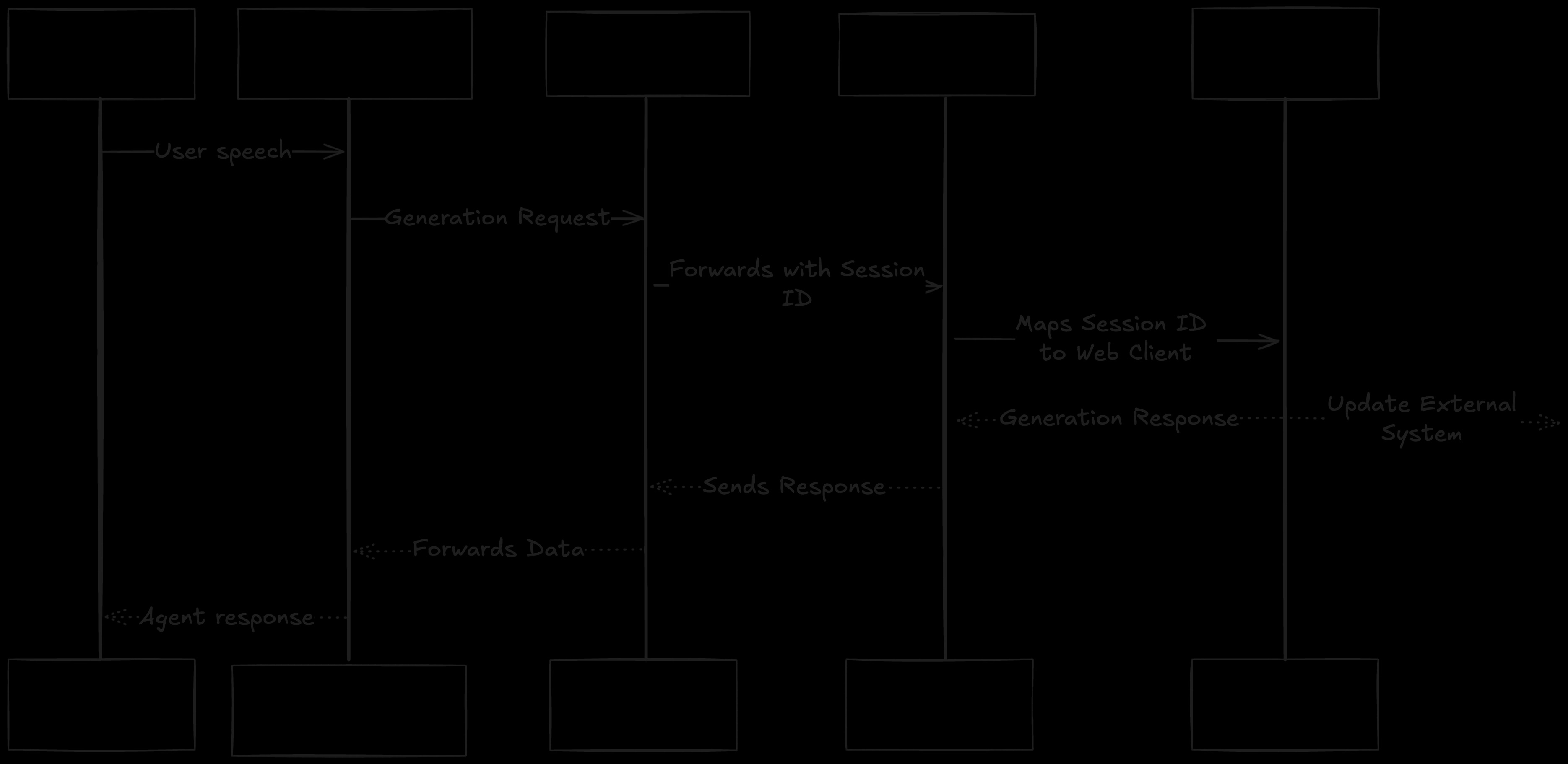

In this section, we cover the architecture components and implementation details required to successfully integrate complex external agents. At the core of this approach is the ability to propagate an arbitrary but unique identifier representing a session across all services. For ElevenLabs Agents using custom LLMs, this can be done by passing the identifier as an LLM parameter within the extra body object, which is sent as part of the conversation overrides during call initiation. Doing this allows the identifier to flow through the ElevenLabs Agent from the user down to the external agent.
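As a rough illustration, the client could attach the identifier when it opens the conversation. In the sketch below, the message type `conversation_initiation_client_data` and the field `custom_llm_extra_body` reflect our reading of the ElevenLabs conversation initiation message and should be checked against the current API reference; the key `session_id` and the function name are hypothetical.

```python
import json
import uuid


def build_initiation_message(session_id: str) -> str:
    """Serialize a conversation initiation message carrying the session identifier.

    Assumption: the extra body object is forwarded to the custom LLM with
    each generation request. `session_id` is a key name chosen for this sketch.
    """
    message = {
        "type": "conversation_initiation_client_data",
        "custom_llm_extra_body": {"session_id": session_id},
    }
    return json.dumps(message)


# The application mints the identifier before the call starts, so the same
# value can be shared with every other service involved in the session.
session_id = str(uuid.uuid4())
initiation_message = build_initiation_message(session_id)
```

The client would send `initiation_message` as the first message on the conversation WebSocket, before any audio.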

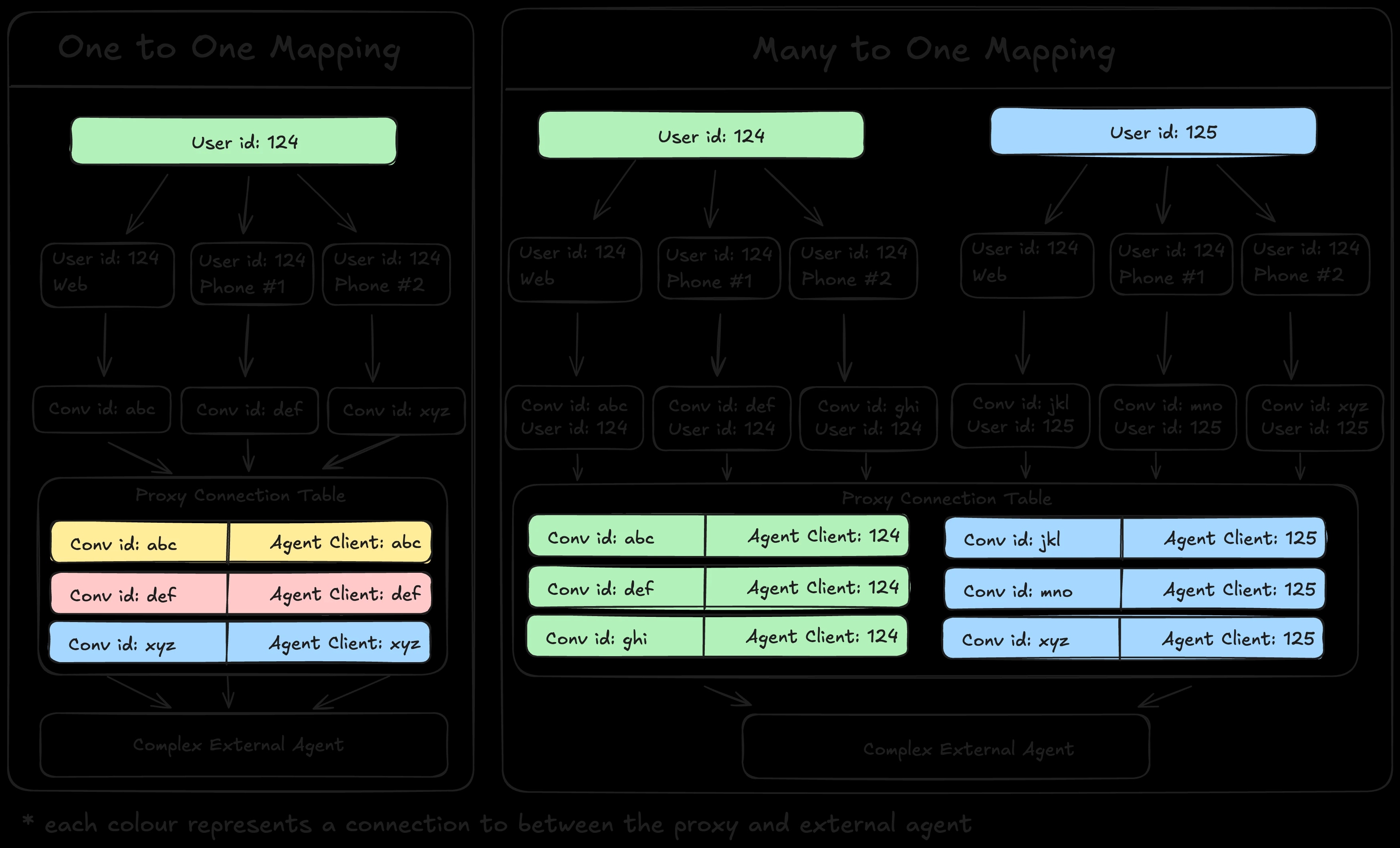

Notice the stateful proxy behind the custom LLM. This service, which is not generally present, allows us to map individual generation requests to arbitrary identifiers representing connections with the external agent. Ownership of the implementation of this service is in the hands of the external agent developers. In its simplest form, the proxy manages connections represented by unique identifiers mapping to ElevenLabs conversations or call SIDs (for telephony). More advanced versions can introduce hierarchy, mapping conversations to more intricate customer relationships that span multiple interactions.
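The simplest form of the proxy can be sketched as a lookup from session identifier to a long-lived connection. This is an illustrative outline only: `StatefulProxy` and `AgentConnection` are hypothetical names, and reading the identifier from an `elevenlabs_extra_body` field of the incoming request is an assumption about how the extra body reaches the custom LLM endpoint.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class AgentConnection:
    """Hypothetical handle for one long-lived connection to the external agent."""
    session_id: str
    turns: List[dict] = field(default_factory=list)


class StatefulProxy:
    """Maps each generation request to the connection that owns its session."""

    def __init__(self) -> None:
        self._connections: Dict[str, AgentConnection] = {}

    def resolve(self, request_body: dict) -> AgentConnection:
        # Assumption: the extra body arrives under `elevenlabs_extra_body`.
        extra = request_body.get("elevenlabs_extra_body") or {}
        session_id = extra.get("session_id")
        if not session_id:
            raise ValueError("generation request carries no session identifier")
        # Create the connection on the first request, reuse it afterwards.
        if session_id not in self._connections:
            self._connections[session_id] = AgentConnection(session_id)
        return self._connections[session_id]
```

Every generation request for the same session then lands on the same connection, which is what lets the external agent keep its own state between turns.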

In these more advanced configurations, the proxy maintains additional identifiers that go beyond a single request tied to a single downstream session. Instead of each identifier representing only one conversation or call SID, the proxy can associate a single identifier with multiple related interactions. This allows the system to follow customer journeys that move across channels, reuse historical context, and coordinate several interactions at once. For example, a single mapping may group multiple web chat sessions, a follow-up voice call, and an internal support workflow under the same logical customer identifier. The proxy can then route requests to the correct identifier based on simple rules while preserving a unified state behind the custom LLM. This enables more flexible and persistent multi-step interactions managed by the external agent.
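One way to picture this hierarchy is an index that groups interactions under a logical customer identifier. The sketch below is illustrative; `Interaction`, `CustomerJourneyIndex`, and the channel labels are hypothetical names, and a production proxy would back this with durable storage rather than in-memory dictionaries.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Interaction:
    interaction_id: str  # e.g. a conversation ID or a call SID
    channel: str         # e.g. "web_chat", "voice", "support_workflow"


class CustomerJourneyIndex:
    """Groups several interactions under one logical customer identifier."""

    def __init__(self) -> None:
        self._by_customer: Dict[str, List[Interaction]] = defaultdict(list)
        self._owner: Dict[str, str] = {}

    def attach(self, customer_id: str, interaction: Interaction) -> None:
        owner = self._owner.get(interaction.interaction_id)
        if owner is not None and owner != customer_id:
            raise ValueError("interaction already belongs to another customer")
        if owner is None:
            self._owner[interaction.interaction_id] = customer_id
            self._by_customer[customer_id].append(interaction)

    def customer_for(self, interaction_id: str) -> Optional[str]:
        """Route an incoming request to its logical customer, if known."""
        return self._owner.get(interaction_id)

    def interactions_for(
        self, customer_id: str, channel: Optional[str] = None
    ) -> List[Interaction]:
        # .get avoids creating empty entries for unknown customers.
        items = self._by_customer.get(customer_id, [])
        return [i for i in items if channel is None or i.channel == channel]
```

With this shape, a follow-up voice call can be attached to the same customer as earlier web chat sessions, and the external agent can be handed the full list of related interactions when it needs historical context.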

Message passing

Beyond successfully mapping generation requests to higher order entities, the stateful proxy can support bidirectional message passing to external sources such as the application frontend or a separate router service through API requests. In applications where this is necessary, ElevenLabs Agents do not require awareness that messages are being passed to other services.

For instance, it’s often useful for external agents to have visibility into ongoing voice activity, so that they can determine whether the user is speaking, for how long, and whether they should take any action preemptively. These insights can be derived and acted on by passing along the processed voice activity detection (VAD) scores that ElevenLabs Agents provide as client events over the conversation WebSocket. When receiving scores from ElevenLabs, the client application can forward VAD client events to the stateful proxy according to application requirements, ensuring that each message includes the arbitrary session identifier. The stateful proxy must implement request mapping logic that reliably identifies the existing connection for the session.
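A minimal sketch of both halves of this flow follows. On the client side, a VAD event is wrapped with the session identifier; on the proxy side, a small tracker estimates how long the user has been speaking. The event shape (`type` of `vad_score` with a nested `vad_score_event.vad_score`) is our understanding of the client event and should be verified against the API reference. The envelope fields, the 0.5 threshold, and all names are assumptions made for illustration.

```python
from typing import Optional


def wrap_vad_event(event: dict, session_id: str) -> Optional[dict]:
    """Client side: turn a VAD client event into a message for the proxy."""
    if event.get("type") != "vad_score":
        return None
    score = (event.get("vad_score_event") or {}).get("vad_score")
    if score is None:
        return None
    # The session identifier lets the proxy find the existing connection.
    return {"session_id": session_id, "kind": "vad", "score": float(score)}


class SpeechTracker:
    """Proxy side: tracks how long the user has been speaking continuously."""

    def __init__(self, threshold: float = 0.5) -> None:
        self.threshold = threshold  # assumed cut-off, tune per application
        self._started_at: Optional[float] = None

    def update(self, score: float, now: float) -> float:
        """Return the current continuous speaking time in seconds."""
        if score >= self.threshold:
            if self._started_at is None:
                self._started_at = now
            return now - self._started_at
        # A score below the threshold ends the current speech segment.
        self._started_at = None
        return 0.0
```

The external agent could use the returned duration to decide, for example, whether to start preparing a response before the transcript arrives.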

This pattern can be extended to accommodate any event from the client, provided that it can be expressed as a block of JSON. However, it is also useful to expose events that originate from the agent itself. A common example involves the lifecycle of tool calls or knowledge base queries that represent operations on external systems. These mechanisms are fundamental to the agents enterprises are building today.

When integrating external agents through a custom LLM, ElevenLabs’ tool calling and retrieval augmented generation (RAG) features are often bypassed in favour of the external agent’s own implementation. As a result, ownership of these components lies entirely with the external agent provider. Applications still benefit from visibility into tool activity, since it enables them to surface the agent’s progress and update the end-user experience accordingly.

To provide this visibility, the external agent emits messages whenever tools are invoked, both for requests and responses. These messages are forwarded by the stateful proxy to client applications, which handle them through a dedicated message queue. This mirrors the mechanisms used by ElevenLabs Agents’ client events and ensures that applications can track when the agent reads from or modifies an external system.
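The tool lifecycle messages described above might look like the following. This is a sketch under stated assumptions: the envelope fields, the `agent_tool_event` type, and the `ClientEventQueue` class are hypothetical and do not correspond to an existing ElevenLabs event.

```python
import json
import queue
from typing import Any, List


def tool_event(session_id: str, tool_name: str, phase: str, payload: Any) -> str:
    """Agent side: serialize one tool lifecycle message for the proxy to forward."""
    if phase not in ("request", "response"):
        raise ValueError("phase must be 'request' or 'response'")
    return json.dumps({
        "session_id": session_id,
        "type": "agent_tool_event",  # hypothetical event type
        "tool_name": tool_name,
        "phase": phase,
        "payload": payload,
    })


class ClientEventQueue:
    """Client side: a dedicated queue for messages forwarded by the proxy."""

    def __init__(self) -> None:
        self._queue: "queue.Queue[dict]" = queue.Queue()

    def push(self, raw_message: str) -> None:
        self._queue.put(json.loads(raw_message))

    def drain(self) -> List[dict]:
        """Return all pending events in arrival order without blocking."""
        events: List[dict] = []
        while True:
            try:
                events.append(self._queue.get_nowait())
            except queue.Empty:
                return events
```

A UI loop could call `drain()` on each render tick and show, for instance, a "looking up your order" indicator between the request and response events for a given tool.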

Together, these core components and the bidirectional passing of messages between the proxy and the client application allow customers to integrate external agents with ElevenLabs Agents, using only the voice orchestration ElevenLabs provides while retaining ownership over all parts of the LLM orchestration.

Linking it back to state

Supporting complex external agents effectively requires a clear division of responsibilities between the proxy and the agent, especially when it comes to state management. In this model, the proxy is responsible for maintaining a table of relevant interactions, grouped according to the needs of the application, and for routing messages between itself and the agent using logic that remains stateless. In turn, the external agent should handle and store all substantive internal and external pieces of information that contribute to the overall state.

Although relaxing this separation may further reduce rework of an existing solution, maintaining a strict boundary generally leads to more robust and scalable outcomes as the agent's task set grows.

As organizations mature in their adoption of voice and non-voice enabled agents, we expect patterns for the information required by these agents to crystallize, allowing us to simplify the development and ownership of the services described in this post. In the meantime, we are continuing to build for the requirements that have already emerged. Our Forward Deployed Engineering team is partnering closely with customers to translate these emerging needs into concrete product capabilities and ensure our solutions evolve in lockstep with real-world deployments.

If you are already working with an existing agent and are looking to enable voice with ElevenLabs Agents while retaining ownership over your LLM orchestration, try out this approach and let us know what you think!
