Introducing ElevenLabs Conversational AI 2.0

Conversational AI 2.0 launches with advanced features and enterprise readiness.

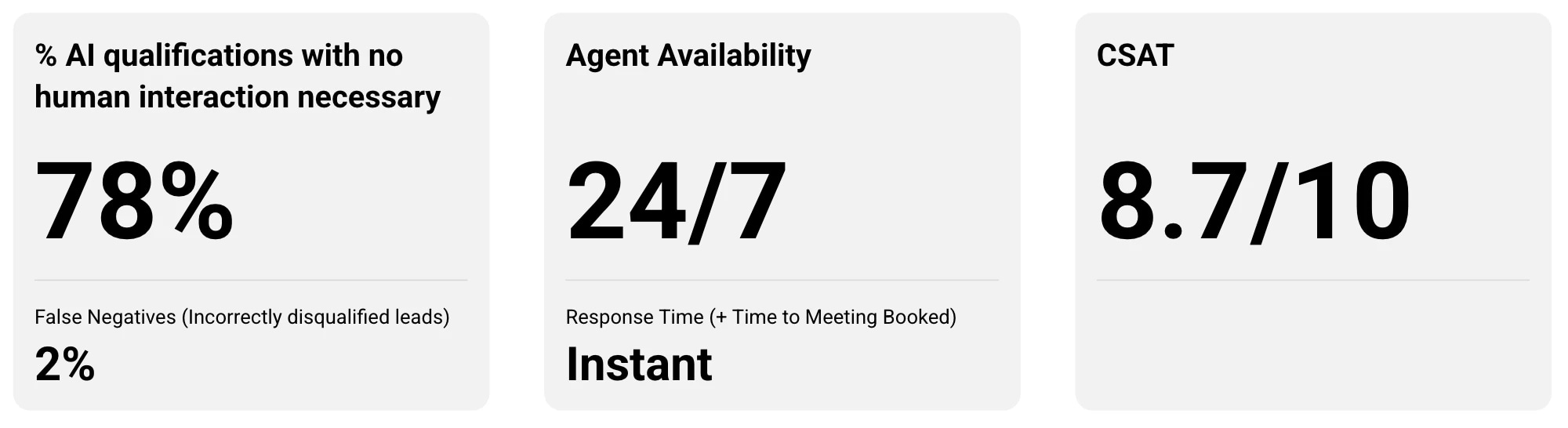

Available 24/7 in 30+ languages, the agent can respond and book meetings instantly

Every week, hundreds of people fill out the enterprise sales form on our website to learn more about our platform and pricing. However, most of these leads are better suited to our self-serve plans, which cater to the majority of our users and have detailed documentation on our website.

While our sales team would like to meet with every lead directly, their focus is on enterprise deployments that require customized solutions. So they manually review each submission to identify which leads are suited for an enterprise conversation and which are better guided toward self-serve. Many forms, however, include incomplete details, such as vague descriptions of the lead’s use case, so our team has to follow up for context before making a decision. This process slows down response times and takes focus away from higher-priority enterprise opportunities.

For enterprise-qualified leads, the delay from reviewing form submissions also means lost momentum. Ideally, an enterprise lead should be able to book a meeting as soon as they submit the contact sales form. Otherwise, if a form is submitted on Friday evening, the lead may not hear back until our sales team reviews it on Monday, which pushes the first meeting to later that week.

To close this gap between demand and team capacity, we built an inbound AI sales development representative (SDR) using our Agents Platform. The AI SDR delivers faster, more personalized experiences for inbound leads while enabling our team to focus on the most impactful opportunities.

We built the inbound SDR around three pillars: behavior, capabilities, and data.

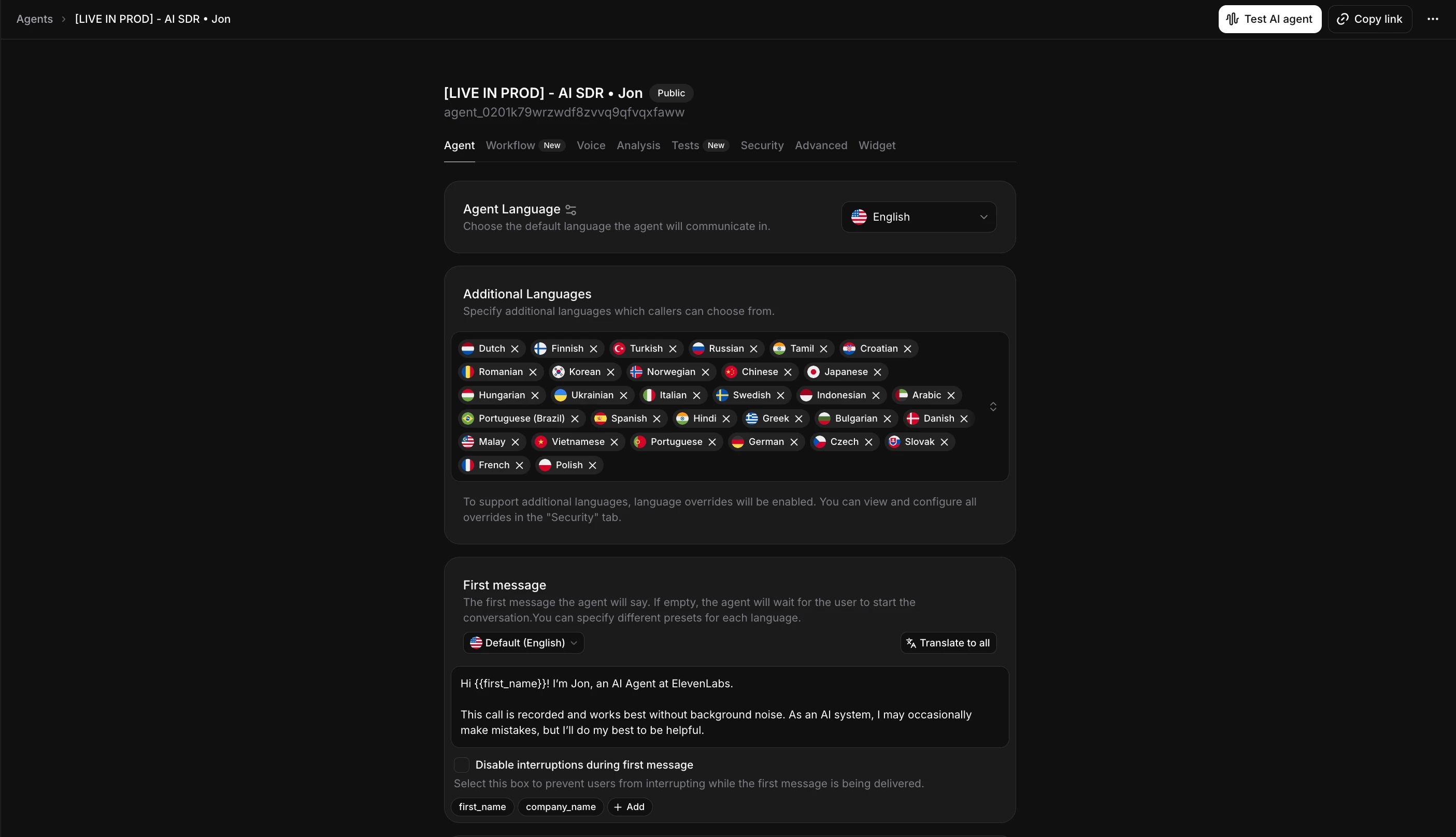

We defined how our SDR, “Jon,” behaves using the system prompt in our Agents Platform. In it, we outlined Jon’s core personality traits, such as being “a warm, consultative sales representative who makes complex AI topics feel approachable.” We also defined his main goal and the scenarios in which he should take action, such as booking a meeting or sending a message to our team. To learn more about the exact structure we followed, see our prompting guide.

We equipped Jon with an extensive knowledge base, including more than one hundred frequently asked questions and an overview of our product capabilities from our website documentation. For now, we update Jon’s knowledge base manually as new products or features are released. Soon, it will be possible to add a target URL and have the knowledge base update automatically on a regular schedule.

After launch, we worked with sales leaders across our GTM team to review call transcripts, identify mistakes, and improve the agent’s performance. Early learnings included:

Jon has access to the same tools a human SDR would use to manage inbound conversations independently.

At the start of each call, he reviews the contact form submission to gather context. During the call, he can then qualify leads and book meetings in real time by checking availability across time zones. After a meeting is booked, he sends our team a summary of the call.
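As a rough illustration of the time-zone step, the sketch below converts a list of free slots into the lead's local time and keeps only those that fall within working hours. It is not the tool Jon actually uses: the function name `slots_in_lead_timezone`, the 9-to-17 working-hours window, and the sample slots are all assumptions made for this example.

```python
from datetime import datetime
from zoneinfo import ZoneInfo


def slots_in_lead_timezone(free_slots_utc, lead_tz, earliest_hour=9, latest_hour=17):
    """Return free slots, expressed in the lead's local time, that fall
    within the lead's working hours (hypothetical 9:00-17:00 default)."""
    tz = ZoneInfo(lead_tz)
    local_slots = [slot.astimezone(tz) for slot in free_slots_utc]
    return [s for s in local_slots if earliest_hour <= s.hour < latest_hour]


# Hypothetical free slots on the sales team's calendar, stored in UTC.
free = [
    datetime(2025, 1, 6, 8, 0, tzinfo=ZoneInfo("UTC")),
    datetime(2025, 1, 6, 15, 0, tzinfo=ZoneInfo("UTC")),
]

# Only the 15:00 UTC slot lands inside New York working hours.
offered = slots_in_lead_timezone(free, "America/New_York")
for slot in offered:
    print(slot.isoformat())
```

Storing slots in UTC and converting only at the point of offering them keeps the comparison independent of where the lead or the sales rep is located.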

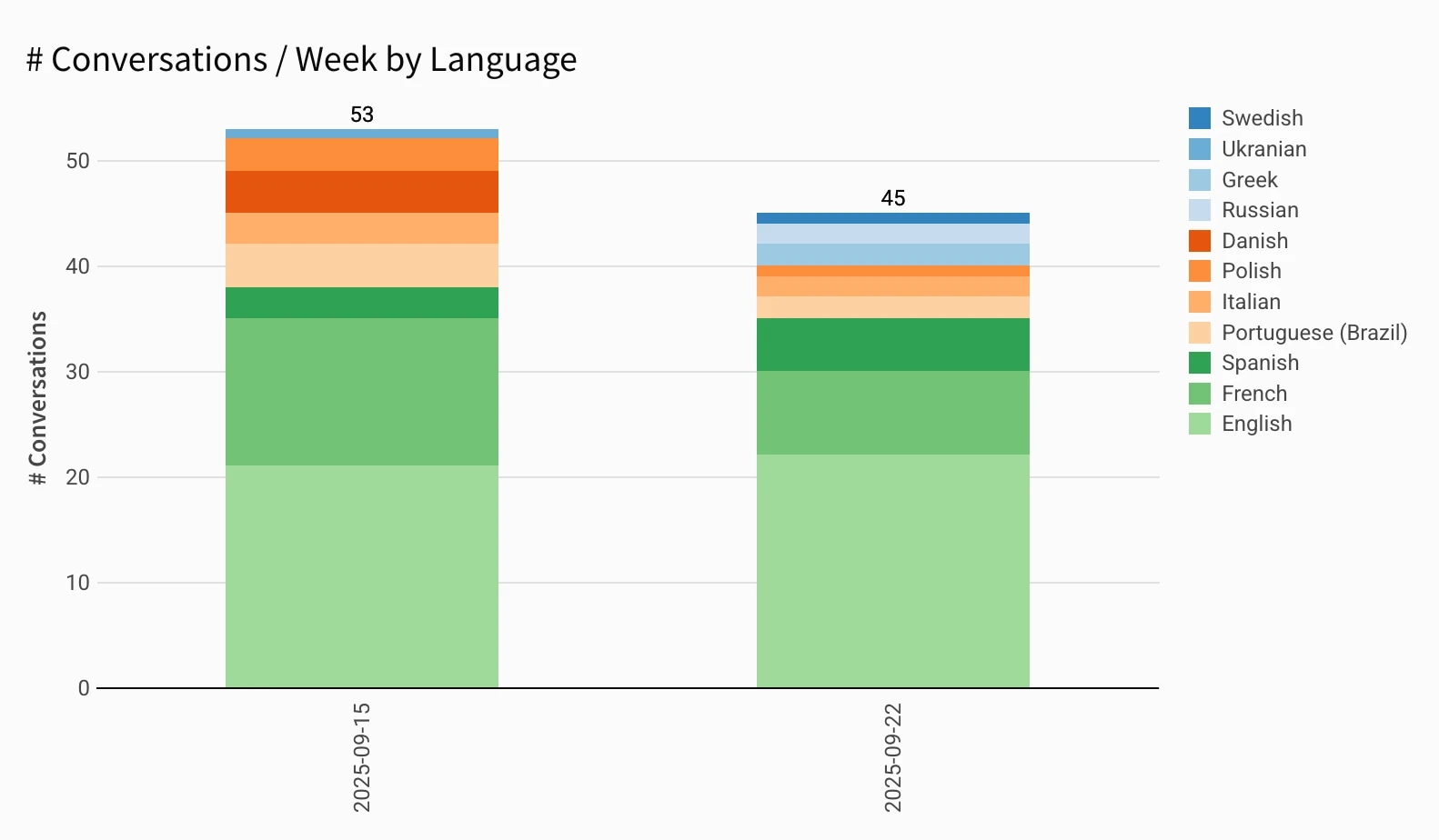

Jon can also switch seamlessly between 32 languages, with optimized voices for each locale. In a typical week, he uses more than eight languages.

At the end of each call, Jon captures the lead’s use case and qualification decision, writing this data directly to our CRM. This gives our team full visibility into every sales interaction.

Jon also records structured data such as customer satisfaction (CSAT) scores. At the end of a conversation, he asks leads to rate their experience on a scale of 1 to 10. We review the highest- and lowest-rated conversations daily to understand what worked and what didn’t.
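A daily review like this can be sketched in a few lines. The example below assumes each call is stored as a record with an ID and a 1-to-10 score; the `Call` class, the `pick_for_review` helper, and the sample scores are hypothetical and only illustrate the selection step.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Call:
    call_id: str
    csat: int  # lead's rating on a 1-10 scale


def pick_for_review(calls, n=2):
    """Return the n highest-rated and n lowest-rated calls."""
    ranked = sorted(calls, key=lambda c: c.csat)
    lowest = ranked[:n]
    highest = ranked[-n:][::-1]  # best call first
    return highest, lowest


# Made-up sample data for one day.
calls = [
    Call("a", 9),
    Call("b", 2),
    Call("c", 7),
    Call("d", 10),
    Call("e", 4),
]

highest, lowest = pick_for_review(calls)
average = mean(c.csat for c in calls)
print([c.call_id for c in highest], [c.call_id for c in lowest], average)
```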

These behaviors, capabilities, and data pipelines ensure Jon operates with both conversational fluency and operational efficiency, handling the same workflows as a human SDR.

Since launch, the AI SDR has been deployed across 38 countries with 24/7 availability. It now handles 50+ calls per week, equivalent to two full-time SDRs.

78% of its qualification decisions require no human intervention. In the remaining 22%, the agent mostly makes “false positive” decisions, qualifying leads that should have been disqualified. We prefer this outcome to the alternative of disqualifying leads that should have been qualified.

These false positives typically occur when leads estimate but cannot confirm their expected volume or launch timing. False negatives are rare and usually happen when a lead ends the call before the agent has enough information to decide. Going forward, the agent will return "N/A" for incomplete cases like these instead of disqualifying the lead.
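The three-way outcome described above can be sketched as a small decision function. This is an illustration under stated assumptions, not the agent's actual logic: the criteria (expected volume and launch timing) come from the text, but the thresholds `min_volume` and `max_months` and the function name `decide` are invented for the example.

```python
from enum import Enum
from typing import Optional


class Decision(Enum):
    QUALIFIED = "qualified"
    DISQUALIFIED = "disqualified"
    NOT_AVAILABLE = "N/A"


def decide(
    expected_volume: Optional[int],
    launch_in_months: Optional[int],
    min_volume: int = 1000,  # hypothetical threshold
    max_months: int = 6,     # hypothetical threshold
) -> Decision:
    """Return N/A when the call ended before both facts were captured,
    instead of defaulting to a disqualification."""
    if expected_volume is None or launch_in_months is None:
        return Decision.NOT_AVAILABLE
    if expected_volume >= min_volume and launch_in_months <= max_months:
        return Decision.QUALIFIED
    return Decision.DISQUALIFIED


print(decide(5000, 3).value)     # both facts known and above the thresholds
print(decide(200, 3).value)      # volume below the hypothetical threshold
print(decide(None, None).value)  # lead hung up early
```

Keeping "N/A" as its own state means an incomplete call is routed to a human reviewer rather than counted as a false negative.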

"We’ve seen a fundamental shift in how inbound sales can work at scale. With our AI SDR, anyone interested in our platform can now start a personalized conversation in their own language, at any time. The best way to understand our platform is to experience it first-hand, and qualified leads are now booking meetings in minutes instead of days." – Jonathan Chemouny, EMEA Sales Lead, ElevenLabs

With an average CSAT score of 8.7, early conversations show how quickly perceptions are changing about what conversational agents can achieve. Here are a few excerpts:

Over the next few months, we will expand the agent’s capabilities through our new workflows feature. Workflows are designed to handle more complex, dynamic conversations and support more reliable decision-making. This will also unlock new agent use cases such as outbound sales and re-engaging unresponsive leads.

Today, our team reviews every decision made by the AI SDR. Soon, the system will book meetings directly on our account executives' calendars with scheduling logic that automatically routes each meeting to the correct owner.

Our AI SDR has already reshaped our inbound sales process by meeting prospect expectations and giving our team more time to focus where it matters. We see this as another step toward a future where voice is the primary interface for technology.

Looking to build your own conversational agents? Get started here.
