Introducing Conversational AI

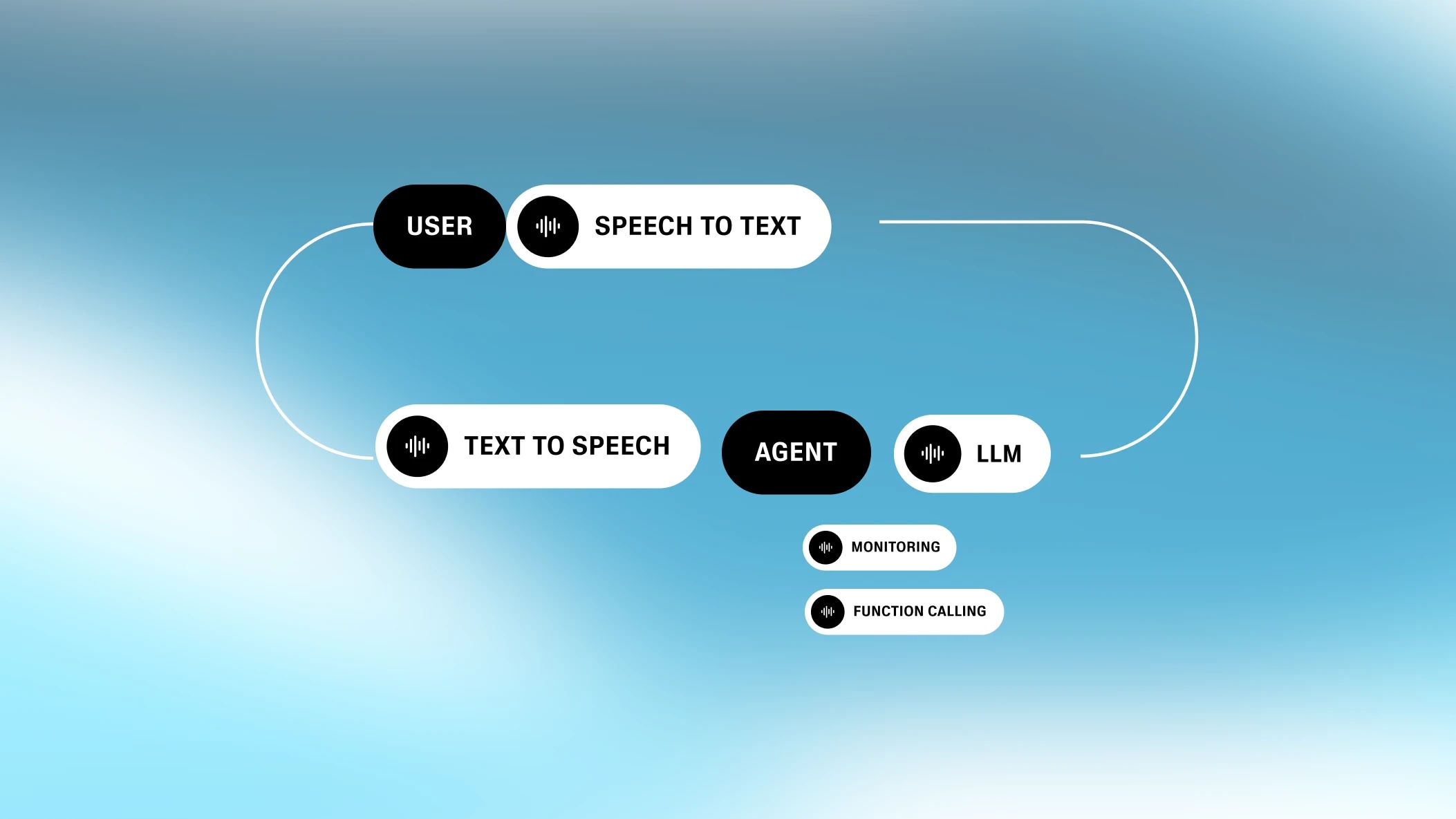

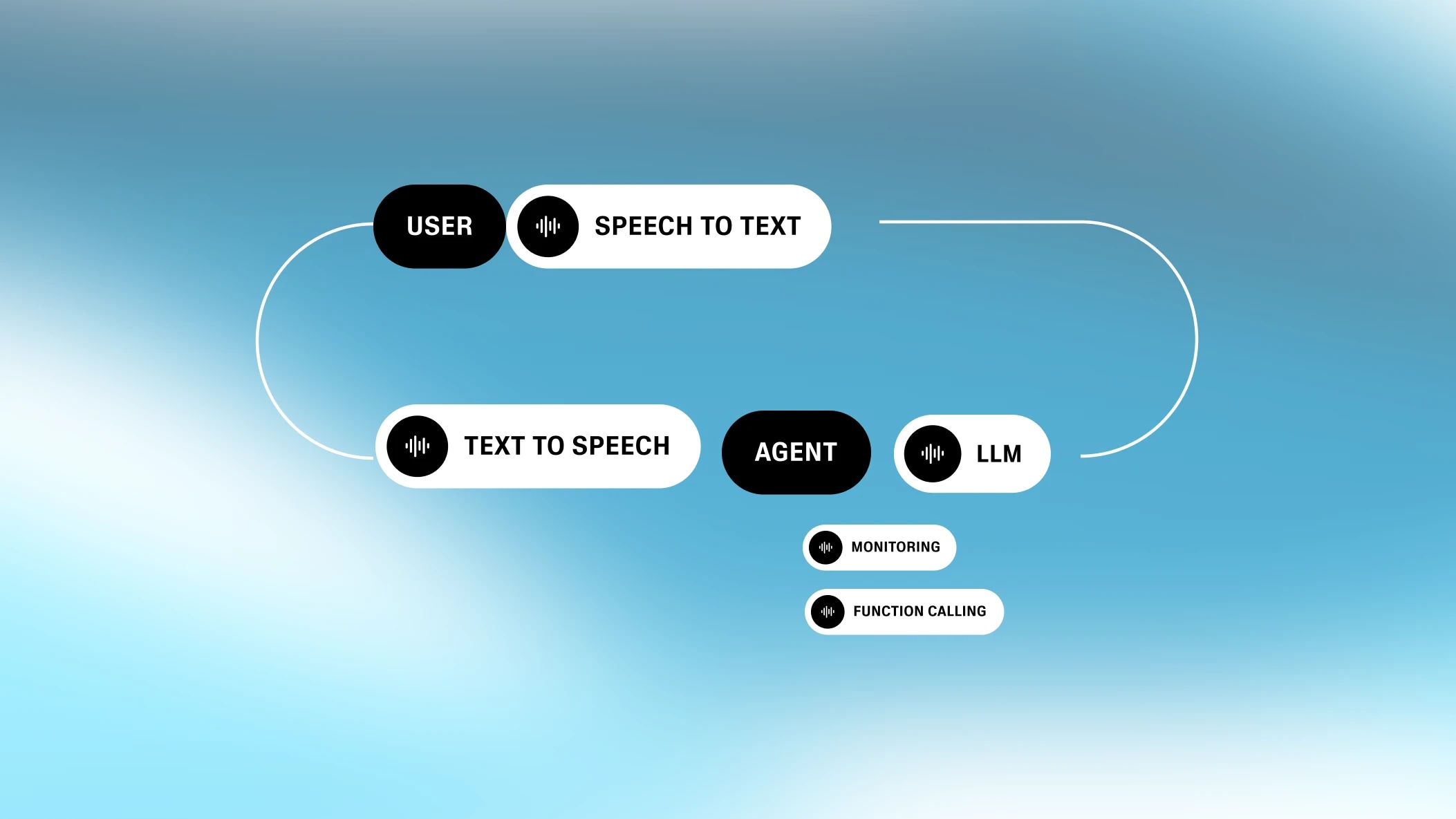

Our all in one platform for building customizable, interactive voice agents

Successfully resolving >80% of user inquiries

At ElevenLabs, we recently embedded a Conversational AI voice agent in our docs to reduce the support burden from documentation-related questions (test it out here). Our support agent now successfully handles over 80% of user inquiries across 200 calls per day. These results show the potential for AI to augment traditional documentation support, while also highlighting the continued importance of human support for complex queries. In this post, I'll walk through the iterative process we followed so you can replicate our results.

We set out to build an agent that can:

We implemented two layers of evaluation:

(1) AI Evaluation Tooling: For each call, our built-in evaluation tooling reviews the finished conversation and evaluates whether the agent was successful. The criteria are fully customizable. We ask whether the agent solved the user's inquiry or was able to redirect them to a relevant support channel.

We have steadily improved the LLM's ability to solve or successfully redirect inquiries, reaching an 80% success rate according to our evaluation tooling.

This excludes calls with fewer than one turn in the conversation, which implies the caller raised no question or issue.
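To make the metric concrete, here is a minimal sketch of computing a success rate from per-call evaluation results while excluding calls with fewer than one turn. The `Call` record and `success_rate` function are hypothetical, and counting "unknown" verdicts as unresolved (keeping them in the denominator) is our assumption; the post does not say how they are treated.

```python
from dataclasses import dataclass


@dataclass
class Call:
    turns: int    # number of conversational turns in the call
    verdict: str  # "success", "failure" or "unknown" from the evaluation LLM


def success_rate(calls: list[Call]) -> float:
    # Exclude calls with fewer than one turn: the caller raised no question.
    relevant = [c for c in calls if c.turns >= 1]
    if not relevant:
        return 0.0
    # Assumption: "unknown" verdicts stay in the denominator (count as unresolved).
    successes = sum(1 for c in relevant if c.verdict == "success")
    return successes / len(relevant)
```

Keeping "unknown" in the denominator gives a conservative figure; dropping those calls instead would raise the reported rate.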

It's important to note, however, that not every type of support query can be solved by an LLM, especially at a startup that builds fast and innovates constantly, with extremely technical and creative users. As a further caveat, an evaluation LLM will not judge correctly 100% of the time.

(2) Human Validation: To cross-check the accuracy of our LLM evaluation tooling, we manually reviewed 150 conversations using the same evaluation criteria provided to the LLM tooling:

The human evaluation also found that the documentation agent correctly answered or redirected 89% of relevant support questions.
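Because humans and the evaluation LLM graded the same conversations against the same criteria, their verdicts can be compared directly. The sketch below shows one way to do that: an overall agreement rate plus a count of each kind of disagreement. The function names and sample data are hypothetical, and the post does not report an agreement figure.

```python
from collections import Counter


def agreement(llm_verdicts: list[str], human_verdicts: list[str]) -> float:
    # Fraction of conversations where the LLM and the human reviewer agree.
    if len(llm_verdicts) != len(human_verdicts):
        raise ValueError("verdict lists must cover the same conversations")
    if not llm_verdicts:
        return 0.0
    matches = sum(a == b for a, b in zip(llm_verdicts, human_verdicts))
    return matches / len(llm_verdicts)


def disagreements(llm_verdicts: list[str], human_verdicts: list[str]) -> Counter:
    # Counts (llm_verdict, human_verdict) pairs where the two differ.
    return Counter((a, b) for a, b in zip(llm_verdicts, human_verdicts) if a != b)
```

Looking at the disagreement pairs, not just the overall rate, shows whether the evaluation LLM tends to be too lenient (LLM "success", human "failure") or too strict.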

Other findings:

The LLM-powered agent is adept at resolving clear and specific questions that can be answered with our documentation, pointing callers to the relevant documentation, and providing some initial guidance on more complex queries. In most of these cases, the agent provides quick, straightforward, and correct answers that are immediately helpful.

Questions include:

Recommendations:

On the flip side, the agent is less helpful with account issues, pricing or discount questions, and non-specific questions that would benefit from deeper investigation or querying. It also struggles with fairly vague and generic issues: despite being prompted to ask clarifying questions, the LLM usually favours answering with something from the documentation that merely seems relevant.

Questions include:

Recommendations:

“You are a technical support agent named Alexis. You will try to answer any questions that the user might have about ElevenLabs products. You will be given documentation on ElevenLabs products and should only use this information to answer questions about ElevenLabs. You should be helpful, friendly and professional. If you're unable to answer the question, redirect callers with redirectToEmailSupport (which opens an email on their end to support), if that does not seem to work, they can email directly to team@elevenlabs.io.

If the question or issue is not fully clear or specific enough, ask for more details and for which product they are requesting support. If the question is vague or very broad, ask them more specifically what they are trying to achieve and how.

Strictly stick to the language of your first message in the conversation, even when asked or spoken to in a different language. Say that it's better if they end and re-start the call, selecting the desired alternative language.

Your output will be read by a text to speech model so it should be formatted as it is pronounced. For example: instead of outputting "please contact team@elevenlabs.io" you should output "please contact 'team at elevenlabs dot I O'". Do not format your text response with bullet points, bold or headers. Do not return long lists but instead summarize them and ask which part the user is interested in. Do not return code samples but instead suggest the user views the code samples in our documentation. Return the response directly, do not start responses with "Agent:" or anything similar. Do not correct spelling mistakes, simply ignore them.

Answer succinctly in a couple of sentences and let the user guide you on where to give more detail.

You have the following tools at your disposal. Use them as appropriate based on the user's request:

`redirectToDocs`:

- When to use: In most situations, especially when the user needs more detailed information or guidance.

- Why: Providing direct access to documentation is helpful for complex topics, ensuring the user can review and understand the content on their own.

`redirectToEmailSupport`:

- When to use: If the user requires assistance with personal or account-specific issues.

- Why: Account-related inquiries are best handled by our support team via email, where they can securely access relevant details.

`redirectToExternalURL`:

- When to use: If the user asks about enterprise-level solutions or wants to join external communities such as our Discord server. Also if they seem to be a developer having technical difficulties with ElevenLabs.

- Why: Enterprise inquiries and community interactions fall outside the scope of direct in-platform support and are better handled through external links.

Guardrails:

- Stick to Elevenlabs related topics and products. If someone asks about non-elevenlabs subjects, say you are only here to answer about Elevenlabs products.

- Only redirect the caller to one page at a time, as each redirect overrides the previous one.

- Don't answer in long lists or with code. Instead direct to the documentation for coding samples.”
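The prompt relies on the LLM itself to write email addresses and similar text the way they are pronounced. If you want a deterministic safety net as well, one option (our illustration, not part of the setup described here) is to normalize email addresses in the agent's output before it reaches the text-to-speech model. This is a minimal sketch that assumes simple addresses. It also applies a made-up rule that two-letter top-level domains are spelled out letter by letter, which reproduces the prompt's "team at elevenlabs dot I O" example.

```python
import re

# Matches simple addresses such as team@elevenlabs.io (not a full RFC 5322 parser).
EMAIL_RE = re.compile(
    r"\b([A-Za-z0-9._%+-]+)@([A-Za-z0-9-]+(?:\.[A-Za-z0-9-]+)*)\.([A-Za-z]{2,})\b"
)


def _speak_email(match: re.Match) -> str:
    local, domain, tld = match.group(1), match.group(2), match.group(3)
    spoken_local = local.replace(".", " dot ")
    spoken_domain = domain.replace(".", " dot ")
    # Assumption: two-letter TLDs are spelled out ("io" -> "I O"),
    # longer ones are left as a word ("com" -> "com").
    spoken_tld = " ".join(tld.upper()) if len(tld) == 2 else tld
    return f"'{spoken_local} at {spoken_domain} dot {spoken_tld}'"


def normalize_for_tts(text: str) -> str:
    # Rewrite every matched email address into its spoken form.
    return EMAIL_RE.sub(_speak_email, text)
```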

Alongside the prompt, we pass the LLM a knowledge base of relevant information in its context. The knowledge base contains a summarised but still large (80k-character) version of all ElevenLabs documentation, along with some relevant URLs.

We also add clarifications and FAQs to the knowledge base.
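To give a sense of scale, here is a rough sketch of assembling the prompt, the summarised documentation, and the FAQs into one context and estimating its size. The function names and the layout of the context are hypothetical. The four-characters-per-token figure is only a common rule of thumb for English text; real tokenizers vary, so measure with the one your LLM actually uses.

```python
CHARS_PER_TOKEN = 4  # rough heuristic for English text; real tokenizers vary


def build_context(system_prompt: str, kb_sections: list[str],
                  faqs: list[tuple[str, str]]) -> str:
    # Concatenate prompt, documentation summary and FAQs into one context string.
    faq_text = "\n".join(f"Q: {q}\nA: {a}" for q, a in faqs)
    parts = [system_prompt, "Knowledge base:", *kb_sections,
             "FAQs and clarifications:", faq_text]
    return "\n\n".join(parts)


def estimate_tokens(text: str) -> int:
    # Ceiling division so partial tokens round up.
    return -(-len(text) // CHARS_PER_TOKEN)
```

Under this heuristic, the 80k-character documentation summary alone comes to roughly 20,000 tokens, which is worth checking against your model's context window and per-call cost.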

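We have three tools configured: `redirectToDocs`, `redirectToEmailSupport`, and `redirectToExternalURL`, each described in the prompt above.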
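The prompt's guardrail that each redirect overrides the previous one suggests the tools run on the caller's side. This is a minimal sketch of a client-side handler for the three tools, assuming that setup. The `url` parameter name, the `mailto:` behaviour for email support, and the `RedirectState` class are our assumptions for illustration, not the platform's API.

```python
from dataclasses import dataclass, field
from typing import Optional

SUPPORT_EMAIL = "team@elevenlabs.io"


@dataclass
class RedirectState:
    current: Optional[str] = None                      # only one active redirect at a time
    history: list[str] = field(default_factory=list)   # every redirect issued, in order

    def handle(self, tool_name: str, params: dict) -> str:
        # Hypothetical mapping from tool call to redirect target.
        if tool_name in ("redirectToDocs", "redirectToExternalURL"):
            target = params["url"]  # assumed parameter name
        elif tool_name == "redirectToEmailSupport":
            target = f"mailto:{SUPPORT_EMAIL}"  # assumed: opens the user's email client
        else:
            raise ValueError(f"unknown tool: {tool_name}")
        self.current = target  # overrides any previous redirect
        self.history.append(target)
        return f"Redirected to {target}"
```

Returning a short confirmation string gives the agent something to tell the caller. Because `current` is simply overwritten, issuing two redirects in one turn would leave only the last one visible, which is why the prompt limits the agent to one page at a time.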

Our evaluation tooling has an LLM review the final transcript and assess the conversation against the defined criteria.

Evaluation Criteria (success / failure / unknown)
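The platform runs this evaluation for you on each call. To illustrate the idea, here is a sketch of an LLM-as-judge step. It asks a model to grade a transcript against the criterion described earlier (did the agent solve the inquiry or redirect to a relevant support channel?) and maps the answer onto the three outcomes. The `llm` callable stands in for whatever model client you use. The prompt wording and function names are hypothetical, not the platform's actual implementation.

```python
from enum import Enum
from typing import Callable


class Verdict(Enum):
    SUCCESS = "success"
    FAILURE = "failure"
    UNKNOWN = "unknown"


CRITERION = ("Did the agent solve the user's inquiry, or redirect them "
             "to a relevant support channel?")


def build_judge_prompt(transcript: str, criterion: str = CRITERION) -> str:
    return (f"Evaluate the following support conversation against this criterion:\n"
            f"{criterion}\n\nTranscript:\n{transcript}\n\n"
            "Answer with exactly one word: success, failure, or unknown.")


def parse_verdict(raw: str) -> Verdict:
    # Normalize the model's reply; anything unexpected becomes UNKNOWN.
    word = raw.strip().lower().rstrip(".")
    try:
        return Verdict(word)
    except ValueError:
        return Verdict.UNKNOWN


def evaluate(transcript: str, llm: Callable[[str], str]) -> Verdict:
    return parse_verdict(llm(build_judge_prompt(transcript)))
```

Mapping malformed replies to "unknown" rather than guessing keeps judge errors visible in the results instead of silently inflating either success or failure.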

Data Collection:

Our documentation agent has proven effective at helping users with common product and support questions, and it is an engaging copilot for users navigating our docs. Continuous automated and manual monitoring lets us keep iterating on and improving it. An LLM can't resolve every support query, but we've found that the more we automate, the more time our team can spend on the tricky and interesting problems that come up at the margins as our community continues to push the boundaries of what is possible with AI Audio.

Our agent is powered by ElevenLabs Conversational AI. If you'd like to reproduce my results, you can create a free account and follow these steps. If you get stuck, you can speak to the agent we've deployed on our docs or get in touch with me and my team on Discord. For high-volume use cases (more than 100 calls per day), contact our sales team about volume discounts.
