Eleven v3 Audio Tags: Giving situational awareness to AI audio

Enhance AI speech with Eleven v3 Audio Tags. Control tone, emotion, and pacing for natural conversation. Add situational awareness to your text to speech.

ElevenLabs' audio tags control AI voice emotion, pacing, and sound effects.

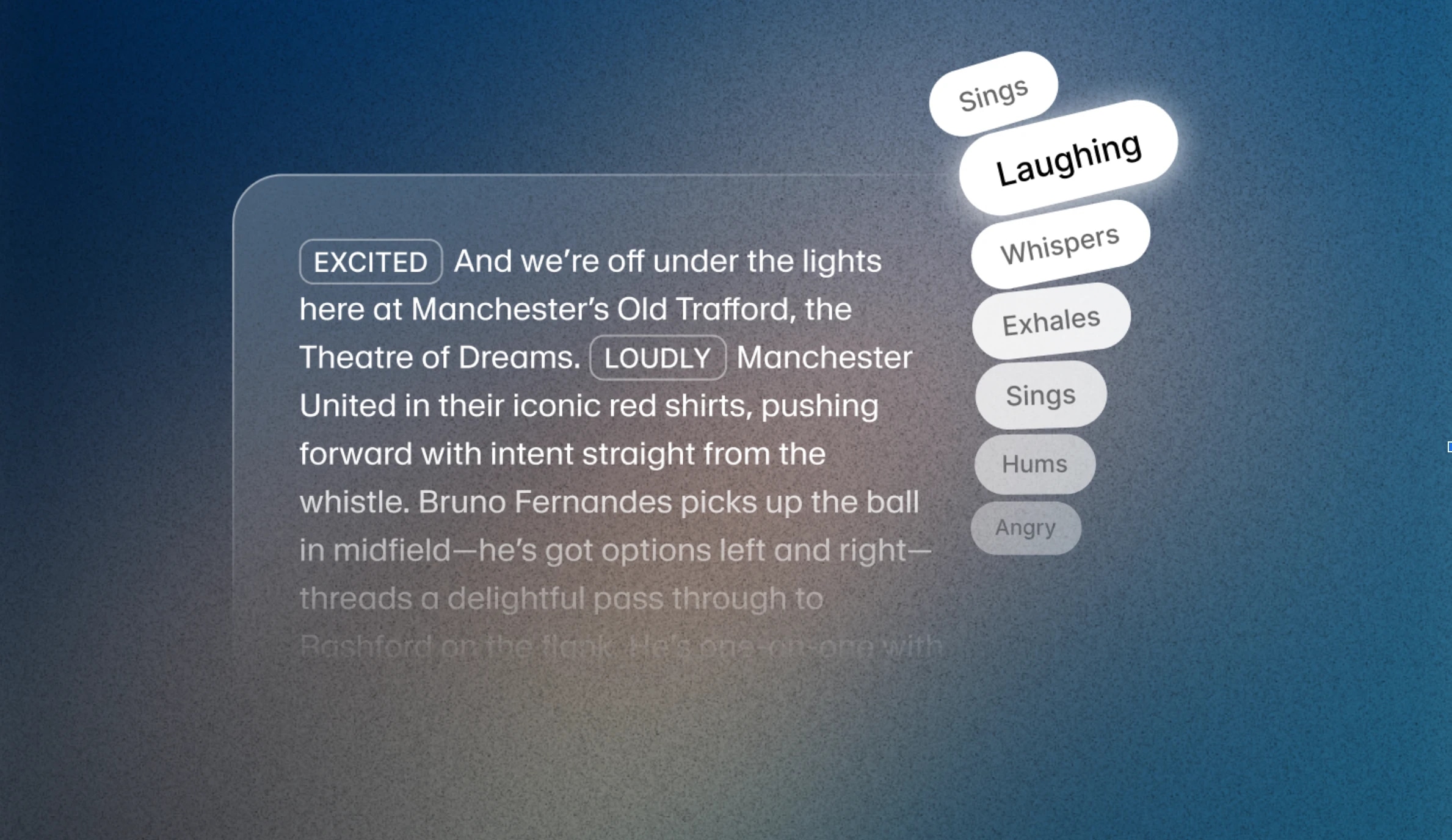

With the release of Eleven v3, audio prompting has become an essential skill. Instead of only typing or pasting the words you want the AI voice to say, you can now use a new capability, Audio Tags, to control everything from emotion to delivery.

Eleven v3 is an alpha research preview of the new model. It requires more prompt engineering than previous models, but the generations are breathtaking.

ElevenLabs Audio Tags are words wrapped in square brackets that the new Eleven v3 model can interpret and use to direct the audible action. They can be anything from [excited], [whispers], and [sighs] through to [gunshot], [clapping] and [explosion].
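Because Audio Tags are bracketed words inside ordinary text, a script can be inspected before it is sent for generation. The Python sketch below uses two hypothetical helpers, `extract_tags` and `spoken_text` (not part of any ElevenLabs SDK), to list the tags in a script and to strip them out so you can preview the words that will actually be spoken. It assumes tags never contain nested brackets.

```python
import re

# Hypothetical pattern: one Audio Tag such as [whispers] or [French accent].
# Assumes tags contain no nested or escaped square brackets.
TAG_PATTERN = re.compile(r"\[([^\[\]]+)\]")


def extract_tags(script: str) -> list[str]:
    """Return the Audio Tags in the order they appear, without brackets."""
    return TAG_PATTERN.findall(script)


def spoken_text(script: str) -> str:
    """Return the script with all tags removed and whitespace collapsed."""
    without_tags = TAG_PATTERN.sub("", script)
    return " ".join(without_tags.split())


if __name__ == "__main__":
    line = "[whispers] Did you hear that? [gunshot] [shouts] Get down!"
    print(extract_tags(line))  # ['whispers', 'gunshot', 'shouts']
    print(spoken_text(line))   # Did you hear that? Get down!
```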

Audio Tags let you shape how AI voices sound, including nonverbal cues like tone, pauses, and pacing. Whether you're building immersive audiobooks, interactive characters, or dialogue-driven media, these simple script-level tools give you precise control over emotion and delivery.

You can place Audio Tags anywhere in your script to shape delivery at exactly that point, and you can combine several tags within a script or even within a single sentence. Tags fall into three core categories: emotion, delivery, and human reactions.

Emotion tags set the emotional tone of the voice, whether it's somber, intense, or upbeat. For example, you could use one or a combination of [sad], [angry], [happily], and [sorrowful].

Delivery tags are more about tone and performance. Use them to adjust volume and energy for scenes that need restraint or force. Examples include [whispers], [shouts], and even [x accent], where x is an accent such as French.

Reaction tags reflect the fact that truly natural speech includes reactions. They add realism by embedding natural, unscripted moments into speech, for example [laughs], [clears throat], and [sighs].
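To make the categories described above concrete, here is a hypothetical pre-flight check in Python that groups the tags in a script by category. The category names and tag sets are assumptions built only from the examples in this article; they are not an official or exhaustive vocabulary, and any tag that doesn't match (such as a sound effect like [gunshot]) falls into "other".

```python
import re
from collections import defaultdict

# Hypothetical pattern for one bracketed Audio Tag (no nested brackets).
TAG_PATTERN = re.compile(r"\[([^\[\]]+)\]")

# Illustrative tag sets taken from the article's examples only.
CATEGORIES = {
    "emotion": {"sad", "angry", "happily", "sorrowful"},
    "delivery": {"whispers", "shouts"},
    "reaction": {"laughs", "clears throat", "sighs"},
}


def categorize(tag: str) -> str:
    """Map one tag (without brackets) to an illustrative category."""
    name = tag.strip().lower()
    if name.endswith(" accent"):
        return "delivery"  # the [x accent] pattern, e.g. [French accent]
    for category, names in CATEGORIES.items():
        if name in names:
            return category
    return "other"  # sound effects or tags not listed above


def tags_by_category(script: str) -> dict[str, list[str]]:
    """Group every tag in the script by category, preserving order."""
    grouped: dict[str, list[str]] = defaultdict(list)
    for tag in TAG_PATTERN.findall(script):
        grouped[categorize(tag)].append(tag)
    return dict(grouped)


if __name__ == "__main__":
    script = ("[sad] I waited all night. [sighs] [whispers] He never came. "
              "[French accent] Au revoir.")
    print(tags_by_category(script))
    # {'emotion': ['sad'], 'reaction': ['sighs'], 'delivery': ['whispers', 'French accent']}
```

The example script mixes all three categories in a few sentences, mirroring the point that tags can be combined freely; a check like this only reports what is in the script and says nothing about how the model will render each tag.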

Underpinning these features is the new architecture behind v3. The model understands text context at a deeper level, which means it can follow emotional cues, tone shifts, and speaker transitions more naturally. Combined with Audio Tags, this unlocks greater expressiveness than was previously possible in TTS.

You can now also create multi-speaker dialogues that feel spontaneous — handling interruptions, shifting moods, and conversational nuance with minimal prompting.

Professional Voice Clones (PVCs) are not yet fully optimized for Eleven v3, so clone quality may be lower than with earlier models. During this research preview, it's best to use an Instant Voice Clone (IVC) or a designed voice if your project needs v3 features. PVC optimization for v3 is coming in the near future.

Eleven v3 is available in the ElevenLabs UI, and we’re offering 80% off until the end of June. The public API for Eleven v3 (alpha) is also available. Whether you’re experimenting or deploying at scale, now’s the time to explore what’s possible.

Creating AI speech that performs — not just reads — comes down to mastering Audio Tags. We've produced seven concise, hands-on guides that show how tags like [WHISPER], [LAUGHS SOFTLY], or [French accent] let you shape context, emotion, pacing, and even multi-character dialogue with a single model.

- [WHISPER], [SHOUTING], and [SIGH] let Eleven v3 react to the moment: raising stakes, softening warnings, or pausing for suspense.
- From [pirate voice] to [French accent], tags turn narration into role-play. Shift persona mid-line and direct full-on character performances without changing models.
- [sigh], [excited], or [tired] steer feelings moment by moment, layering tension, relief, or humour with no re-recording needed.
- [pause], [awe], or [dramatic tone] control rhythm and emphasis so AI voices guide the listener through each beat.
- [interrupting], [overlapping], or tone switches: one model, many voices, for natural conversation in a single take.
- [pause], [rushed], or [drawn out] give precision over tempo, turning plain text into performance.
- [American accent], [British accent], [Southern US accent], and more enable culturally rich speech without model swaps.

