RE:ANIME

DUBBING STUDIO

Localize content across 29 languages with AI dubbing

Translate audio and video while preserving the emotion, timing, tone and unique characteristics of each speaker

AI dubbing with original voices

Our dubbing tool maintains the original speaker’s voice and style across all supported languages, ensuring your content remains emotionally and audibly authentic to audiences worldwide

Instantly dub from any source

Upload or link your videos from any platform, be it YouTube, X/Twitter, TikTok, Vimeo, or a URL, and start translating videos immediately, no matter where your content lives

Fully managed dubbing with ElevenStudios

Expand your reach by translating your content for audiences worldwide. Let our AI and bilingual dubbing experts do the work for you.

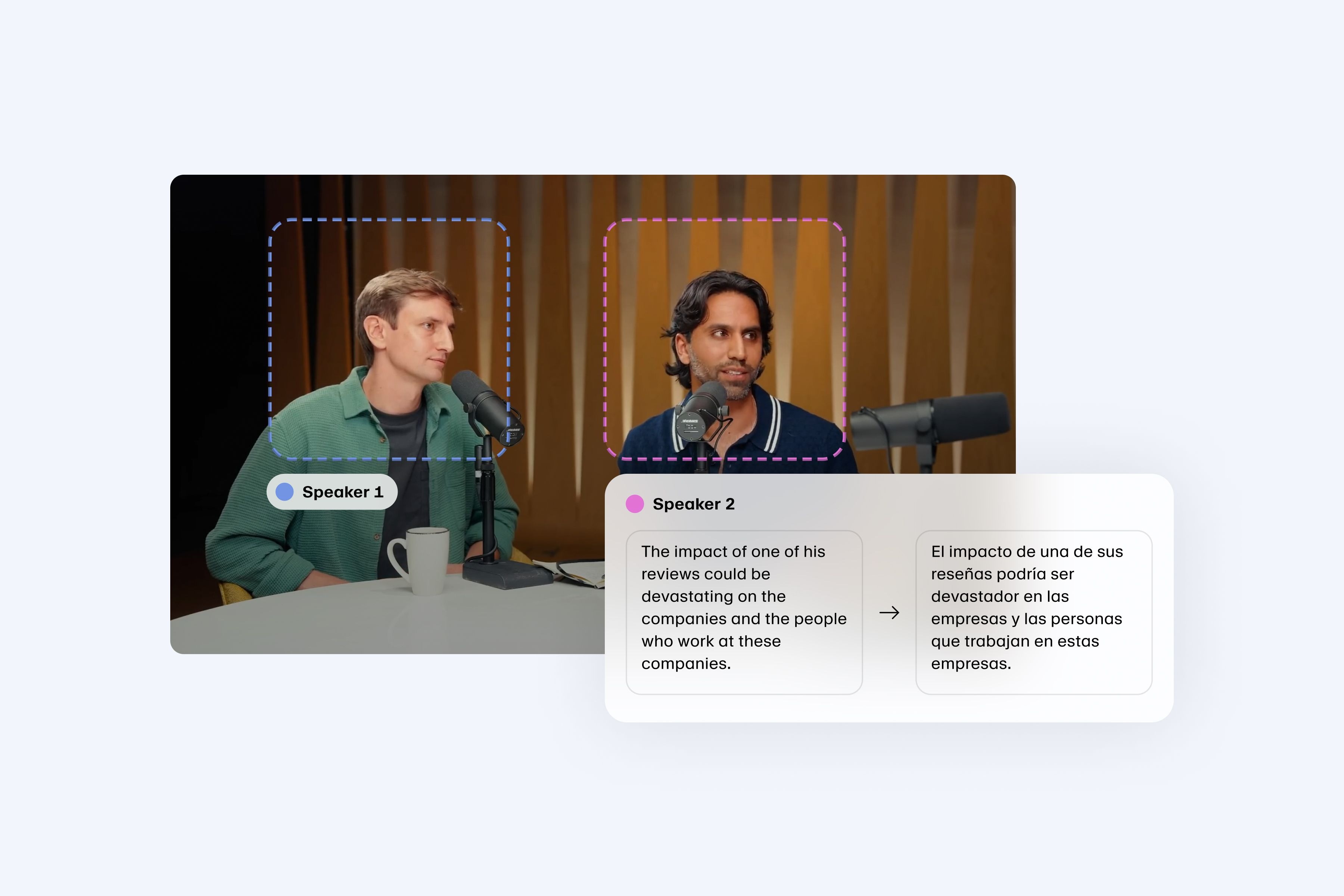

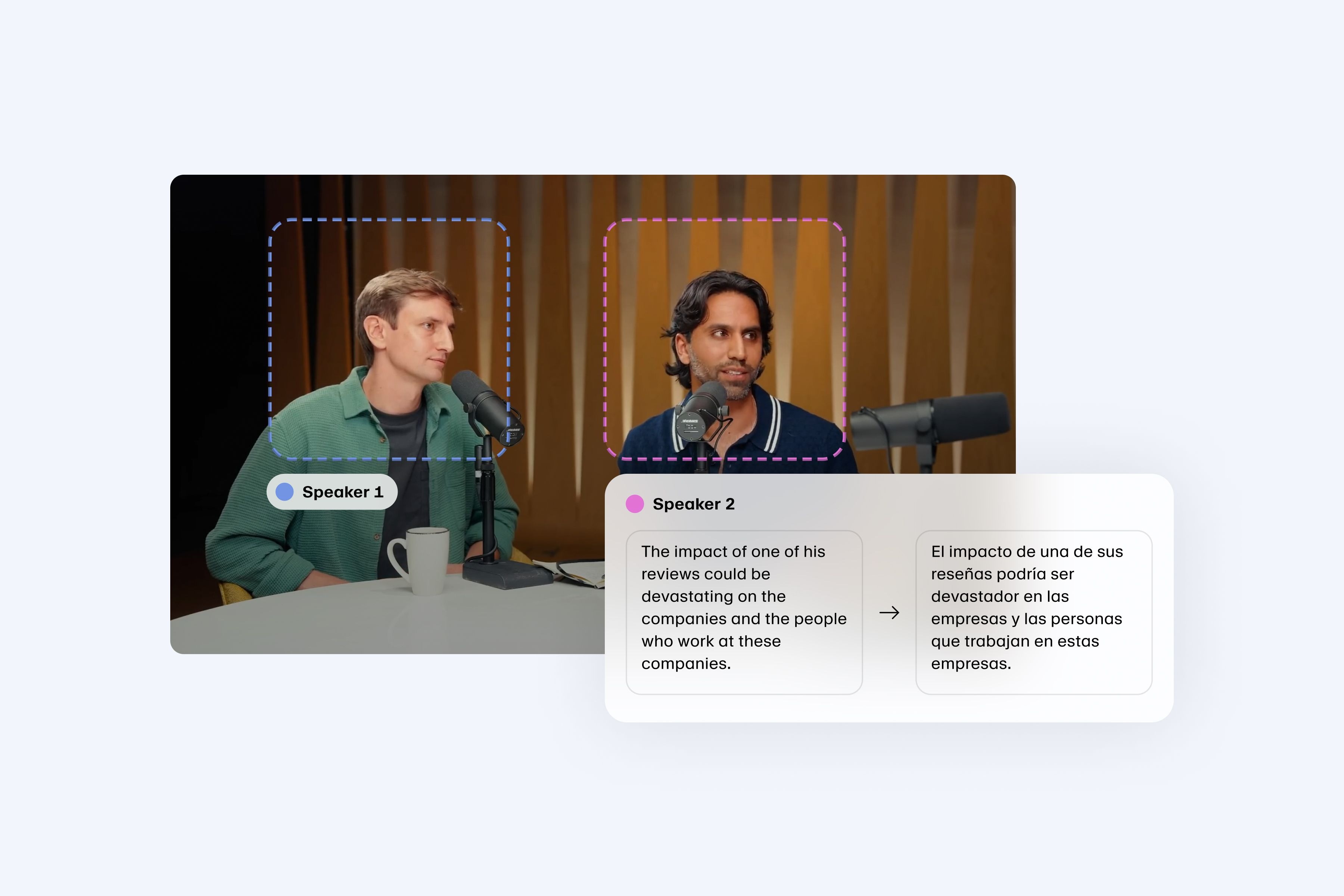

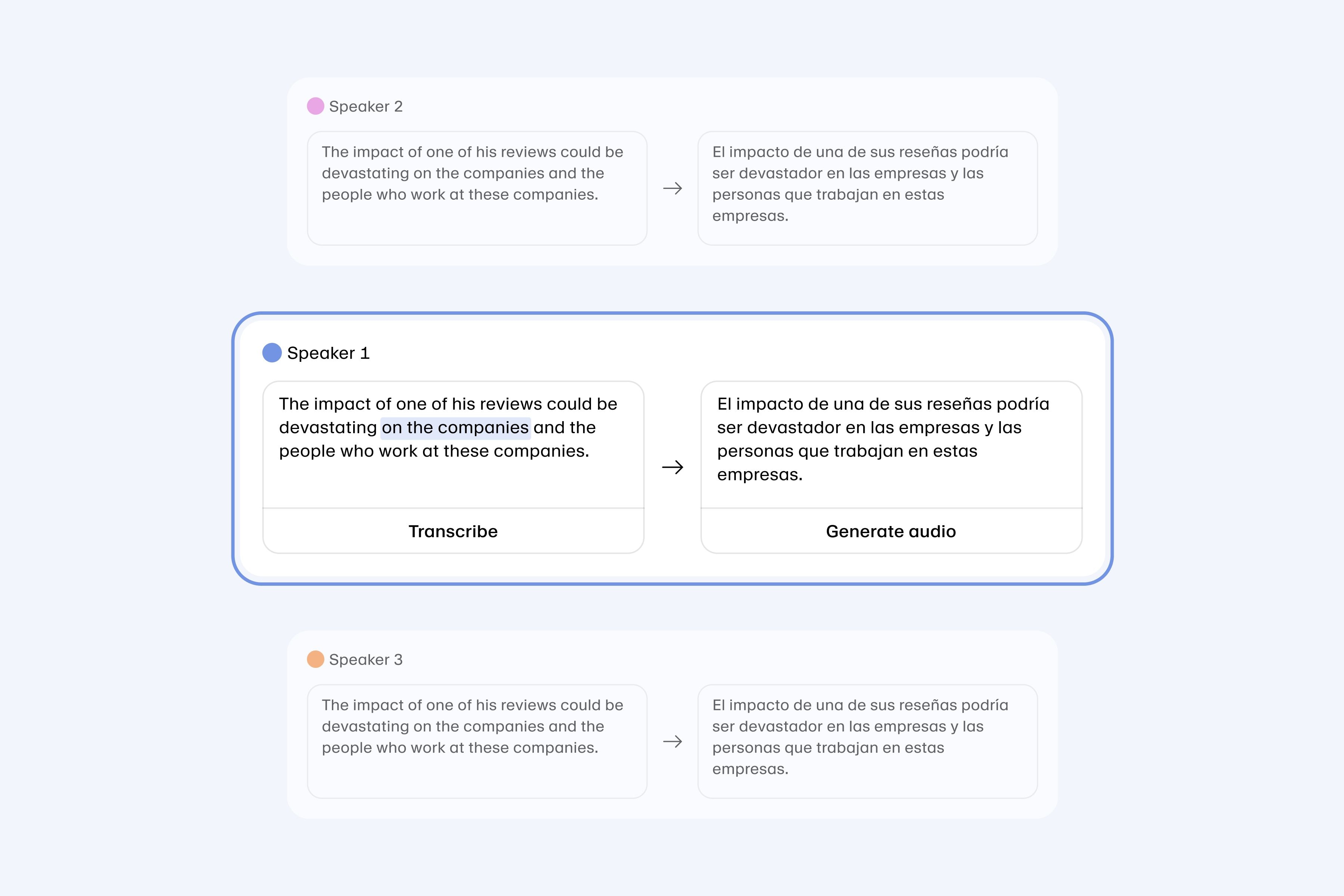

Automatic speaker detection

Our dubbing AI analyzes your video and automatically recognizes who says what and when, so every dubbed voice matches the original speaker in content, intonation, and speech duration

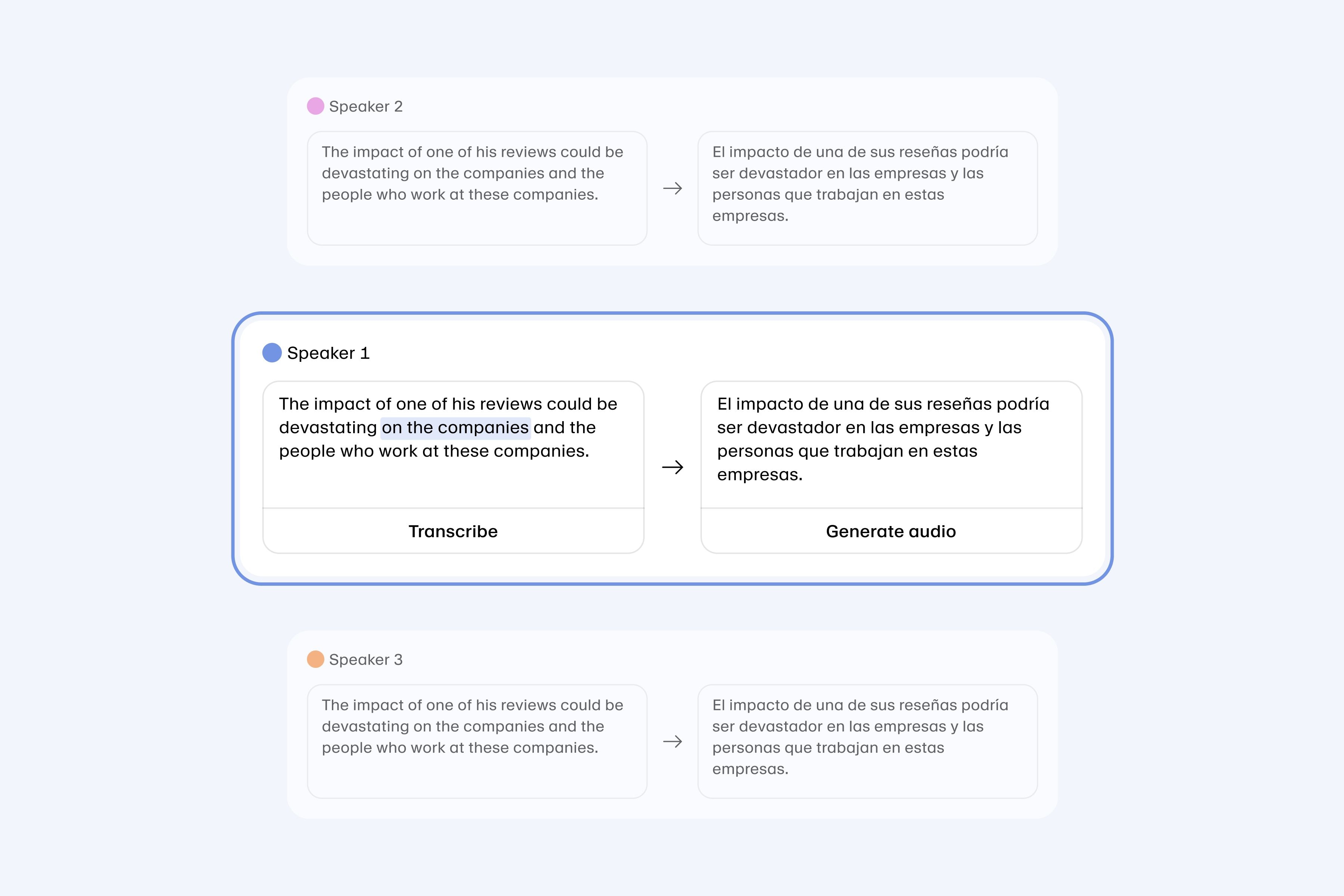

Video transcript and translation editing

Our AI video translator lets you manually edit transcripts and translations to ensure your content is properly synced and localized. Adjust the voice settings to tune delivery, and regenerate speech segments until the output sounds just right

Customize tracks

Personalize each audio track with specific settings like stability, similarity, and style, optimizing voice output for every character in your project
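As a rough illustration of per-track settings, the sketch below models one settings object per speaker. Only the names stability, similarity, and style come from the description above. The `VoiceSettings` class, the 0.0 to 1.0 range, the default values, and the `settings_for` helper are assumptions made for this example, not the product's actual API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VoiceSettings:
    # Defaults and the 0.0-1.0 range are assumptions for illustration only.
    stability: float = 0.5
    similarity: float = 0.75
    style: float = 0.0

    def __post_init__(self):
        # Reject values outside the assumed range early.
        for name in ("stability", "similarity", "style"):
            value = getattr(self, name)
            if not 0.0 <= value <= 1.0:
                raise ValueError(f"{name} must be between 0.0 and 1.0, got {value}")


# Hypothetical per-character overrides for one project.
track_settings = {
    "narrator": VoiceSettings(stability=0.8),
    "villain": VoiceSettings(stability=0.3, style=0.6),
}


def settings_for(speaker, overrides, default=VoiceSettings()):
    """Return the speaker's own settings, or the project default."""
    return overrides.get(speaker, default)
```

Calling `settings_for("villain", track_settings)` returns the looser, more stylized settings, while any speaker without an override falls back to the default.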

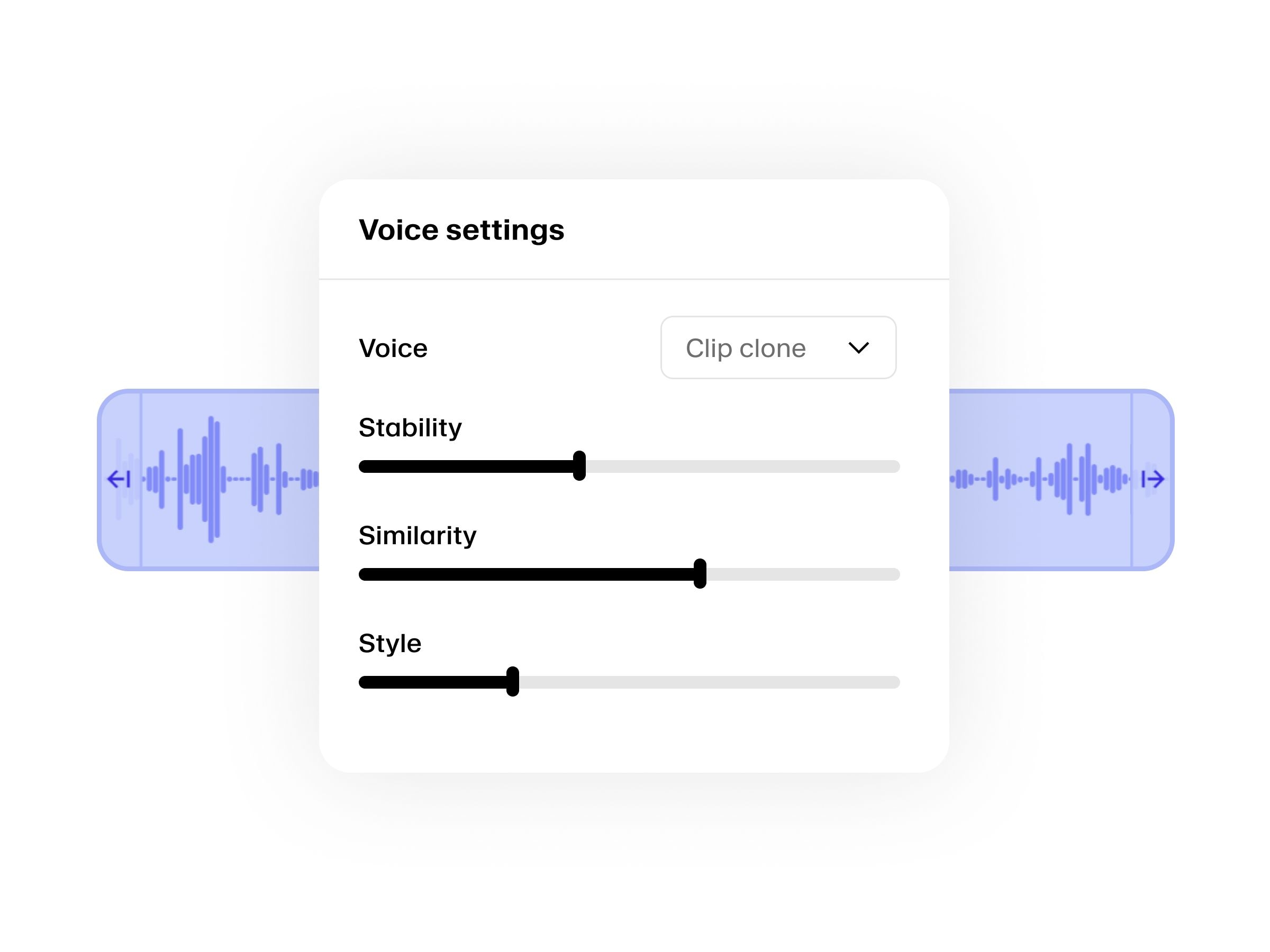

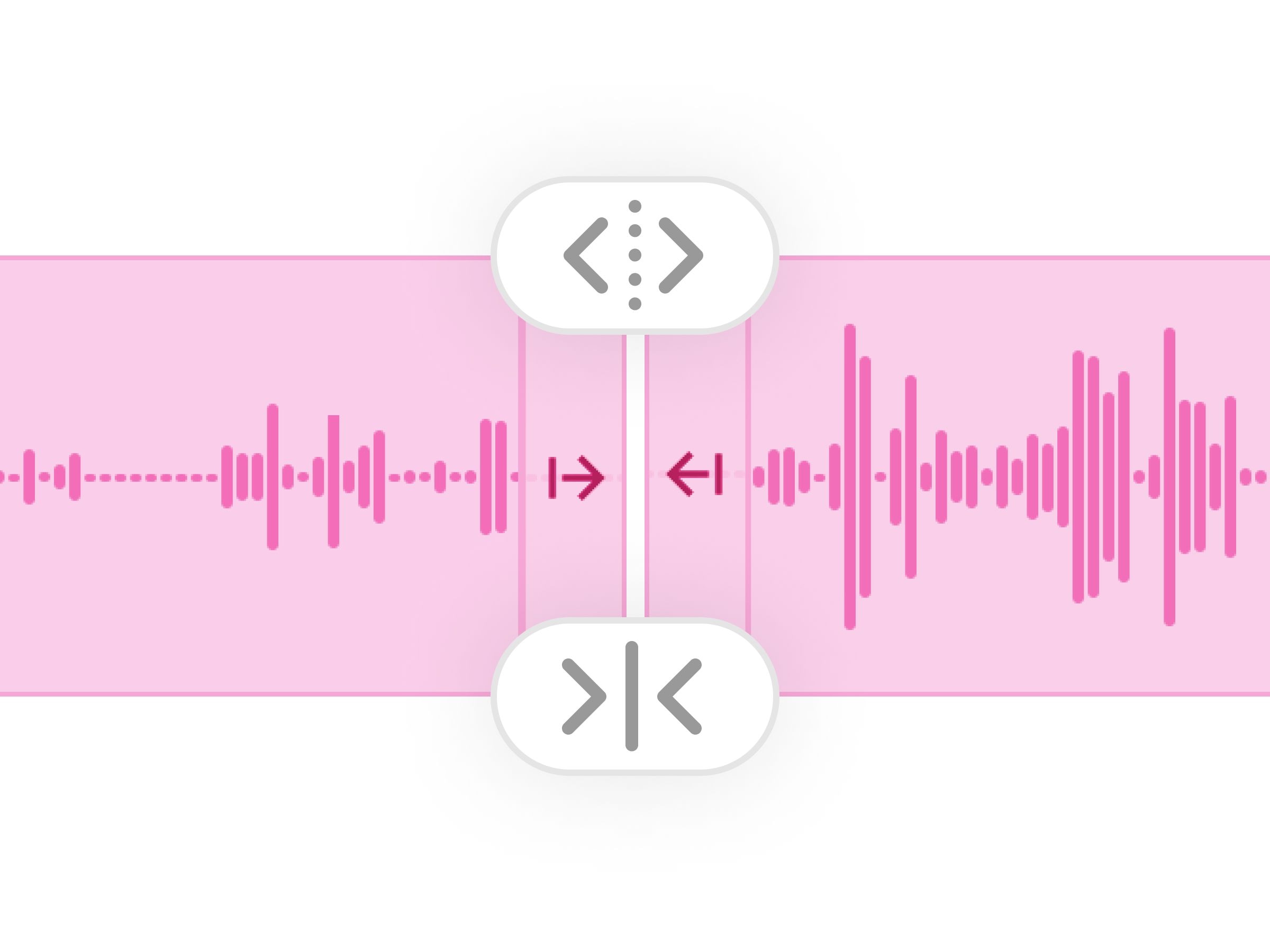

Manage clips

Merge, split, delete, or move clips around to tailor your project’s audio to the exact requirements of your scene or dialogue, and sync dialogue with on-screen action
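The clip operations above can be pictured with a small data model. This is a minimal sketch, assuming each clip is a speaker label, a start and end time in seconds, and its translated text. The `Clip` class and the `split_clip`, `merge_clips`, and `move_clip` functions are hypothetical names for this example and do not reflect how the dubbing studio is implemented.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Clip:
    speaker: str
    start: float  # seconds from the start of the video
    end: float
    text: str


def split_clip(clip, at, text_before, text_after):
    """Split one clip into two at time `at`, dividing the text by hand."""
    if not clip.start < at < clip.end:
        raise ValueError("split point must fall inside the clip")
    return (
        replace(clip, end=at, text=text_before),
        replace(clip, start=at, text=text_after),
    )


def merge_clips(a, b):
    """Join two clips from the same speaker into one, in time order."""
    if a.speaker != b.speaker:
        raise ValueError("can only merge clips from the same speaker")
    first, second = sorted((a, b), key=lambda c: c.start)
    return Clip(
        a.speaker,
        first.start,
        max(first.end, second.end),
        f"{first.text} {second.text}",
    )


def move_clip(clip, new_start):
    """Shift a clip on the timeline while keeping its duration."""
    duration = clip.end - clip.start
    return replace(clip, start=new_start, end=new_start + duration)
```

Keeping the clips immutable means every edit yields a new clip, which makes it easy to tell which segments changed and would need their speech regenerated.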

Regenerate clips

Refresh any dub clip with updated settings or translations to ensure your audio matches your latest edits perfectly, maintaining consistency and quality.

Flexible timeline

Adjust each audio clip's position directly on the timeline for a precise match, and fine-tune the sync between spoken audio and on-screen action

How to Dub Videos into 29 Languages Automatically (with Voice Cloning)

Want to reach millions of new viewers by dubbing your videos into different languages? In this tutorial, you'll learn step-by-step how to dub your YouTube videos (or any video content) into 29 languages automatically using ElevenLabs AI dubbing and voice cloning.

ENTERPRISE

Power audio experiences in publishing at scale

✓ Enterprise-level SLAs

✓ Dedicated support

✓ Priority access

✓ API access

✓ Unlimited seats

✓ Volume discounts

Trusted by leading publishing and media companies

Explore our cutting-edge technology

Create custom sound effects and ambient audio with our powerful AI sound effect generator.

Automate video voiceovers, ad reads, podcasts, and more, in your own voice

Say it how you want and hear it delivered in a completely different voice, with full control over the performance. Capture whispers, laughs, accents, and subtle emotional cues.