Eleven v3 Audio Tags: Giving situational awareness to AI audio

Enhance AI speech with Eleven v3 Audio Tags. Control tone, emotion, and pacing for natural conversation. Add situational awareness to your Text to Speech.

ElevenLabs Audio Tags control the emotion, pacing, and sound effects of AI voices.

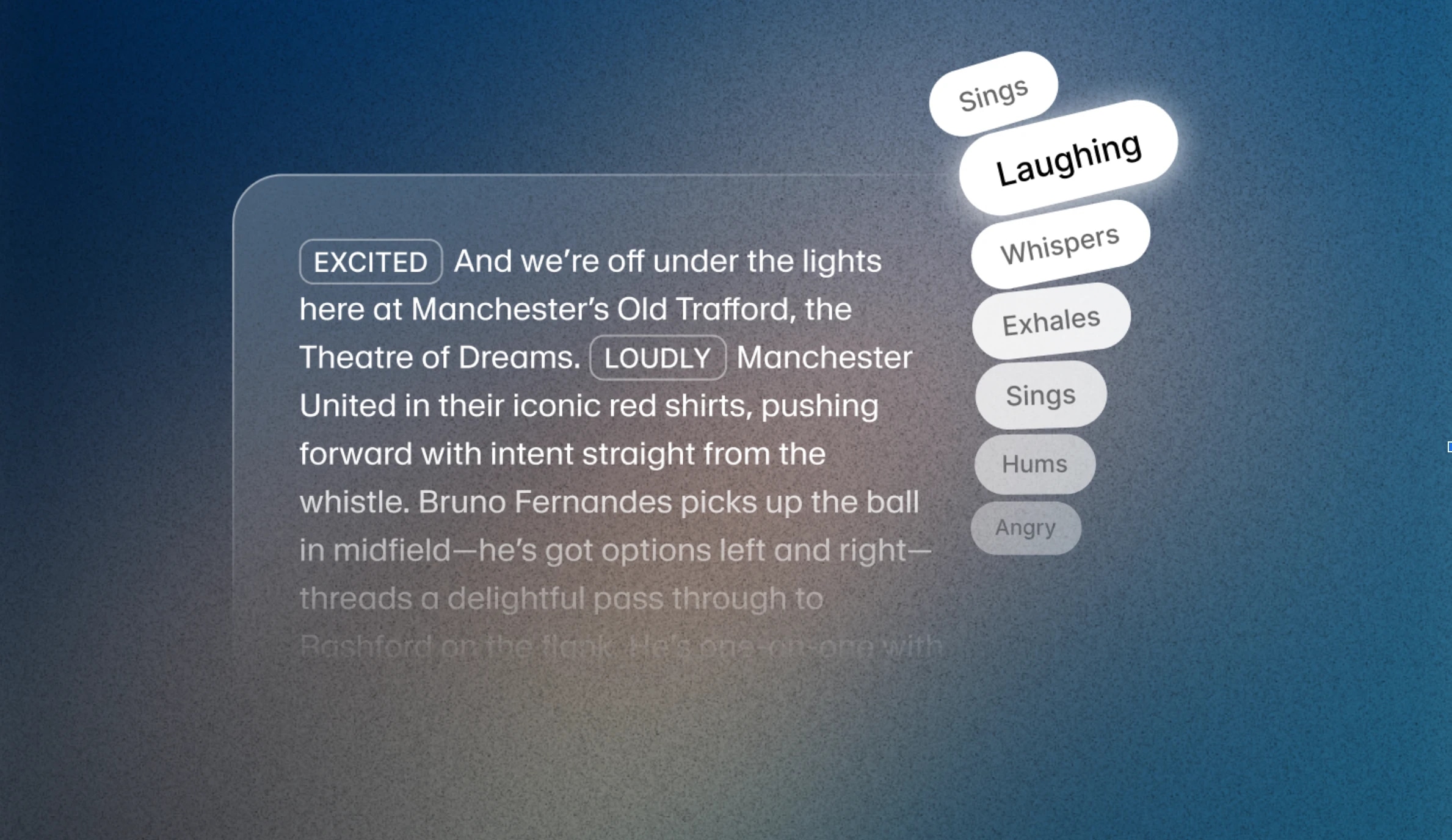

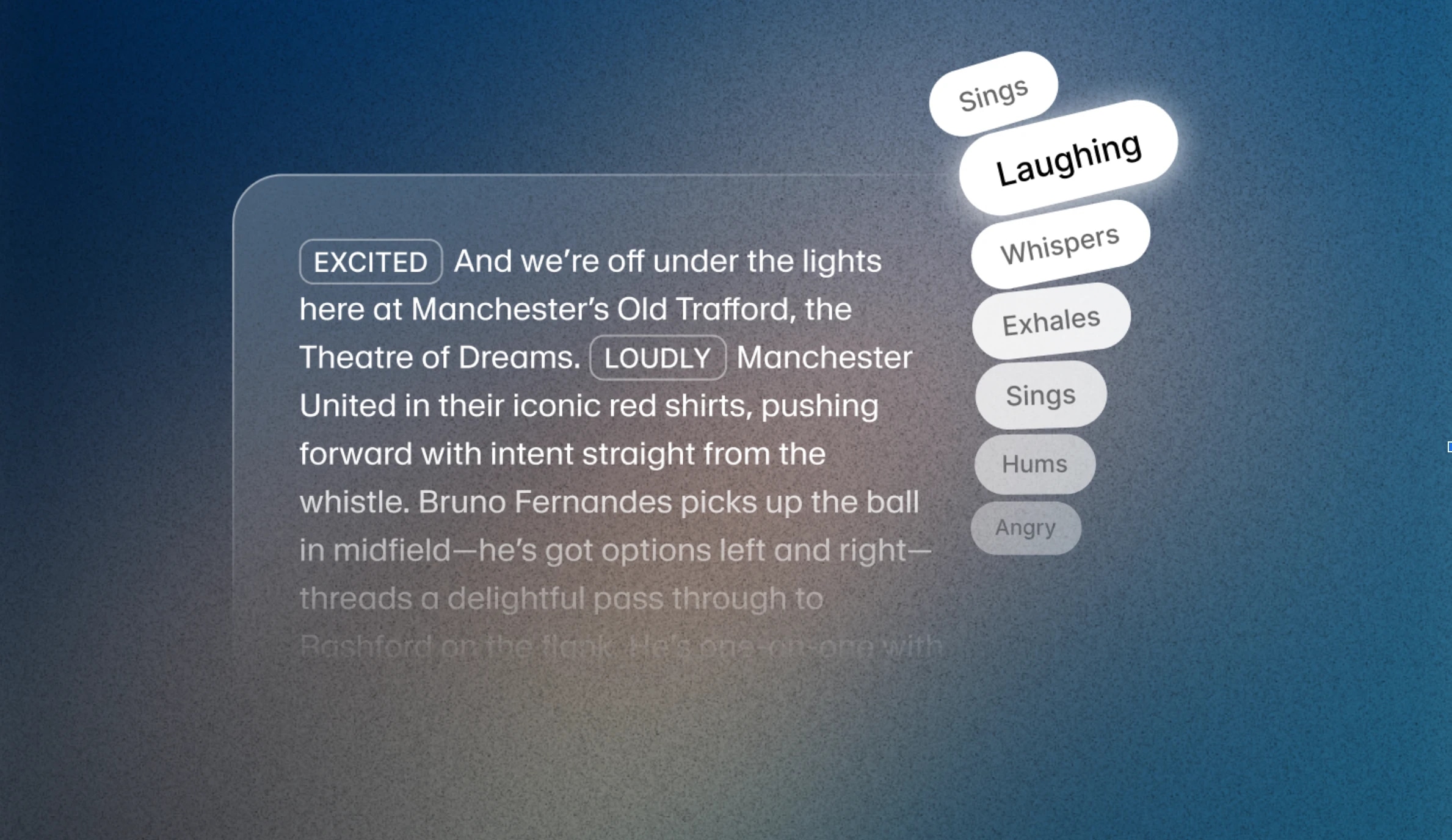

With the release of Eleven v3, audio prompting has become an essential skill. Instead of only typing or pasting the text you want the AI voice to say, you can now use a new feature, Audio Tags, to control everything from emotion to delivery.

Eleven v3 is an alpha research preview of the new model. It requires more prompt engineering than previous models, but the results are impressive.

ElevenLabs Audio Tags are words wrapped in square brackets that the new Eleven v3 model interprets as directions for the audio performance. They range from [excited], [whispers], and [sighs] to [gunshot], [clapping], or [explosion].
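Because tags are plain bracketed words inside the script, ordinary string tools are enough to inspect them before sending text to the model. The sketch below is a minimal illustration, assuming tags never nest and that any bracketed span counts as a tag; `extract_tags` and `strip_tags` are hypothetical helper names, not part of any ElevenLabs library.

```python
import re

# Assumption: a tag is any run of text between one "[" and the next "]".
TAG_PATTERN = re.compile(r"\[([^\[\]]+)\]")


def extract_tags(script: str) -> list[str]:
    """Return the audio tags found in a script, in order of appearance."""
    return [name.strip().lower() for name in TAG_PATTERN.findall(script)]


def strip_tags(script: str) -> str:
    """Return only the spoken text, with tags removed and spacing tidied."""
    without_tags = TAG_PATTERN.sub("", script)
    return re.sub(r"\s+", " ", without_tags).strip()


script = "[whispers] Did you hear that? [sighs] It was probably nothing."
print(extract_tags(script))  # ['whispers', 'sighs']
print(strip_tags(script))    # Did you hear that? It was probably nothing.
```

A check like this can help review which directions a long script contains, or recover the plain text for captions.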

Audio Tags let you shape how AI voices sound, including nonverbal cues such as tone, pauses, and pacing. Whether you are building immersive audiobooks, interactive characters, or dialogue-driven media, these simple script-level tools give you precise control over emotion and delivery.

You can place Audio Tags anywhere in your script to adjust delivery in real time. You can also combine several tags in one script or even in a single sentence. The tags fall into several main categories:

Emotion tags set the emotional tone of the voice, whether serious, intense, or cheerful. For example, you can use one or more of [sad], [angry], [happily], or [sorrowful].

Delivery tags focus more on tone and performance. Use them to adjust volume and energy in scenes that call for restraint or force. Examples: [whispers], [shouts], or even [x accent].

Reaction tags add realism. Natural speech includes reactions, and you can insert spontaneous moments into the speech, such as [laughs], [clears throat], or [sighs].

Behind these features is v3's new architecture. The model understands the context of the text at a deeper level, which lets it follow emotional cues, tone shifts, and speaker transitions more naturally. Combined with Audio Tags, this enables far greater expressiveness than was previously possible in Text to Speech.

You can now also create multi-character dialogue that sounds spontaneous, handling interruptions, mood shifts, and conversational nuance with very little prompting.

Professional Voice Clones (PVCs) are not yet fully optimized for Eleven v3, so clone quality may be lower than with earlier models. During this research phase, it is best to use an Instant Voice Clone (IVC) or a designed voice if you need v3 features. PVC optimization for v3 is coming soon.

Eleven v3 is available in the ElevenLabs interface, and we are offering an 80% discount until the end of June. The public API for Eleven v3 (alpha) is also available. Whether you want to experiment or are ready to scale, now is the time to explore what it can do.
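For readers trying the API, a tagged script is sent as ordinary text. The sketch below only builds the HTTP request and does not send it. It assumes the text-to-speech REST endpoint at `https://api.elevenlabs.io/v1/text-to-speech/{voice_id}`, the `xi-api-key` header, and the model ID `eleven_v3`; none of these are stated in this article, so check them against the current API reference. `build_tts_request` is a hypothetical helper name.

```python
import json
import urllib.request

# Assumption: base URL of the text-to-speech endpoint (verify in the API docs).
API_BASE = "https://api.elevenlabs.io/v1/text-to-speech"


def build_tts_request(
    text: str,
    voice_id: str,
    api_key: str,
    model_id: str = "eleven_v3",  # Assumption: model ID for Eleven v3 (alpha).
) -> urllib.request.Request:
    """Build (but do not send) a POST request carrying a tagged script."""
    body = json.dumps({"text": text, "model_id": model_id}).encode("utf-8")
    return urllib.request.Request(
        url=f"{API_BASE}/{voice_id}",
        data=body,
        headers={"xi-api-key": api_key, "Content-Type": "application/json"},
        method="POST",
    )


request = build_tts_request(
    "[excited] We did it! [laughs] I honestly didn't think it would work.",
    voice_id="YOUR_VOICE_ID",  # placeholder
    api_key="YOUR_API_KEY",    # placeholder
)
print(request.get_method(), request.full_url)
```

To actually generate audio, the request would be passed to `urllib.request.urlopen` with real credentials and the response bytes written to a file. The tags travel inside the `text` field like any other characters.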

Creating AI speech that performs, rather than just reads, depends on mastering Audio Tags. We have put together seven short, hands-on guides that show how tags such as [WHISPERING], [LAUGHS SOFTLY], or [French accent] let you set context, emotion, pacing, and even multi-character dialogue with a single model.

[WHISPERING], [SHOUTING], and [SIGHS] let Eleven v3 react to the moment: raising tension, softening warnings, or pausing to build suspense.

From [pirate voice] to [French accent], tags turn narration into performance. Switch characters mid-sentence and direct full performances without changing models.

[sighs], [excited], or [tired] set the feeling at each moment, adding tension, relief, or humor without re-recording.

[pause], [awe], or [dramatic tone] control rhythm and emphasis so that AI voices guide the listener through each moment.

Shape dialogue with [interrupting], [overlapping], or tone shifts. One model, many voices: natural conversation in a single take.

[pause], [rushed], or [drawn out] give you precise control over tempo, turning plain text into performance.

Use [American accent], [British accent], [Southern US accent], and more for culturally rich voices without switching models.
