WaveForms AI and the vocal Turing test

New startup from OpenAI and Google veterans shares ambitious plans for audio AI, with products still in development

Today, WaveForms AI, founded by former OpenAI and Google veterans, announced its mission to develop audio AI systems whose speech is indistinguishable from a human's. CEO Alexis Conneau emphasized the company's goal of passing the “Speech Turing Test,” which means reaching a 50% preference score, the point at which listeners cannot tell human speech from AI-generated speech. The company is currently in the development phase, with plans to reveal specific products next year.

What is the Speech Turing Test?

The Speech Turing Test is a benchmark for AI audio systems, measuring whether humans can distinguish between AI-generated and human speech. A system passes this test when it achieves a 50% preference score, meaning listeners can’t tell if they’re hearing a person or an AI. ElevenLabs has already made significant strides in achieving this level of indistinguishability, with voices widely recognized for their human-like realism.

How WaveForms AI is tackling the Speech Turing Test

WaveForms AI, founded by former OpenAI and Google veterans, aims to create audio AI systems capable of seamless, human-like communication. Led by Alexis Conneau, the startup focuses on developing models that not only replicate human speech but also capture emotional nuance, making interactions feel more natural and engaging. ElevenLabs’ Text-to-Speech models have set the standard for combining speed and expressiveness, already delivering nuanced and contextually aware speech at scale.

What is a preference score in AI speech systems?

The preference score gauges the indistinguishability of AI-generated speech from human speech. A 50% score signifies that listeners show no clear preference, effectively marking parity between the two. ElevenLabs has consistently achieved high preference scores, with industry-leading adoption by creators, media, and accessibility organizations.
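As a rough illustration of the arithmetic behind a preference score, the sketch below counts how often listeners picked the AI sample in a set of blind A/B trials and checks whether the result is statistically compatible with 50%. The vote labels, the function names, and the choice of a 95% Wilson interval are assumptions made for this example, not a description of how WaveForms AI or ElevenLabs run their evaluations.

```python
import math

def preference_score(votes):
    """Fraction of blind A/B trials in which the listener picked the AI sample.

    `votes` is a list of "ai" / "human" labels (a hypothetical format).
    """
    if not votes:
        raise ValueError("need at least one vote")
    return sum(1 for v in votes if v == "ai") / len(votes)

def wilson_interval(successes, n, z=1.96):
    """Approximate 95% Wilson confidence interval for a proportion."""
    p = successes / n
    denom = 1 + z ** 2 / n
    centre = (p + z ** 2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z ** 2 / (4 * n ** 2)) / denom
    return centre - half, centre + half

def at_parity(votes, z=1.96):
    """True when 50% lies inside the interval, i.e. no clear preference."""
    n = len(votes)
    ai_picks = sum(1 for v in votes if v == "ai")
    low, high = wilson_interval(ai_picks, n, z)
    return low <= 0.5 <= high

# Example: 52 of 100 listeners picked the AI voice.
votes = ["ai"] * 52 + ["human"] * 48
print(preference_score(votes), at_parity(votes))
```

With only a handful of trials almost any score is compatible with 50%, so a parity claim of this kind is only meaningful with a reasonably large listener panel.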

Why does emotional nuance matter in AI audio?

Current AI voice systems often lose emotional subtleties, limiting their ability to convey empathy or engage meaningfully. WaveForms AI claims to address this with their Audio LLMs, which process audio natively to capture context and emotion, enabling richer communication. ElevenLabs has already demonstrated the importance of emotional nuance, offering tools that allow users to fine-tune tone, expressiveness, and pacing to suit any context.

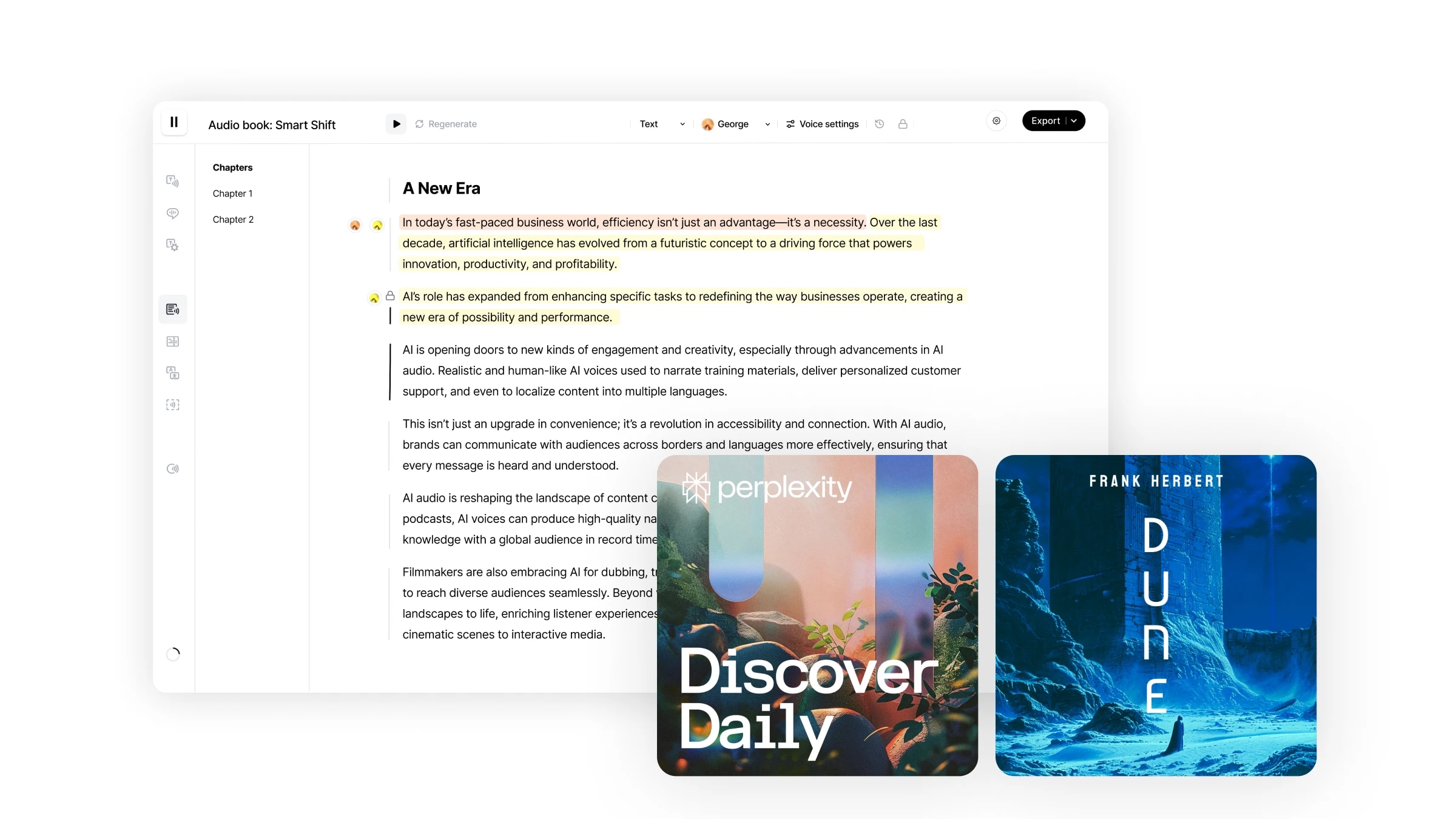

How is WaveForms AI different from existing AI audio systems?

Unlike traditional Text-to-Speech systems, WaveForms AI's end-to-end Audio LLMs aim to capture the depth and complexity of human interaction. Their focus on Emotional General Intelligence (EGI) introduces a social-emotional layer to AI, prioritizing connection and empathy over basic functionality. ElevenLabs has pioneered breakthroughs in emotional depth and flexibility, with tools designed to handle complex, real-world scenarios while being accessible and available today.

What challenges come with achieving the Speech Turing Test?

Developing indistinguishable AI speech systems poses both technical and ethical challenges. Conneau highlights risks like users forming attachments to AI characters and the broader societal implications of AI’s increasing realism. Addressing these issues responsibly is a key focus for WaveForms AI. ElevenLabs has built safeguards, such as “no-go” voice policies and rigorous content moderation, to responsibly navigate these challenges while delivering cutting-edge technology.

Applications of AI systems designed to pass the Speech Turing Test

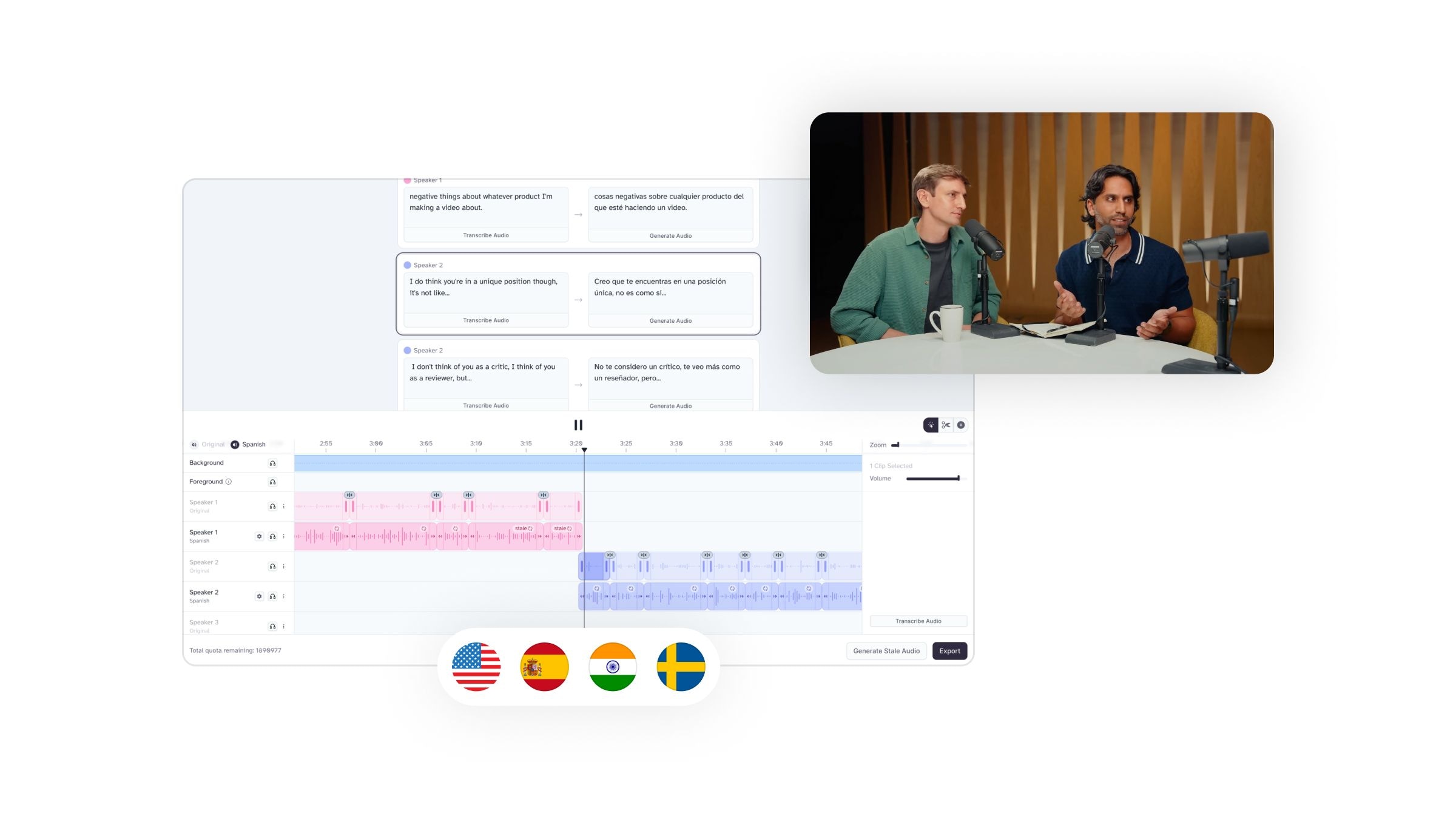

WaveForms AI envisions their technology being used across a broad spectrum of applications, including education, customer support, and entertainment. The ability to create human-like voice interactions opens possibilities for more immersive, empathetic experiences in these areas. ElevenLabs is already powering applications across these fields, from accessible education tools to multilingual media localization, showcasing what’s possible with today’s technology.

The future of AI audio systems

While WaveForms AI's products remain in development, their ambition to redefine AI audio interactions has attracted significant attention, including $40 million in seed funding led by Andreessen Horowitz. As the company works toward solving the Speech Turing Test, its potential to reshape how we interact with technology is immense. ElevenLabs continues to lead in shaping the future of audio AI, delivering solutions that are transforming industries and meeting the needs of users right now.

How WaveForms AI Audio compares to ElevenLabs

WaveForms AI plans to support many audio generation use cases and could become a good general-purpose audio AI toolkit. For now, it remains a product announcement. ElevenLabs, on the other hand, is available today, offering production-grade quality and customization.

Let's briefly assess how WaveForms AI compares against ElevenLabs in key areas like Text-to-Speech and sound generation.

Text-to-Speech

ElevenLabs stands as the clear industry leader in Text-to-Speech technology, offering:

- Support for 70+ languages with authentic accents and cultural nuances

- Advanced emotional intelligence that responds to textual context

- Control over voice characteristics

- High-quality, human-like speech that maintains consistency across long-form content

- An extensive library of natural-sounding voices

- The ability to clone and customize voices

ElevenLabs' technology already delivers reliable, production-ready output that meets professional standards. Its specialized approach consistently produces more natural-sounding voices that capture the subtle nuances of human speech.

Sound Effects

ElevenLabs already provides a streamlined and precise approach to sound effect generation. It offers:

- Instant generation of four different samples for each prompt

- Precise control through detailed text descriptions

- High-quality output suitable for commercial projects

- A comprehensive library of common sound effects

- The ability to create distinctive effects directly from text descriptions

ElevenLabs delivers specialized excellence in both voice and sound effect generation. As one of the best AI sound effect generators, it produces reliable, production-ready output that better serves professional content creators' needs.

How to use ElevenLabs for Text-to-Speech

Transform your content into professional-quality voiceovers with these simple steps:

- Sign up: Create a free or paid account with ElevenLabs

- Choose your voice: Select from a diverse library of natural-sounding voices

- Input your text: Paste or type your script into the interface

- Customize settings: Adjust the speed, tone, and emphasis to match your needs

- Preview and generate: Listen to a sample and generate your final audio output

- Download: Download your high-quality voiceover
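The steps above describe the web interface. For readers who prefer to script the same flow, the sketch below shows roughly what an equivalent call to the ElevenLabs Text-to-Speech REST endpoint might look like using only the Python standard library. The API key, voice ID, output file name, default model ID, and voice setting values are placeholders or assumptions chosen for illustration; check the current API reference before relying on any of them.

```python
import json
import urllib.request

API_BASE = "https://api.elevenlabs.io/v1"

def build_tts_request(api_key, voice_id, text,
                      model_id="eleven_multilingual_v2",
                      stability=0.5, similarity_boost=0.75):
    """Build (but do not send) a Text-to-Speech request.

    The default model and voice settings are illustrative assumptions.
    """
    payload = {
        "text": text,
        "model_id": model_id,
        "voice_settings": {
            "stability": stability,
            "similarity_boost": similarity_boost,
        },
    }
    return urllib.request.Request(
        f"{API_BASE}/text-to-speech/{voice_id}",
        data=json.dumps(payload).encode("utf-8"),
        headers={"xi-api-key": api_key, "Content-Type": "application/json"},
        method="POST",
    )

def synthesize(request, out_path):
    """Send the request and write the returned audio bytes to out_path."""
    with urllib.request.urlopen(request) as response, open(out_path, "wb") as f:
        f.write(response.read())

# Usage (placeholders; requires a real key and voice ID, and network access):
# req = build_tts_request("YOUR_API_KEY", "YOUR_VOICE_ID", "Hello from my script.")
# synthesize(req, "voiceover.mp3")
```

Building the request separately from sending it makes it easy to inspect the payload before spending any credits.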

Final thoughts

The emergence of AI audio tools like WaveForms AI and ElevenLabs marks an exciting evolution in content creation. However, while WaveForms AI has announced impressive ambitions in experimental sound generation and audio manipulation, its products are not yet available to use.

ElevenLabs, on the other hand, is available and production-grade. It's also the leading solution currently on the market for AI Text-to-Speech voice and sound effects generation.

Ready to test out ElevenLabs' AI technology? Sign up today to get started.
