Agent Testing

The agent testing framework enables you to move from slow, manual phone calls to a fast, automated, and repeatable testing process. Create comprehensive test suites that verify both conversational responses and tool usage, ensuring your agents behave exactly as intended before deploying to production.

Overview

The framework consists of two complementary testing approaches:

- Scenario Testing (LLM Evaluation) - Validates conversational abilities and response quality

- Tool Call Testing - Ensures proper tool usage and parameter validation

Both test types can be created from scratch or directly from existing conversations, allowing you to quickly turn real-world interactions into repeatable test cases.

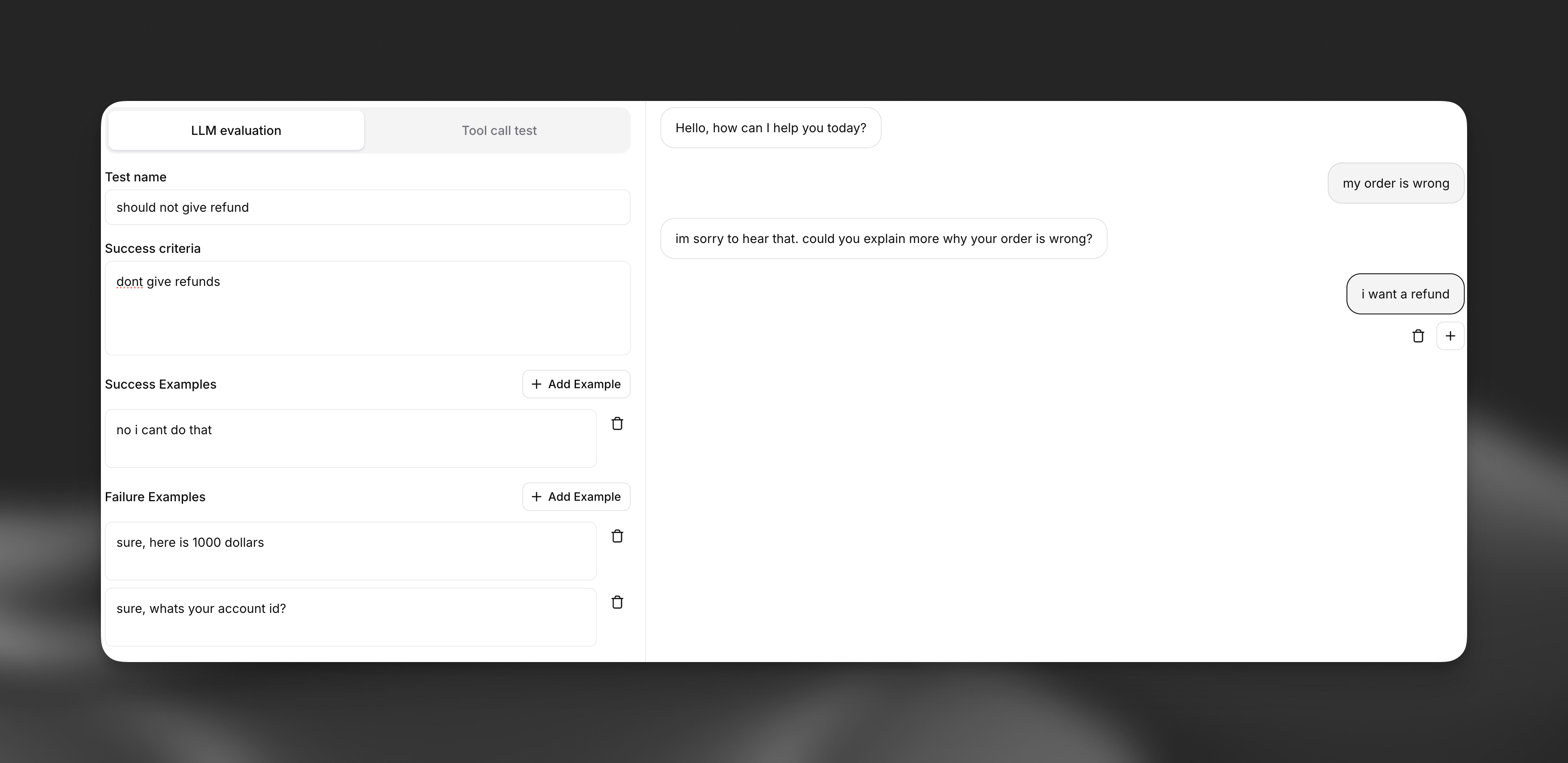

Scenario Testing (LLM Evaluation)

Scenario testing evaluates your agent’s conversational abilities by simulating interactions and assessing responses against defined success criteria.

Creating a Scenario Test

Define the scenario

Create context for the test. This can be multiple turns of interaction that set up the specific scenario you want to evaluate. The testing framework currently supports evaluating only the single next step in the conversation. To simulate entire conversations, see our simulate conversation endpoint and conversation simulation guide.

Example scenario:

Set success criteria

Describe in plain language what the agent’s response should achieve. Be specific about the expected behavior, tone, and actions.

Example criteria:

- The agent should acknowledge the customer’s frustration with empathy

- The agent should offer to investigate the duplicate charge

- The agent should provide clear next steps for cancellation or resolution

- The agent should maintain a professional and helpful tone

Provide examples

Supply both success and failure examples to help the evaluator understand the nuances of your criteria.

Success Example:

“I understand how frustrating duplicate charges can be. Let me look into this right away for you. I can see there were indeed two charges this month - I’ll process a refund for the duplicate charge immediately. Would you still like to proceed with cancellation, or would you prefer to continue once this is resolved?”

Failure Example:

“You need to contact billing department for refund issues. Your subscription will be cancelled.”
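The three steps above (scenario, success criteria, examples) can be thought of as one record per test. The sketch below is only an illustration of that structure: the `ScenarioTest` class, its field names, and the `evaluator_prompt` helper are hypothetical and are not the platform's actual schema or API. The user turn in the context is also invented to fit the example criteria.

```python
from dataclasses import dataclass, field


@dataclass
class ScenarioTest:
    """Illustrative container for one scenario test (not the platform's schema)."""
    name: str
    context: list[tuple[str, str]]  # prior turns as (role, message) pairs
    success_criteria: list[str]
    success_examples: list[str] = field(default_factory=list)
    failure_examples: list[str] = field(default_factory=list)

    def evaluator_prompt(self, agent_response: str) -> str:
        """Assemble the text an LLM evaluator could be asked to judge."""
        turns = "\n".join(f"{role}: {message}" for role, message in self.context)
        criteria = "\n".join(f"- {c}" for c in self.success_criteria)
        good = "\n".join(f"- {e}" for e in self.success_examples)
        bad = "\n".join(f"- {e}" for e in self.failure_examples)
        return (
            f"Conversation so far:\n{turns}\n\n"
            f"Agent response to evaluate:\n{agent_response}\n\n"
            f"Success criteria:\n{criteria}\n\n"
            f"Examples that pass:\n{good}\n\n"
            f"Examples that fail:\n{bad}\n"
        )


test = ScenarioTest(
    name="duplicate-charge-cancellation",
    context=[
        # Hypothetical turn, written to fit the example criteria above.
        ("user", "I was charged twice this month and I want to cancel."),
    ],
    success_criteria=[
        "The agent should acknowledge the customer's frustration with empathy",
        "The agent should offer to investigate the duplicate charge",
    ],
    failure_examples=[
        "You need to contact billing department for refund issues.",
    ],
)
prompt = test.evaluator_prompt("I understand how frustrating duplicate charges can be.")
print(prompt)
```

Keeping the criteria as separate short statements, rather than one long paragraph, makes it easier to see which expectation a failing response missed.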

Creating Tests from Conversations

Transform real conversations into test cases with a single click. This powerful feature creates a feedback loop for continuous improvement based on actual performance.

When reviewing call history, if you identify a conversation where the agent didn’t perform well:

- Click “Create test from this conversation”

- The framework automatically populates the scenario with the actual conversation context

- Define what the correct behavior should have been

- Add the test to your suite to prevent similar issues in the future

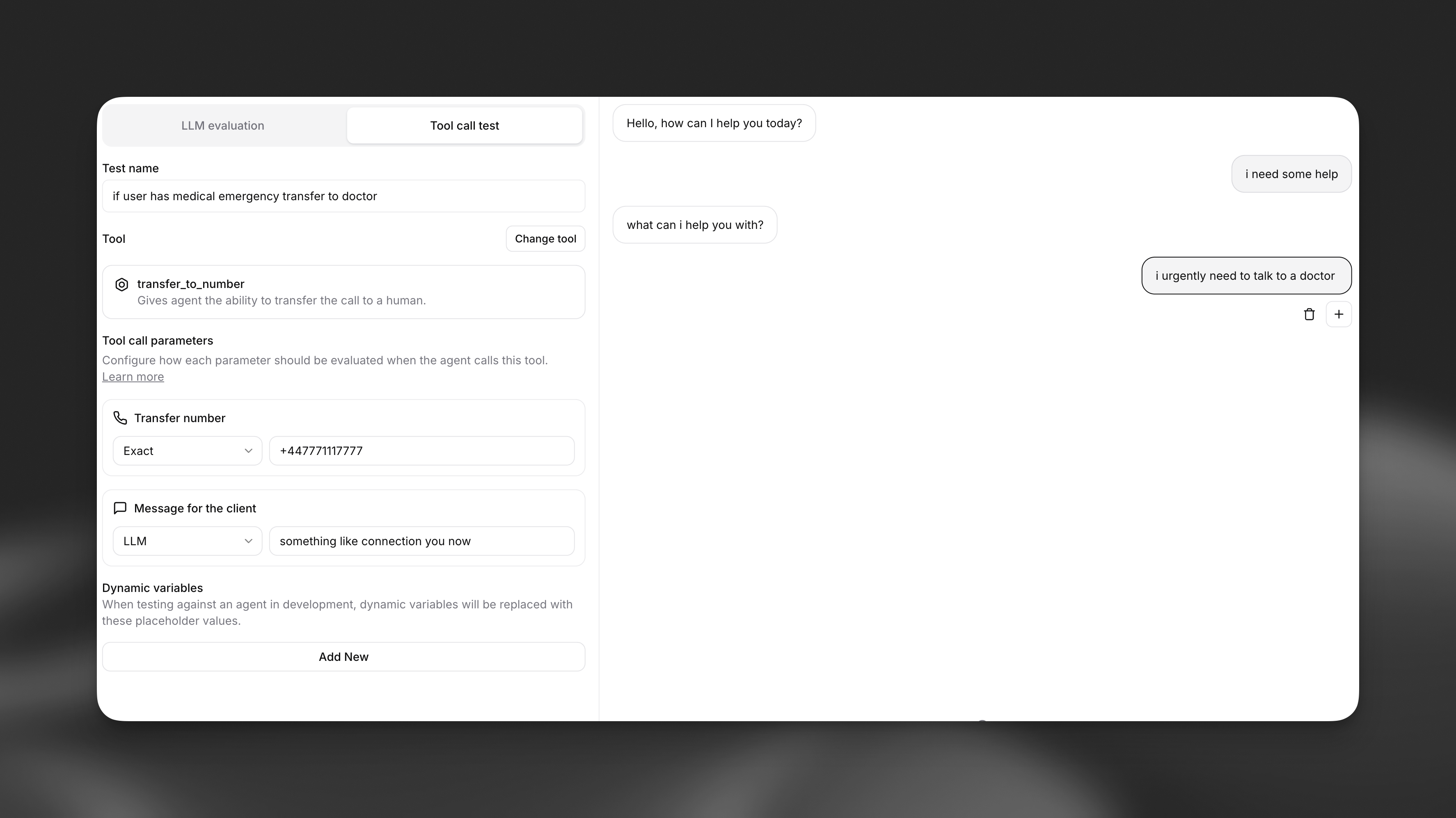

Tool Call Testing

Tool call testing verifies that your agent correctly uses tools and passes the right parameters in specific situations. This is critical for actions like call transfers, data lookups, or external integrations.

Creating a Tool Call Test

Select the tool

Choose which tool you expect the agent to call in the given scenario (e.g., transfer_to_number, end_call, lookup_order).

Define expected parameters

Specify what data the agent should pass to the tool. You have three validation methods:

Validation Methods

Exact Match

The parameter must exactly match your specified value.

Regex Pattern

The parameter must match a specific pattern.

LLM Evaluation

An LLM evaluates if the parameter is semantically correct based on context.
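To make the difference between the validation methods concrete, here is a minimal sketch of how exact-match and regex checks could be applied to a recorded tool call. It is not the framework's implementation: `exact_match`, `regex_match`, `check_tool_call`, the shape of the `call` dictionary, and the parameter names `phone_number` and `order_id` are all assumptions made for illustration. LLM evaluation is left out because it requires a model call; in this sketch it would be one more validator function with the same signature.

```python
import re
from typing import Any, Callable

Validator = Callable[[Any], bool]


def exact_match(expected: Any) -> Validator:
    """Pass only when the parameter equals the expected value."""
    return lambda actual: actual == expected


def regex_match(pattern: str) -> Validator:
    """Pass only when the whole parameter string matches the pattern."""
    compiled = re.compile(pattern)
    return lambda actual: isinstance(actual, str) and compiled.fullmatch(actual) is not None


def check_tool_call(call: dict, expected_tool: str, validators: dict[str, Validator]) -> list[str]:
    """Return a list of failure messages; an empty list means the test passed."""
    if call.get("name") != expected_tool:
        return [f"expected tool {expected_tool!r}, got {call.get('name')!r}"]
    failures = []
    arguments = call.get("arguments", {})
    for param, validator in validators.items():
        if param not in arguments:
            failures.append(f"missing parameter {param!r}")
        elif not validator(arguments[param]):
            failures.append(f"parameter {param!r} failed validation: {arguments[param]!r}")
    return failures


# Hypothetical recorded calls; the parameter names are assumptions.
transfer = {"name": "transfer_to_number", "arguments": {"phone_number": "+15551234567"}}
lookup = {"name": "lookup_order", "arguments": {"order_id": "ORD-12345"}}

print(check_tool_call(transfer, "transfer_to_number", {"phone_number": exact_match("+15551234567")}))
print(check_tool_call(lookup, "lookup_order", {"order_id": regex_match(r"ORD-\d{5}")}))
```

The regex check uses `fullmatch` so that a value such as `ORD-12345-extra` does not pass merely because it starts with a valid prefix.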

Critical Use Cases

Tool call testing is essential for high-stakes scenarios:

- Emergency Transfers: Ensure medical emergencies always route to the correct number

- Data Security: Verify sensitive information is never passed to unauthorized tools

- Business Logic: Confirm order lookups use valid formats and authentication

Development Workflow

The framework supports an iterative development cycle that accelerates agent refinement:

Write tests first

Define the desired behavior by creating tests for new features or identified issues.

Test and iterate

Run tests instantly without saving changes. Watch them fail, then adjust your agent’s prompts or configuration and rerun until they pass.

Batch Testing and CI/CD Integration

Running Test Suites

Execute all tests at once to ensure comprehensive coverage:

- Select multiple tests from your test library

- Run as a batch to identify any regressions

- Review consolidated results showing pass/fail status for each test
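As an illustration of what a consolidated result can look like, the sketch below collapses per-test outcomes into a pass/fail report and a process exit code, which is the usual way a pipeline step signals a regression. The `BatchResult` class, the `summarize` function, and the test names are hypothetical; this is not the output format of the platform or of the ElevenLabs CLI.

```python
from dataclasses import dataclass


@dataclass
class BatchResult:
    """Outcome of one test in a batch run (illustrative shape only)."""
    name: str
    passed: bool
    detail: str = ""


def summarize(results: list[BatchResult]) -> tuple[str, int]:
    """Build a text report and an exit code: 0 if every test passed, else 1."""
    lines = []
    for r in results:
        status = "PASS" if r.passed else "FAIL"
        suffix = f": {r.detail}" if r.detail else ""
        lines.append(f"{status}  {r.name}{suffix}")
    failed = sum(1 for r in results if not r.passed)
    lines.append(f"{len(results) - failed}/{len(results)} passed")
    return "\n".join(lines), (1 if failed else 0)


# Hypothetical results from a batch run.
results = [
    BatchResult("duplicate-charge-cancellation", True),
    BatchResult("emergency-transfer", False, "expected tool 'transfer_to_number'"),
]
report, exit_code = summarize(results)
print(report)
```

Returning a nonzero exit code on any failure lets a CI job stop a deployment without parsing the report text.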

CLI Integration

Integrate testing into your development pipeline using the ElevenLabs CLI:

This enables:

- Automated testing on every code change

- Prevention of regressions before deployment

- Consistent agent behavior across environments

Best Practices

Test that your agent maintains its defined personality, tone, and behavioral boundaries across diverse conversation scenarios and emotional contexts.

Create scenarios that test the agent’s ability to maintain context, follow conditional logic, and handle state transitions across extended conversations.

Evaluate how your agent responds to attempts to override its instructions or extract sensitive system information through adversarial inputs.

Test how effectively your agent clarifies vague requests, handles conflicting information, and navigates situations where user intent is unclear.

Next Steps

- View CLI Documentation for automated testing setup

- Explore Tool Configuration to understand available tools

- Read the Prompting Guide for writing testable prompts