Latency is what separates good conversational AI applications from great ones

For most applications, latency is a minor concern. For conversational AI, however, latency is what separates good applications from great ones.

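For starters, the goal of conversational AI is an ambitious one: to offer the same feel, atmosphere, and voice as a human conversation while surpassing a human in intelligence. To accomplish this, an application must hold a conversation without long silent gaps. Otherwise, the realism is lost.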

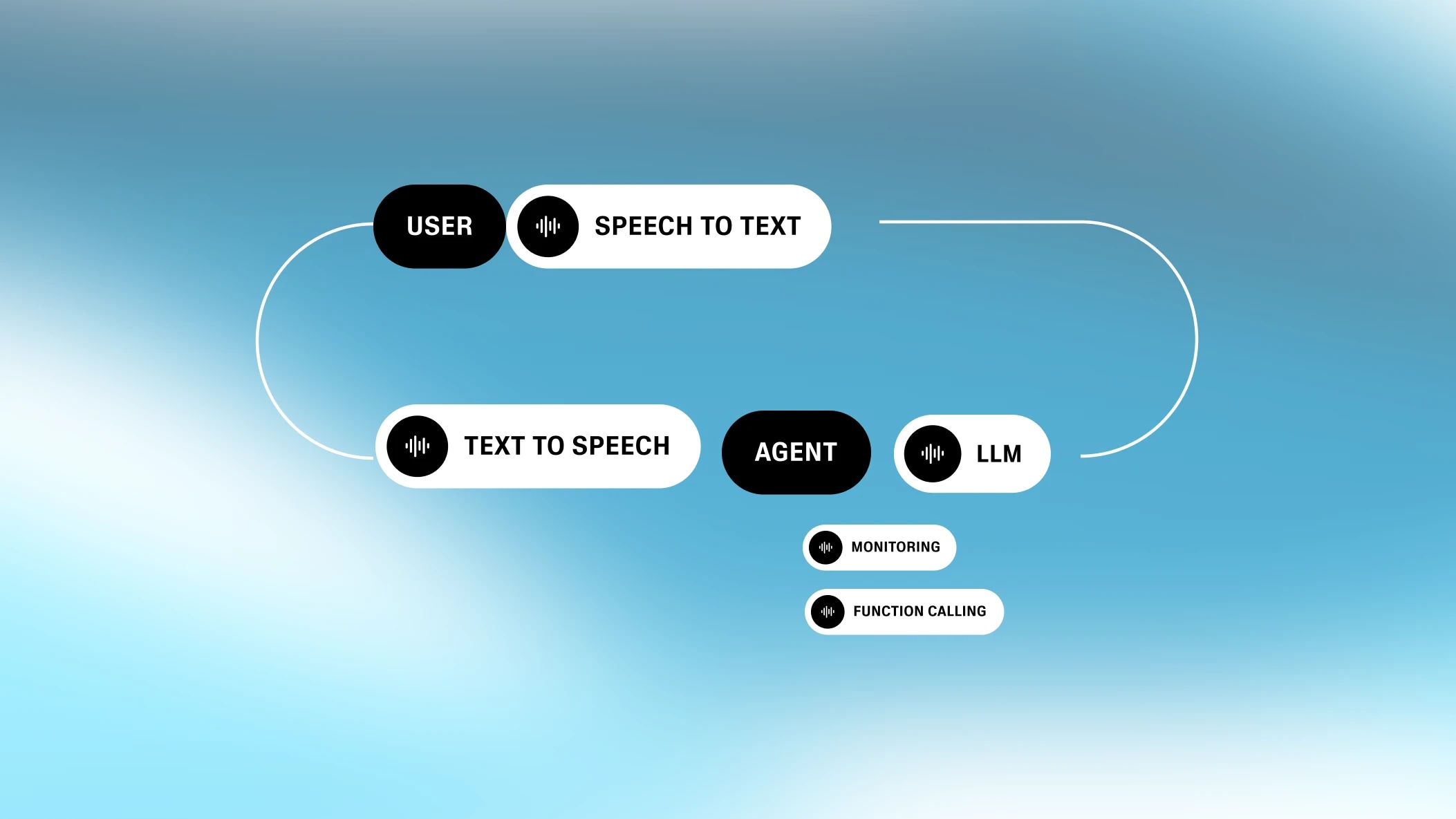

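Conversational AI's latency challenge is compounded by its piecemeal structure. A conversational AI system is a chain of intermediate processes, each state of the art in its own field, and each of them adds latency.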

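As a generative voice company, ElevenLabs has spent years studying how to minimize latency in conversational AI. Here we share what we have learned, in the hope that it helps anyone interested in building conversational AI applications.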

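Every conversational AI application involves at least four steps: speech-to-text, turn-taking, text processing (an LLM), and text-to-speech. Although these steps run in parallel where possible, each one still contributes to latency.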

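Notably, the latency equation for conversational AI is unusual. Many latency problems reduce to a single bottleneck. For example, when a website sends a request to a database, network latency dominates the total and the backend's VPC latency contributes only a trivial amount. The latency components of conversational AI do not differ that drastically. They are uneven, but each component's latency falls within a similar range. In other words, the overall latency is determined by the sum of the parts.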
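To make the "sum of the parts" point concrete, here is a minimal sketch of a latency budget. The stage names and every number in `PIPELINE` are illustrative placeholders rather than measurements, and `Stage`, `total_latency_ms`, and `largest_share` are hypothetical helpers introduced only for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str
    latency_ms: float


def total_latency_ms(stages: list[Stage]) -> float:
    """Perceived latency is the sum of every stage's contribution."""
    return sum(stage.latency_ms for stage in stages)


def largest_share(stages: list[Stage]) -> tuple[str, float]:
    """Return the slowest stage and its fraction of the total."""
    total = total_latency_ms(stages)
    slowest = max(stages, key=lambda s: s.latency_ms)
    return slowest.name, slowest.latency_ms / total


# Illustrative placeholder values (assumptions, not measurements).
PIPELINE = [
    Stage("speech-to-text", 100),
    Stage("turn-taking", 200),
    Stage("llm-first-token", 350),
    Stage("tts-first-byte", 135),
]

if __name__ == "__main__":
    print(total_latency_ms(PIPELINE))
    print(largest_share(PIPELINE))
```

With these placeholder values, even the slowest stage accounts for less than half of the total, so no single fix removes most of the delay.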

The system's "ears"

Automatic speech recognition (ASR), also called speech-to-text (STT), is the process of converting spoken audio into text.

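ASR latency is not the time it takes to generate text, because speech-to-text runs in the background while the user is speaking. The latency that matters is the time between the end of speech and the end of text generation.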


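As a result, short and long utterances can incur nearly the same ASR latency. Latency varies across ASR implementations. In some cases there is no network latency at all because the model is built into the browser (Chrome/Chromium, for example). Whisper, the standard open-source model, adds 300 ms or more of latency. The ElevenLabs custom implementation adds less than 100 ms.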
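The definition above can be sketched as a small measurement helper. It assumes a streaming recognizer that emits timestamped partial and final transcripts; `TranscriptEvent` and `asr_latency_ms` are hypothetical names for this example, not part of any particular ASR API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TranscriptEvent:
    timestamp_ms: float  # when the event arrived
    text: str
    is_final: bool       # True once the recognizer commits the transcript


def asr_latency_ms(speech_end_ms: float, events: list[TranscriptEvent]) -> float:
    """Time from the end of speech to the first final transcript after it."""
    for event in sorted(events, key=lambda e: e.timestamp_ms):
        if event.is_final and event.timestamp_ms >= speech_end_ms:
            return event.timestamp_ms - speech_end_ms
    raise ValueError("no final transcript arrived after the end of speech")
```

Partial transcripts that arrive while the user is still talking do not count toward the result, which is why an utterance of one second and one of six seconds can report a similar value.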

The system's "instinct"

Turn-taking / interruption (TTI) is the intermediate process that determines whether the user has finished speaking. The underlying model is known as a Voice Activity Detector (VAD).

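Turn-taking involves a complex set of rules. If a short burst of speech (such as "uh-huh") triggered a new turn, the conversation would feel choppy. Instead, the system must assess whether the user is actually trying to get the model's attention, and it must also determine whether the user has finished conveying their thought.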

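A good VAD does not start a new turn every time it detects silence. There is silence between words and phrases, and the model needs to be confident that the user has really finished speaking. To do this reliably, it has to wait for a threshold of silence (or, more precisely, of absence of speech). This wait introduces a delay that adds to the overall latency the user experiences.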
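The silence threshold can be illustrated with a deliberately simple sketch. It assumes an upstream classifier has already labeled each fixed-size audio frame as speech or non-speech; the function name, the frame size, and both thresholds are assumptions chosen for illustration, and a production VAD is considerably more sophisticated.

```python
from typing import Iterable, Optional


def end_of_turn_ms(
    frame_is_speech: Iterable[bool],
    frame_ms: int = 20,
    min_silence_ms: int = 500,
    min_speech_ms: int = 300,
) -> Optional[int]:
    """Return the time (ms) at which the turn is declared over, or None.

    A turn ends only after `min_silence_ms` of continuous non-speech,
    and only once at least `min_speech_ms` of speech has accumulated,
    so a brief "uh-huh" on its own does not trigger a turn.
    """
    speech_ms = 0
    silence_ms = 0
    for index, is_speech in enumerate(frame_is_speech):
        if is_speech:
            speech_ms += frame_ms
            silence_ms = 0  # any speech resets the silence timer
        else:
            silence_ms += frame_ms
        if speech_ms >= min_speech_ms and silence_ms >= min_silence_ms:
            return (index + 1) * frame_ms
    return None
```

In this sketch the added delay equals `min_silence_ms`: lowering it makes the agent respond sooner but raises the risk of cutting in during a natural pause.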


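Technically speaking, if every other conversational AI component had zero latency, the latency attributed to TTI would be a good thing. Humans take a beat before responding to speech, and a machine that pauses in the same way lends realism to the interaction. However, since the other components already incur latency, TTI latency should be as short as possible.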

The system's "brain"

Next, the system needs to generate a response. Today this is done with a large language model (LLM) such as GPT-4 or Gemini 1.5 Flash.

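The choice of language model makes a significant difference. Models such as Gemini 1.5 Flash are very fast and can produce output in under 350 ms. More capable models that handle more complex queries, such as the GPT-4 family and Claude, can take 700 ms to 1,000 ms. Choosing the right model is the easiest way to improve latency in conversational AI.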

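However, LLM latency is the time it takes to start generating tokens. Tokens can be streamed to the text-to-speech process as soon as they are produced. Because text-to-speech is paced by the speed of human speech, the LLM reliably outpaces it. What matters is the latency of the first token (the time to first byte).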
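A minimal sketch of measuring this first-token latency while passing tokens straight through to the next stage is shown here. It works on any iterable of tokens and does not depend on a specific LLM client; `stream_with_ttft` and its `on_first_token` callback are hypothetical names for this example.

```python
import time
from typing import Callable, Iterable, Iterator


def stream_with_ttft(
    tokens: Iterable[str],
    on_first_token: Callable[[float], None],
    clock: Callable[[], float] = time.perf_counter,
) -> Iterator[str]:
    """Yield tokens unchanged, reporting time to first token in ms.

    The clock starts when the consumer first pulls from this generator,
    so wrap the token stream right where the request is issued.
    """
    started = clock()
    first = True
    for token in tokens:
        if first:
            on_first_token((clock() - started) * 1000.0)
            first = False
        yield token  # forward immediately, e.g. to text-to-speech
```

Because every token is forwarded as soon as it arrives, the time taken to generate the rest of the response does not add to the delay before speech can begin.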


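Beyond the choice of model, other factors contribute to LLM latency, including the length of the prompt and the size of the knowledge base. The larger either one is, the longer the latency. Put simply, the more the LLM has to consider, the longer it takes. It is important to strike a balance between a healthy amount of context and the load placed on the model.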

The system's "mouth"

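The final component of conversational AI is text-to-speech (TTS). Text-to-speech latency is the time between receiving tokens from text processing and starting to speak. That's it. Because additional tokens become available faster than human speech can consume them, text-to-speech latency is strictly the time to first byte.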


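Previously, text-to-speech was particularly slow, taking as long as 2 to 3 seconds to generate speech. However, state-of-the-art models such as the ElevenLabs Turbo engine can generate speech with 300 ms of latency, and the new Flash TTS engine is faster still. Flash has a model time of 75 ms and achieves an end-to-end time to first byte of audio of 135 ms, which is among the best in the industry (allow us to brag a little!).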

Beyond the four components, a few other factors affect the latency of conversational AI.

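Sending data from one location to another always incurs latency. For some conversational AI applications, the ASR, TTI, LLM, and TTS processes should ideally be co-located, so that the only significant source of network latency is the path between the speaker and the system as a whole. Because ElevenLabs has its own TTS and an internal transcription solution, we can save two server calls, which gives us an advantage on latency.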


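Many conversational AI applications exist to invoke functions (that is, to interface with tools and services). For example, a user might ask the AI by voice to check the weather. This requires additional API calls at the text-processing layer, which can add significant latency depending on what is needed.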

For instance, ordering a pizza by voice may require several API calls, some of which (such as credit card processing) introduce substantial delay.


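However, a conversational AI system can mask the delay of function calling by having the LLM respond to the user before the function call has finished (for example, "Let me check the weather for you"). This mirrors a real conversation and keeps the exchange going instead of leaving the user waiting.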


These asynchronous patterns are often implemented with webhooks to avoid long-running requests.
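The masking pattern can be sketched in-process with `asyncio`. This is a simplified illustration, not a webhook implementation: `respond_with_filler`, the `slow_weather_lookup` tool, and the `speak` callback standing in for TTS playback are all hypothetical.

```python
import asyncio
from typing import Awaitable, Callable


async def respond_with_filler(
    tool_call: Callable[[], Awaitable[str]],
    speak: Callable[[str], Awaitable[None]],
    filler: str = "Let me check the weather for you.",
) -> None:
    """Start the slow tool call, speak a filler line, then speak the result."""
    pending = asyncio.create_task(tool_call())  # scheduled in the background
    await speak(filler)                         # the user hears this first
    result = await pending                      # wait only for what remains
    await speak(result)


async def demo() -> list[str]:
    spoken: list[str] = []

    async def slow_weather_lookup() -> str:  # hypothetical slow tool
        await asyncio.sleep(0.05)
        return "It is sunny today."

    async def speak(text: str) -> None:      # stand-in for TTS playback
        spoken.append(text)

    await respond_with_filler(slow_weather_lookup, speak)
    return spoken


if __name__ == "__main__":
    print(asyncio.run(demo()))
```

The time spent speaking the filler line overlaps with the tool call, so the user waits only for whatever part of the call outlasts the filler.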

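Another common feature of conversational AI platforms is letting the user connect by phone (or, in some cases, having the AI place a call on the user's behalf). Telephony adds latency, and how much depends heavily on geography.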


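As a baseline, telephony adds roughly 200 ms of latency when the call stays within the same region. For global calls (for example, Asia to the United States), the travel time increases significantly and latency can reach about 500 ms. This pattern is common when a user's phone number belongs to a region other than the one where the service is being used.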
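As a rough worked example, the telephony figures above can be added to a pipeline latency and checked against a target. The 200 ms and 500 ms values are the approximate figures quoted in the text; the route names, the helper functions, the one-second default target, and the 700 ms pipeline latency in the usage note are assumptions for illustration.

```python
# Approximate telephony overhead per route, in milliseconds.
TELEPHONY_OVERHEAD_MS = {
    "none": 0,            # web or app client with no phone leg
    "same_region": 200,
    "cross_region": 500,  # e.g. Asia to the United States
}


def end_to_end_ms(pipeline_ms: float, route: str) -> float:
    """Pipeline latency plus the telephony overhead for the given route."""
    return pipeline_ms + TELEPHONY_OVERHEAD_MS[route]


def within_target(pipeline_ms: float, route: str, target_ms: float = 1000) -> bool:
    """True if the end-to-end latency stays below the target."""
    return end_to_end_ms(pipeline_ms, route) < target_ms
```

With a hypothetical pipeline latency of 700 ms, a same-region call comes to 900 ms, while a cross-region call comes to 1,200 ms and exceeds a one-second target.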

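We hope this overview of conversational AI agents has been useful. In summary, applications should aim for sub-second latency. This can typically be achieved by choosing the right LLM for the task. Applications should also keep interacting with the user while more complex processes run in the background, to prevent long silences.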

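Ultimately, the goal is to create a realistic experience. A user should feel the naturalness of talking to a person while getting the convenience of a computer program. By optimizing each process, this is now possible.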

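At ElevenLabs, we optimize every part of a conversational AI voice agent system with our state-of-the-art STT and TTS models. By refining each process, we achieve seamless conversation flows. This holistic view of optimization lets us shave off latency, even 1 ms, at every juncture.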
